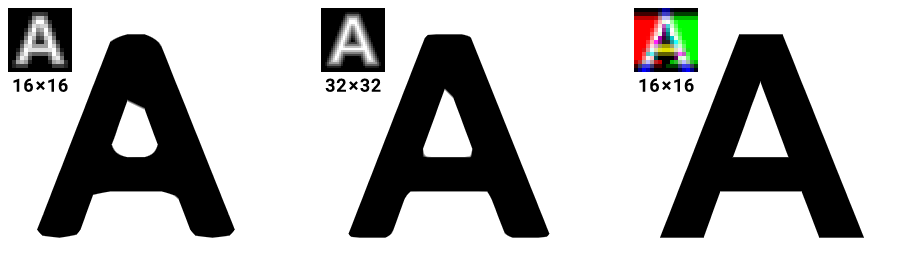

Today’s journey is Anti-Aliasing and the destination is Analytical Anti-Aliasing. Getting rid of rasterization jaggies is an art-form with decades upon decades of maths, creative techniques and non-stop innovation. With so many years of research and development, there are many flavors.

The flavors range from the simple but resource-intensive SSAA, over the theory-dense SMAA, to machine learning with DLAA. Same goal - vastly different approaches. We’ll take a look at how they work, before introducing a new way to look at the problem - the ✨analytical🌟 way. The perfect Anti-Aliasing exists and is simpler than you think.

Having implemented it multiple times over the years, I'll also share some juicy secrets I have never read anywhere before.

The Setup #

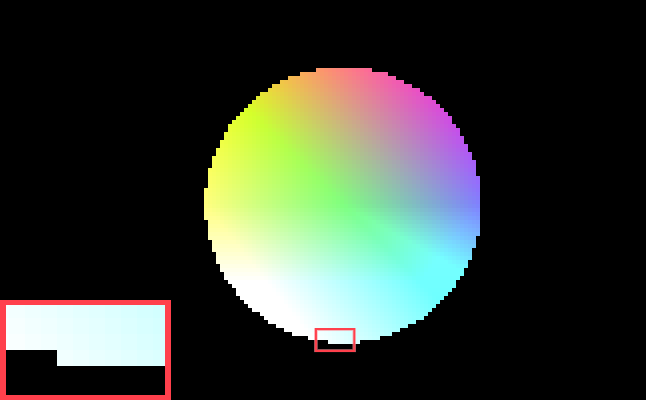

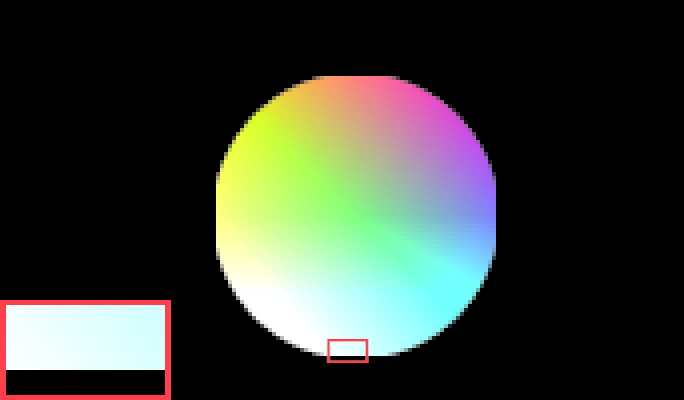

To understand the Anti-Aliasing algorithms, we will implement them along the way! The following WebGL canvases draw a moving circle. Anti-Aliasing cannot be fully understood with just images; movement is essential. The red box shows a 4x zoom. Rendering is done at the native resolution of your device, which is important for judging sharpness.

Please pixel-peep to judge sharpness and aliasing closely. Resolution of your screen too high to see aliasing? Lower the resolution with the following buttons, which will integer-scale the rendering.

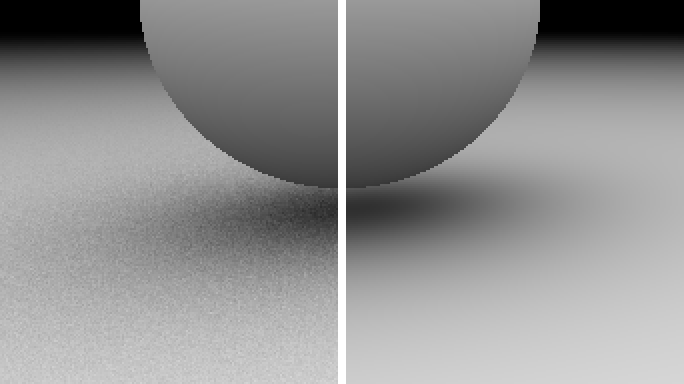

Screenshot, in case WebGL doesn't work

WebGL Vertex Shader circle.vs

    /* Our Vertex data for the Quad */
    attribute vec2 vtx;
    attribute vec3 col;

    /* The coordinates that will be used for our drawing operations */
    varying vec2 uv;
    /* Color for the fragment shader */
    varying vec3 color;

    /* Aspect ratio */
    uniform float aspect_ratio;
    /* Position offset for the animation */
    uniform vec2 offset;
    /* Size of the Unit Quad */
    uniform float size;

    void main()
    {
        /* Assign the vertices to be used as the distance field for drawing.
           This will be linearly interpolated before going to the fragment
           shader */
        uv = vtx;
        /* Sending some nice color to the fragment shader */
        color = col;

        vec2 vertex = vtx;
        /* correct for aspect ratio */
        vertex.x *= aspect_ratio;
        /* Shrink the Quad and thus the "canvas", that the circle is drawn on */
        vertex *= size;
        /* Make the circle move in a circle, heh :] */
        vertex += offset;

        /* Vertex Output */
        gl_Position = vec4(vertex, 0.0, 1.0);
    }

WebGL Fragment Shader circle.fs

    precision mediump float;
    /* uv coordinates from the vertex shader */
    varying vec2 uv;
    /* color from the vertex shader */
    varying vec3 color;

    void main(void)
    {
        /* Clamped and scaled uv.y added to color simply to make the bottom of
           the circle white, so the contrast is high and you can see strong
           aliasing */
        vec3 finalColor = color + clamp( - uv.y * 0.4, 0.0, 1.0);

        /* Discard fragments outside radius 1 from the center */
        if (length(uv) < 1.0)
            gl_FragColor = vec4(finalColor, 1.0);
        else
            discard;
    }

WebGL Javascript circleSimple.js

function setupSimple(canvasId, circleVtxSrc, circleFragSrc, simpleColorFragSrc, blitVtxSrc, blitFragSrc, redVtxSrc, redFragSrc, radioName, showQuadOpt) { /* Init */ const canvas = document.getElementById(canvasId); let circleDrawFramebuffer, frameTexture; let buffersInitialized = false; let showQuad = false; let resDiv = 1; const gl = canvas.getContext('webgl', { preserveDrawingBuffer: false, antialias: false, alpha: true, } ); /* Render Resolution */ const radios = document.querySelectorAll(`input[name="${radioName}"]`); radios.forEach(radio => { /* Force set to 1 to fix a reload bug in Firefox Android */ if (radio.value === "1") radio.checked = true; radio.addEventListener('change', (event) => { resDiv = event.target.value; stopRendering(); startRendering(); }); }); /* Show Quad instead of circle choise */ const showQuadOption = document.querySelectorAll(`input[name="${showQuadOpt}"]`); showQuadOption.forEach(radio => { /* Force set to 1 to fix a reload bug in Firefox Android */ if (radio.value === "false") radio.checked = true; radio.addEventListener('change', (event) => { showQuad = (event.target.value === "true"); stopRendering(); startRendering(); }); }); /* Shaders */ /* Circle Shader */ const circleShd = compileAndLinkShader(gl, circleVtxSrc, circleFragSrc); const aspect_ratioLocation = gl.getUniformLocation(circleShd, "aspect_ratio"); const offsetLocationCircle = gl.getUniformLocation(circleShd, "offset"); const sizeLocationCircle = gl.getUniformLocation(circleShd, "size"); /* SimpleColor Shader */ const simpleColorShd = compileAndLinkShader(gl, circleVtxSrc, simpleColorFragSrc); const aspect_ratioLocationSimple = gl.getUniformLocation(simpleColorShd, "aspect_ratio"); const offsetLocationCircleSimple = gl.getUniformLocation(simpleColorShd, "offset"); const sizeLocationCircleSimple = gl.getUniformLocation(simpleColorShd, "size"); /* Blit Shader */ const blitShd = compileAndLinkShader(gl, blitVtxSrc, blitFragSrc); const transformLocation = 
gl.getUniformLocation(blitShd, "transform"); const offsetLocationPost = gl.getUniformLocation(blitShd, "offset"); /* Simple Red Box */ const redShd = compileAndLinkShader(gl, redVtxSrc, redFragSrc); const transformLocationRed = gl.getUniformLocation(redShd, "transform"); const offsetLocationRed = gl.getUniformLocation(redShd, "offset"); const aspect_ratioLocationRed = gl.getUniformLocation(redShd, "aspect_ratio"); const thicknessLocation = gl.getUniformLocation(redShd, "thickness"); const pixelsizeLocation = gl.getUniformLocation(redShd, "pixelsize"); const vertex_buffer = gl.createBuffer(); gl.bindBuffer(gl.ARRAY_BUFFER, vertex_buffer); gl.bufferData(gl.ARRAY_BUFFER, unitQuad, gl.STATIC_DRAW); gl.vertexAttribPointer(0, 2, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 0); gl.vertexAttribPointer(1, 3, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 2 * Float32Array.BYTES_PER_ELEMENT); gl.enableVertexAttribArray(0); gl.enableVertexAttribArray(1); setupTextureBuffers(); const circleOffsetAnim = new Float32Array([ 0.0, 0.0 ]); let aspect_ratio = 0; let last_time = 0; let redrawActive = false; function setupTextureBuffers() { gl.deleteFramebuffer(circleDrawFramebuffer); circleDrawFramebuffer = gl.createFramebuffer(); gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); frameTexture = setupTexture(gl, canvas.width / resDiv, canvas.height / resDiv, frameTexture, gl.NEAREST); gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, frameTexture, 0); buffersInitialized = true; } gl.enable(gl.BLEND); gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA); function redraw(time) { redrawActive = true; if (!buffersInitialized) { setupTextureBuffers(); } last_time = time; /* Setup PostProcess Framebuffer */ gl.viewport(0, 0, canvas.width / resDiv, canvas.height / resDiv); gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); gl.clear(gl.COLOR_BUFFER_BIT); gl.useProgram(circleShd); /* Draw Circle Animation */ 
gl.uniform1f(aspect_ratioLocation, aspect_ratio); var radius = 0.1; var speed = (time / 10000) % Math.PI * 2; circleOffsetAnim[0] = radius * Math.cos(speed) + 0.1; circleOffsetAnim[1] = radius * Math.sin(speed); gl.uniform2fv(offsetLocationCircle, circleOffsetAnim); gl.uniform1f(sizeLocationCircle, circleSize); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); if (showQuad) { gl.useProgram(simpleColorShd); gl.uniform1f(aspect_ratioLocationSimple, aspect_ratio); gl.uniform2fv(offsetLocationCircleSimple, circleOffsetAnim); gl.uniform1f(sizeLocationCircleSimple, circleSize); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); } gl.viewport(0, 0, canvas.width, canvas.height); gl.useProgram(blitShd); gl.bindFramebuffer(gl.FRAMEBUFFER, null); /* Simple Passthrough */ gl.uniform4f(transformLocation, 1.0, 1.0, 0.0, 0.0); gl.uniform2f(offsetLocationPost, 0.0, 0.0); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Scaled image in the bottom left */ gl.uniform4f(transformLocation, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationPost, circleOffsetAnim); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Draw Red box for viewport illustration */ gl.useProgram(redShd); gl.uniform1f(aspect_ratioLocationRed, (1.0 / aspect_ratio) - 1.0); gl.uniform1f(thicknessLocation, 0.2); gl.uniform1f(pixelsizeLocation, (1.0 / canvas.width) * 50); gl.uniform4f(transformLocationRed, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationRed, circleOffsetAnim); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.uniform1f(thicknessLocation, 0.1); gl.uniform1f(pixelsizeLocation, 0.0); gl.uniform4f(transformLocationRed, 0.5, 0.5, 0.0, 0.0); gl.uniform2f(offsetLocationRed, -0.75, -0.75); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); redrawActive = false; } let isRendering = false; let animationFrameId; function onResize() { const dipRect = canvas.getBoundingClientRect(); const width = Math.round(devicePixelRatio * dipRect.right) - Math.round(devicePixelRatio * dipRect.left); const height = Math.round(devicePixelRatio * dipRect.bottom) - 
Math.round(devicePixelRatio * dipRect.top); if (canvas.width !== width || canvas.height !== height) { canvas.width = width; canvas.height = height; setupTextureBuffers(); aspect_ratio = 1.0 / (width / height); stopRendering(); startRendering(); } } window.addEventListener('resize', onResize, true); onResize(); function renderLoop(time) { if (isRendering) { redraw(time); animationFrameId = requestAnimationFrame(renderLoop); } } function startRendering() { /* Start rendering, when canvas visible */ isRendering = true; renderLoop(last_time); } function stopRendering() { /* Stop another redraw being called */ isRendering = false; cancelAnimationFrame(animationFrameId); while (redrawActive) { /* Spin on draw calls being processed. To simplify sync. In reality this code is block is never reached, but just in case, we have this here. */ } /* Force the rendering pipeline to sync with CPU before we mess with it */ gl.finish(); /* Delete the important buffer to free up memory */ gl.deleteTexture(frameTexture); gl.deleteFramebuffer(circleDrawFramebuffer); buffersInitialized = false; } function handleIntersection(entries) { entries.forEach(entry => { if (entry.isIntersecting) { if (!isRendering) startRendering(); } else { stopRendering(); } }); } /* Only render when the canvas is actually on screen */ let observer = new IntersectionObserver(handleIntersection); observer.observe(canvas); }

Let’s start out simple. Using GLSL shaders, we tell the GPU of your device to draw a circle in the simplest and most naive way possible, as seen in circle.fs above: if the length() from the center is 1.0 or more, we discard the pixel.
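To see how all-or-nothing this test is without a GPU, here is a minimal CPU sketch in JavaScript. It applies the same `length(uv) < 1.0` check as circle.fs to the center of every pixel in a tiny grid. The function name `rasterizeCircleNaive`, the grid size and the text output are made up for illustration and are not part of the demo code.

```javascript
// CPU sketch of the inside/outside test from circle.fs.
// Assumption: the circle fills the whole grid, one sample per pixel center.
function rasterizeCircleNaive(gridSize) {
  const rows = [];
  for (let y = 0; y < gridSize; y++) {
    let row = "";
    for (let x = 0; x < gridSize; x++) {
      // Map the pixel center to the -1..+1 range, like the interpolated `uv`
      const u = ((x + 0.5) / gridSize) * 2 - 1;
      const v = ((y + 0.5) / gridSize) * 2 - 1;
      // Same decision as the shader: draw if length(uv) < 1.0, else discard
      row += Math.hypot(u, v) < 1.0 ? "#" : ".";
    }
    rows.push(row);
  }
  return rows;
}

console.log(rasterizeCircleNaive(8).join("\n"));
```

Every pixel ends up either fully drawn or fully discarded. There is no in-between value that could soften the stair steps.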

The circle is blocky, especially at smaller resolutions. More painfully, there is strong “pixel crawling”, an artifact that’s very obvious when there is any kind of movement. As the circle moves, rows of pixels pop in and out of existence and the stair steps of the pixelation move along the side of the circle like beads of different speeds.

The low ¼ and ⅛ resolutions aren't just there for extreme pixel-peeping, but also to represent small elements or ones at large distance in 3D.

At lower resolutions these artifacts come together to destroy the circular form. The combination of slow movement and low resolution causes one side’s pixels to come into existence, before the other side’s pixels disappear, causing a wobble. Axis-alignment with the pixel grid causes “plateaus” of pixels at every 90° and 45° position.
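The wobble can be reproduced with a few lines of JavaScript. The sketch below looks at a single row of pixels through the center of a small circle and lists which pixels pass the "pixel center inside the circle" test while the circle slides to the right. The helper `coveredPixelsInRow` and the numbers (radius 2.3 px, 10 px wide row) are assumptions chosen for illustration.

```javascript
// Hypothetical helper: which pixels of one row does the circle cover?
// centerX and radius are in pixel units. A pixel counts as covered when its
// center lies inside the circle, just like the test in circle.fs.
function coveredPixelsInRow(centerX, radius, rowWidth) {
  const covered = [];
  for (let x = 0; x < rowWidth; x++) {
    if (Math.abs(x + 0.5 - centerX) < radius) covered.push(x);
  }
  return covered;
}

// Slide a small circle slowly to the right and watch the row change
for (const centerX of [4.0, 4.25, 4.6, 4.85]) {
  const covered = coveredPixelsInRow(centerX, 2.3, 10);
  console.log(centerX, "pixels:", covered.join(","), "width:", covered.length);
}
```

The row is 4 pixels wide, then 5, then 5, then 4 again: the right side gains a pixel before the left side loses one, so the circle briefly appears wider than it is.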

Technical breakdown #

Understanding the GPU code is not necessary to follow this article, but will help to grasp what's happening when we get to the analytical bits.

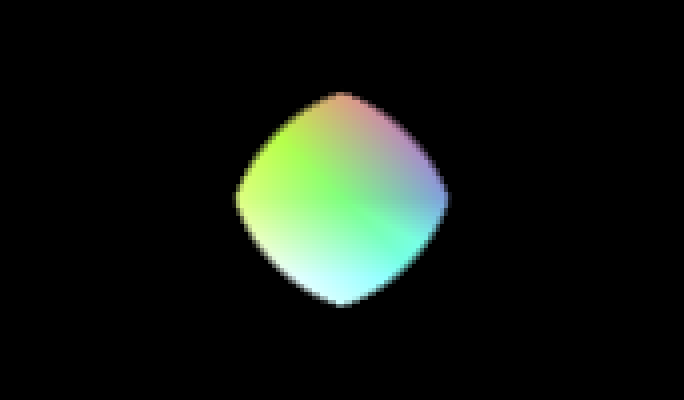

4 vertices making up a quad are sent to the GPU in the vertex shader circle.vs, where they are received as attribute vec2 vtx. The coordinates are of a “unit quad”, meaning the coordinates look like the following image. With one famous exception, all GPUs use triangles, so the quad is actually made up of two triangles.

The vertices here are given to the fragment shader circle.fs via varying vec2 uv. The fragment shader is called per fragment (here fragments are pixel-sized) and the varying is interpolated linearly with perspective corrected, barycentric coordinates, giving us a uv coordinate per pixel from -1 to +1 with zero at the center.
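What the hardware does with the varying can be sketched on the CPU. The JavaScript below interpolates `uv` inside one of the quad's two triangles using barycentric weights. Because circle.vs outputs `w = 1.0`, perspective correction has no effect here, so the sketch skips it. The function names and the 100 px quad are assumptions for illustration.

```javascript
// Barycentric weights of point p inside the triangle (a, b, c)
function barycentricWeights(p, a, b, c) {
  const denom = (b.y - c.y) * (a.x - c.x) + (c.x - b.x) * (a.y - c.y);
  const w0 = ((b.y - c.y) * (p.x - c.x) + (c.x - b.x) * (p.y - c.y)) / denom;
  const w1 = ((c.y - a.y) * (p.x - c.x) + (a.x - c.x) * (p.y - c.y)) / denom;
  return [w0, w1, 1 - w0 - w1];
}

// Blend the per-vertex uv values with those weights, as the rasterizer
// would do for a varying when no perspective is involved
function interpolateUv(p, triangle) {
  const [a, b, c] = triangle.map((vertex) => vertex.position);
  const [w0, w1, w2] = barycentricWeights(p, a, b, c);
  const [uvA, uvB, uvC] = triangle.map((vertex) => vertex.uv);
  return {
    x: w0 * uvA.x + w1 * uvB.x + w2 * uvC.x,
    y: w0 * uvA.y + w1 * uvB.y + w2 * uvC.y,
  };
}

// Lower-right triangle of a quad that covers 100 x 100 pixels on screen
const lowerRightTriangle = [
  { position: { x: 0, y: 0 }, uv: { x: -1, y: -1 } },
  { position: { x: 100, y: 0 }, uv: { x: 1, y: -1 } },
  { position: { x: 100, y: 100 }, uv: { x: 1, y: 1 } },
];

console.log(interpolateUv({ x: 75, y: 25 }, lowerRightTriangle));
```

The point at pixel (75, 25) receives uv = (0.5, -0.5): three quarters of the way across the quad horizontally, one quarter vertically, remapped to the -1 to +1 range.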

By performing the check if (length(uv) < 1.0) we draw our color for fragments inside the circle and reject fragments outside of it. What we are doing is known as “Alpha testing”. Without diving too deeply and just to hint at what’s to come, what we have created with length(uv) is the signed distance field of a point.

Just to clarify, the circle isn't "drawn with geometry", which would have finite resolution of the shape, depending on how many vertices we use. It's "drawn by the shader".

SSAA #

SSAA stands for Super Sampling Anti-Aliasing. Render it bigger, downsample to be smaller. The idea is as old as 3D rendering itself. In fact, the first movies with CGI all relied on this with the most naive of implementations. One example is the 1986 movie “Flight of the Navigator”, as covered by Captain Disillusion in the video below.

Excerpt from "Flight of the Navigator | VFXcool"

YouTube Video by Captain Disillusion

1986 did it, so can we. Implemented in mere seconds. Easy, right?

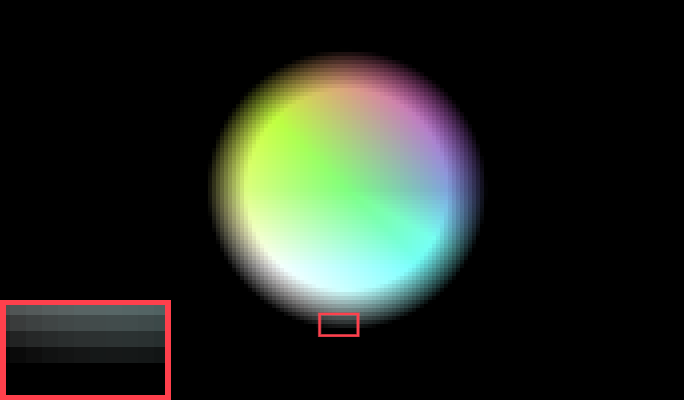

Screenshot, in case WebGL doesn't work

SSAA buffer Fragment Shader post.fs

    precision mediump float;
    uniform sampler2D u_texture;
    varying vec2 texCoord;

    void main()
    {
        gl_FragColor = texture2D(u_texture, texCoord);
    }

WebGL Javascript circleSSAA.js

function setupSSAA(canvasId, circleVtxSrc, circleFragSrc, postVtxSrc, postFragSrc, blitVtxSrc, blitFragSrc, redVtxSrc, redFragSrc, radioName) { /* Init */ const canvas = document.getElementById(canvasId); let frameTexture, circleDrawFramebuffer, frameTextureLinear; let buffersInitialized = false; let resDiv = 1; const gl = canvas.getContext('webgl', { preserveDrawingBuffer: false, antialias: false, alpha: true, premultipliedAlpha: true } ); /* Setup Possibilities */ let renderbuffer = null; let resolveFramebuffer = null; /* Render Resolution */ const radios = document.querySelectorAll(`input[name="${radioName}"]`); radios.forEach(radio => { /* Force set to 1 to fix a reload bug in Firefox Android */ if (radio.value === "1") radio.checked = true; radio.addEventListener('change', (event) => { resDiv = event.target.value; stopRendering(); startRendering(); }); }); /* Shaders */ /* Circle Shader */ const circleShd = compileAndLinkShader(gl, circleVtxSrc, circleFragSrc); const aspect_ratioLocation = gl.getUniformLocation(circleShd, "aspect_ratio"); const offsetLocationCircle = gl.getUniformLocation(circleShd, "offset"); const sizeLocationCircle = gl.getUniformLocation(circleShd, "size"); /* Blit Shader */ const blitShd = compileAndLinkShader(gl, blitVtxSrc, blitFragSrc); const transformLocation = gl.getUniformLocation(blitShd, "transform"); const offsetLocationPost = gl.getUniformLocation(blitShd, "offset"); /* Post Shader */ const postShd = compileAndLinkShader(gl, postVtxSrc, postFragSrc); /* Simple Red Box */ const redShd = compileAndLinkShader(gl, redVtxSrc, redFragSrc); const transformLocationRed = gl.getUniformLocation(redShd, "transform"); const offsetLocationRed = gl.getUniformLocation(redShd, "offset"); const aspect_ratioLocationRed = gl.getUniformLocation(redShd, "aspect_ratio"); const thicknessLocation = gl.getUniformLocation(redShd, "thickness"); const pixelsizeLocation = gl.getUniformLocation(redShd, "pixelsize"); const vertex_buffer = gl.createBuffer(); 
gl.bindBuffer(gl.ARRAY_BUFFER, vertex_buffer); gl.bufferData(gl.ARRAY_BUFFER, unitQuad, gl.STATIC_DRAW); gl.vertexAttribPointer(0, 2, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 0); gl.vertexAttribPointer(1, 3, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 2 * Float32Array.BYTES_PER_ELEMENT); gl.enableVertexAttribArray(0); gl.enableVertexAttribArray(1); setupTextureBuffers(); const circleOffsetAnim = new Float32Array([ 0.0, 0.0 ]); let aspect_ratio = 0; let last_time = 0; let redrawActive = false; gl.enable(gl.BLEND); function setupTextureBuffers() { gl.deleteFramebuffer(resolveFramebuffer); resolveFramebuffer = gl.createFramebuffer(); gl.bindFramebuffer(gl.FRAMEBUFFER, resolveFramebuffer); frameTexture = setupTexture(gl, canvas.width / resDiv, canvas.height / resDiv, frameTexture, gl.NEAREST); gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, frameTexture, 0); gl.deleteFramebuffer(circleDrawFramebuffer); circleDrawFramebuffer = gl.createFramebuffer(); gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); frameTextureLinear = setupTexture(gl, (canvas.width / resDiv) * 2, (canvas.height / resDiv) * 2, frameTextureLinear, gl.LINEAR); gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, frameTextureLinear, 0); buffersInitialized = true; } function redraw(time) { redrawActive = true; if (!buffersInitialized) { setupTextureBuffers(); } last_time = time; gl.viewport(0, 0, (canvas.width / resDiv) * 2, (canvas.height / resDiv) * 2); /* Setup PostProcess Framebuffer */ gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); gl.clear(gl.COLOR_BUFFER_BIT); gl.useProgram(circleShd); /* Draw Circle Animation */ gl.uniform1f(aspect_ratioLocation, aspect_ratio); var radius = 0.1; var speed = (time / 10000) % Math.PI * 2; circleOffsetAnim[0] = radius * Math.cos(speed) + 0.1; circleOffsetAnim[1] = radius * Math.sin(speed); gl.uniform2fv(offsetLocationCircle, circleOffsetAnim); 
gl.uniform1f(sizeLocationCircle, circleSize); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.viewport(0, 0, canvas.width / resDiv, canvas.height / resDiv); gl.useProgram(postShd); gl.blendFunc(gl.ONE, gl.ONE_MINUS_SRC_ALPHA); gl.bindTexture(gl.TEXTURE_2D, frameTextureLinear); gl.bindFramebuffer(gl.FRAMEBUFFER, resolveFramebuffer); gl.clear(gl.COLOR_BUFFER_BIT); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.useProgram(blitShd); gl.bindFramebuffer(gl.FRAMEBUFFER, null); gl.bindTexture(gl.TEXTURE_2D, frameTexture); gl.viewport(0, 0, canvas.width, canvas.height); /* Simple Passthrough */ gl.uniform4f(transformLocation, 1.0, 1.0, 0.0, 0.0); gl.uniform2f(offsetLocationPost, 0.0, 0.0); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Scaled image in the bottom left */ gl.uniform4f(transformLocation, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationPost, circleOffsetAnim); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Draw Red box for viewport illustration */ gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA); gl.useProgram(redShd); gl.uniform1f(aspect_ratioLocationRed, (1.0 / aspect_ratio) - 1.0); gl.uniform1f(thicknessLocation, 0.2); gl.uniform1f(pixelsizeLocation, (1.0 / canvas.width) * 50); gl.uniform4f(transformLocationRed, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationRed, circleOffsetAnim); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.uniform1f(thicknessLocation, 0.1); gl.uniform1f(pixelsizeLocation, 0.0); gl.uniform4f(transformLocationRed, 0.5, 0.5, 0.0, 0.0); gl.uniform2f(offsetLocationRed, -0.75, -0.75); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); redrawActive = false; } let isRendering = false; let animationFrameId; function onResize() { const dipRect = canvas.getBoundingClientRect(); const width = Math.round(devicePixelRatio * dipRect.right) - Math.round(devicePixelRatio * dipRect.left); const height = Math.round(devicePixelRatio * dipRect.bottom) - Math.round(devicePixelRatio * dipRect.top); if (canvas.width !== width || canvas.height !== height) { canvas.width = width; 
canvas.height = height; setupTextureBuffers(); aspect_ratio = 1.0 / (width / height); stopRendering(); startRendering(); } } window.addEventListener('resize', onResize, true); onResize(); function renderLoop(time) { if (isRendering) { redraw(time); animationFrameId = requestAnimationFrame(renderLoop); } } function startRendering() { /* Start rendering, when canvas visible */ isRendering = true; renderLoop(last_time); } function stopRendering() { /* Stop another redraw being called */ isRendering = false; cancelAnimationFrame(animationFrameId); while (redrawActive) { /* Spin on draw calls being processed. To simplify sync. In reality this code is block is never reached, but just in case, we have this here. */ } /* Force the rendering pipeline to sync with CPU before we mess with it */ gl.finish(); /* Delete the important buffer to free up memory */ gl.deleteTexture(frameTexture); gl.deleteFramebuffer(circleDrawFramebuffer); gl.deleteRenderbuffer(renderbuffer); gl.deleteFramebuffer(resolveFramebuffer); buffersInitialized = false; } function handleIntersection(entries) { entries.forEach(entry => { if (entry.isIntersecting) { if (!isRendering) startRendering(); } else { stopRendering(); } }); } /* Only render when the canvas is actually on screen */ let observer = new IntersectionObserver(handleIntersection); observer.observe(canvas); }

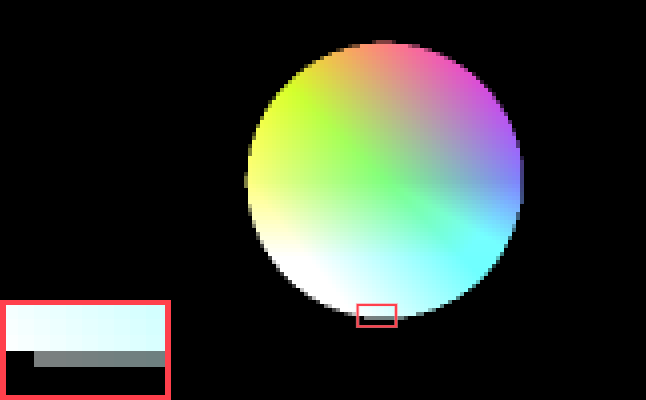

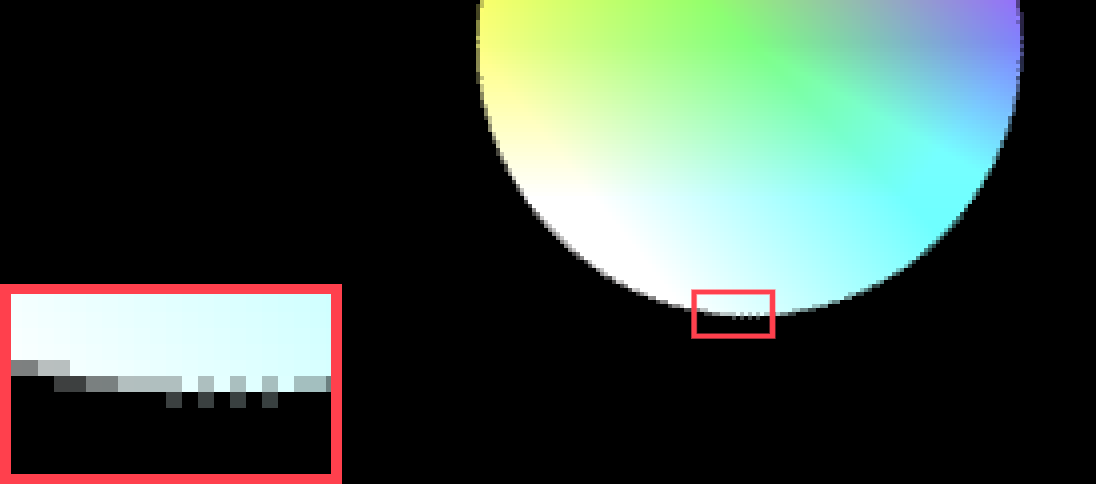

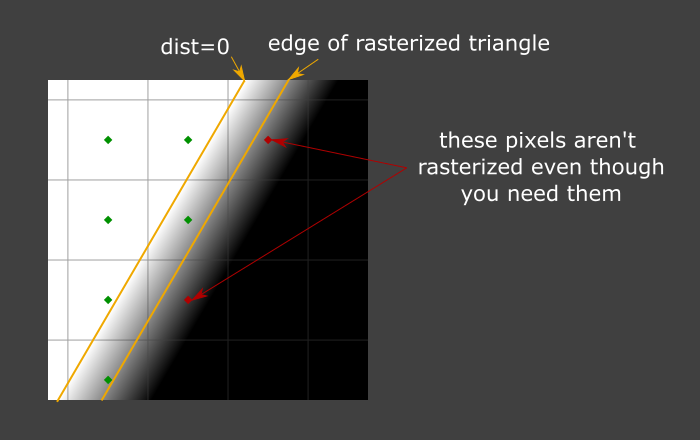

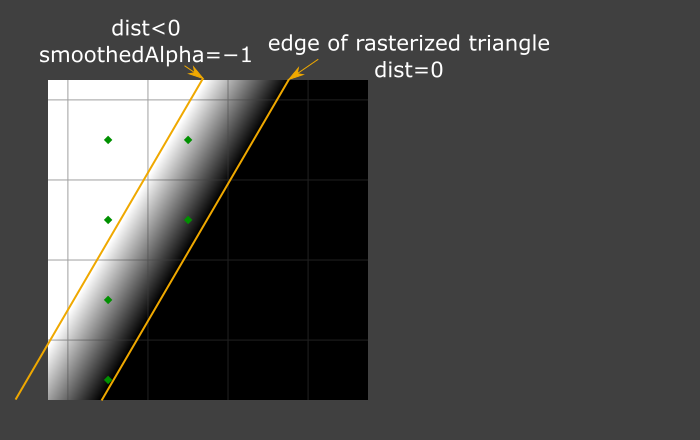

circleSSAA.js draws at twice the resolution to a texture, which fragment shader post.fs reads from at standard resolution with GL_LINEAR to perform SSAA. So we have four input pixels for every one output pixel we draw to the screen. But it’s somewhat strange: There is definitely Anti-Aliasing happening, but less than expected.

There should be 4 steps of transparency, but we only get two!

Especially at lower resolutions, we can see the circle does actually have 4 steps of transparency, but mainly at the 45° “diagonals” of the circle. A circle has of course no sides, but at the axis-aligned “bottom” there are only 2 steps of transparency: Fully Opaque and 50% transparent, the 25% and 75% transparency steps are missing.

Conceptually simple, actually hard #

We aren’t sampling against the circle shape at twice the resolution, we are sampling against the quantized result of the circle shape. Twice the resolution, but discrete pixels nonetheless. The combination of pixelation and sample placement doesn’t hold enough information where we need it the most: at the axis-aligned “flat parts”.
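The missing steps can be reproduced on the CPU. The following JavaScript sketch treats a short stretch of the circle's outline as a straight edge, sweeps it across one output pixel and collects every coverage value that averaging four binary samples can produce. The sample positions assume the ordered 2x2 grid that a single linear fetch at the pixel center averages. The names `ORDERED_GRID_2X2` and `coverageLevels` are made up for this illustration.

```javascript
// Sub-sample positions inside one output pixel (0..1), ordered 2x2 grid
const ORDERED_GRID_2X2 = [
  [0.25, 0.25], [0.75, 0.25],
  [0.25, 0.75], [0.75, 0.75],
];

// Sweep a straight edge across the pixel. The shape is the half-plane
// nx * x + ny * y < d, and each sample is a yes/no test against it,
// like the already pixelated 2x texture.
function coverageLevels(nx, ny, samples) {
  const levels = new Set();
  for (let i = -100; i <= 400; i++) {
    const d = i / 100;
    let inside = 0;
    for (const [x, y] of samples) {
      if (nx * x + ny * y < d) inside++;
    }
    levels.add(inside / samples.length);
  }
  return [...levels].sort((a, b) => a - b);
}

console.log("axis-aligned edge:", coverageLevels(0, 1, ORDERED_GRID_2X2));
console.log("45 degree edge:   ", coverageLevels(1, 1, ORDERED_GRID_2X2));
```

For the axis-aligned edge only 0, 0.5 and 1 ever show up, because both samples of a row flip at the same time. The 45° edge produces 0, 0.25, 0.75 and 1, which is where the 25% and 75% steps on the diagonals come from.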

Four times the memory and four times the calculation requirement, but only a half-assed result.

Implementing SSAA properly is a minute craft. Here we are drawing to a 2x resolution texture and down-sampling it with linear interpolation. So actually, this implementation needs 5x the amount of VRAM. A proper implementation samples the scene multiple times and combines the result without an intermediary buffer.
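The 5x figure is simple bookkeeping: one color buffer at twice the resolution per axis (4x the pixels) plus one buffer at native resolution for the down-sampled result. A small sketch of that arithmetic, assuming RGBA8 buffers and ignoring the canvas's own backbuffer; the function names are hypothetical:

```javascript
// Bytes for one RGBA8 color buffer (4 bytes per pixel)
function colorBufferBytes(width, height) {
  return width * height * 4;
}

// Memory of the SSAA setup described above, relative to a single
// native-resolution buffer: one supersampled buffer plus one resolve buffer
function ssaaMemoryFactor(width, height, scalePerAxis) {
  const native = colorBufferBytes(width, height);
  const supersampled = colorBufferBytes(width * scalePerAxis, height * scalePerAxis);
  return (supersampled + native) / native;
}

console.log(ssaaMemoryFactor(1920, 1080, 2)); // 5
```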

With our implementation, we can't even do more than 2xSSAA with one texture read, as linear interpolation happens only with 2x2 samples.
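To make the 2x2 limit concrete, here is a CPU sketch of a bilinear texture lookup in JavaScript. It follows the usual convention of texel centers sitting at `i + 0.5` and clamps at the border. `sampleBilinear` and the tiny single-channel textures are assumptions for illustration.

```javascript
// Bilinear lookup in a single-channel texture stored row by row.
// x and y are in texel units, with texel centers at i + 0.5.
function sampleBilinear(texels, width, height, x, y) {
  const clampIndex = (i, size) => Math.min(Math.max(i, 0), size - 1);
  const at = (ix, iy) =>
    texels[clampIndex(iy, height) * width + clampIndex(ix, width)];

  const fx = x - 0.5;
  const fy = y - 0.5;
  const x0 = Math.floor(fx);
  const y0 = Math.floor(fy);
  const tx = fx - x0;
  const ty = fy - y0;

  // Only these four neighbours ever contribute to the result
  const top = at(x0, y0) * (1 - tx) + at(x0 + 1, y0) * tx;
  const bottom = at(x0, y0 + 1) * (1 - tx) + at(x0 + 1, y0 + 1) * tx;
  return top * (1 - ty) + bottom * ty;
}

// 2x2 block with one lit texel: sampling the middle yields the true average
const block2x2 = [1, 0, 0, 0];
console.log(sampleBilinear(block2x2, 2, 2, 1, 1)); // 0.25

// 4x4 block with one lit corner texel: the true average is 1/16,
// but a single fetch in the middle only sees the inner 2x2 texels
const block4x4 = new Array(16).fill(0);
block4x4[0] = 1;
console.log(sampleBilinear(block4x4, 4, 4, 2, 2)); // 0
```

With a 4x4 block per output pixel, one fetch ignores 12 of the 16 texels, so higher SSAA factors need several texture reads or several down-sampling passes.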

To combat axis-alignment artifacts like with our circle above, we need to place our SSAA samples better. There are multiple ways to do so, all with pros and cons. To implement SSAA properly, we need deep integration with the rendering pipeline. For 3D primitives, this happens below API or engine, in the realm of vendors and drivers.
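One well-known way to place samples better is a rotated grid. The JavaScript sketch below compares an ordered 2x2 grid with a commonly cited 4x rotated grid pattern by counting how many distinct positions the samples occupy along each axis. An axis-aligned edge sweeping across the pixel can only change coverage when it crosses one of those positions. The exact offsets are an assumption; real hardware and drivers may use different ones.

```javascript
// Sample positions inside one pixel (0..1)
const ORDERED_GRID_4X = [
  [0.25, 0.25], [0.75, 0.25],
  [0.25, 0.75], [0.75, 0.75],
];
// Commonly cited rotated grid: pixel center plus (+-1/8, +-3/8) style offsets
const ROTATED_GRID_4X = [
  [0.375, 0.125], [0.875, 0.375],
  [0.125, 0.625], [0.625, 0.875],
];

// Number of non-zero coverage steps an edge perpendicular to `axis`
// (0 = x, 1 = y) can produce: one step per distinct sample position
function coverageStepsAlongAxis(samples, axis) {
  return new Set(samples.map((sample) => sample[axis])).size;
}

console.log("ordered grid:", coverageStepsAlongAxis(ORDERED_GRID_4X, 1));
console.log("rotated grid:", coverageStepsAlongAxis(ROTATED_GRID_4X, 1));
```

The ordered grid yields 2 steps along either axis, the rotated grid yields 4 with the same number of samples. The price is that the rotated grid is no longer something a plain bilinear fetch can average for us.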

In fact, some of the best implementations were discovered by vendors by accident, like SGSSAA. There are also ways in which SSAA can make your scene look worse. Depending on implementation, SSAA messes with mip-map calculations. As a result the mip-map lod-bias may need adjustment, as explained in the article above.
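As a rough illustration of such an adjustment, a widely used rule of thumb shifts mip selection by half a mip level per doubling of the sample count. The sketch below is an assumption based on that rule of thumb, not something taken from a specific driver or from the demos in this article. It only applies when mip levels are still chosen per output pixel while shading happens per sample; rendering to a genuinely larger texture, like our 2x demo does, already picks sharper mips on its own.

```javascript
// Rule-of-thumb LOD bias for supersampling with `samplesPerPixel` shaded
// samples per output pixel (assumption: mip selection is done per pixel).
// Each doubling of the per-axis sample density is worth one sharper mip level.
function ssaaLodBias(samplesPerPixel) {
  return -0.5 * Math.log2(samplesPerPixel);
}

for (const samples of [2, 4, 8]) {
  console.log(`${samples}x:`, ssaaLodBias(samples));
}
```

For 4 samples per pixel this gives a bias of -1, meaning textures are sampled one mip level sharper than without supersampling.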

WebXR UI package three-mesh-ui, a package mature enough to be used by Meta, uses shader-based rotated grid super sampling to achieve sharp text rendering in VR, as seen in the code.

MSAA #

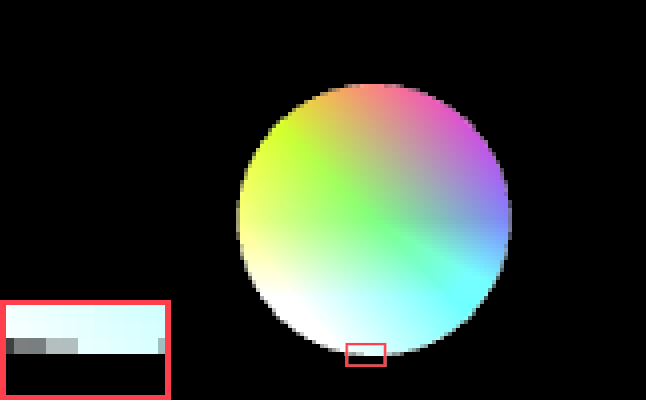

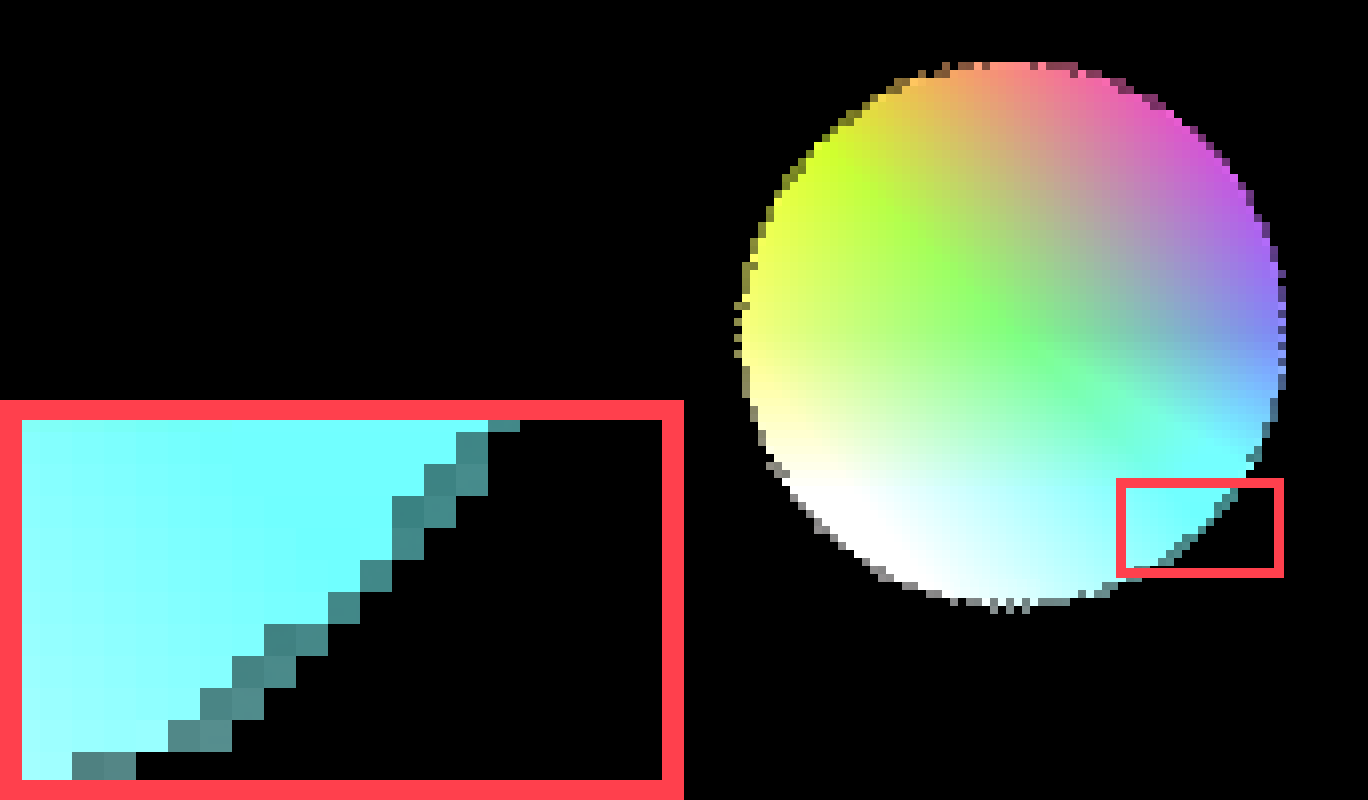

MSAA is super sampling, but only at the silhouette of models, overlapping geometry, and texture edges if “Alpha to Coverage” is enabled. MSAA is implemented by graphics vendors on the GPU in-hardware and what is supported depends on said hardware. In the select box below you can choose different MSAA levels for our circle.

There is up to MSAA x64, but what is available is implementation defined. WebGL 1 cannot create multisampled renderbuffers, which is why the next canvas initializes a WebGL 2 context. In WebGL, NVIDIA limits MSAA to 8x on Windows, even if more is supported, whilst on Linux no such limit is in place. On smartphones you will only get exactly 4x, as discussed below.
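Finding out what a given device offers boils down to querying `MAX_SAMPLES` on a WebGL 2 context. A minimal sketch, assuming that the interesting levels are the powers of two up to that limit; the helper name `availableMsaaLevels` is hypothetical:

```javascript
// List the power-of-two MSAA levels a WebGL 2 context reports as available.
// `gl` is expected to be a WebGL2RenderingContext.
function availableMsaaLevels(gl) {
  const maxSamples = gl.getParameter(gl.MAX_SAMPLES);
  const levels = [];
  for (let samples = 2; samples <= maxSamples; samples *= 2) {
    levels.push(samples);
  }
  return levels;
}

// Usage in a browser:
//   const gl = canvas.getContext("webgl2", { antialias: false });
//   console.log(availableMsaaLevels(gl));
```

The reported maximum is only an upper bound. As circleMSAA.js does, it is worth reading back `RENDERBUFFER_SAMPLES` after `renderbufferStorageMultisample()` to see which sample count the driver actually picked.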

MSAA 4x Screenshot, in case WebGL 2 doesn't work

WebGL Javascript circleMSAA.js

function setupMSAA(canvasId, circleVtxSrc, circleFragSrc, circleSimpleFragSrc, postVtxSrc, postFragSrc, blitVtxSrc, blitFragSrc, redVtxSrc, redFragSrc, radioName, radioSmoothSize) { /* Init */ const canvas = document.getElementById(canvasId); let frameTexture, circleDrawFramebuffer; let buffersInitialized = false; let resDiv = 1; let pixelSmoothSize = 1; const gl = canvas.getContext('webgl2', { preserveDrawingBuffer: false, antialias: false, alpha: true, premultipliedAlpha: true } ); /* Setup Possibilities */ let samples = 1; let renderbuffer = null; let resolveFramebuffer = null; const maxSamples = gl.getParameter(gl.MAX_SAMPLES); /* Enable the options in the MSAA dropdown based on maxSamples */ const msaaSelect = document.getElementById("MSAA"); for (let option of msaaSelect.options) { if (parseInt(option.value) <= maxSamples) { option.disabled = false; } } samples = parseInt(msaaSelect.value); /* Event listener for select dropdown */ msaaSelect.addEventListener('change', function () { /* Get new MSAA level and reset-init buffers */ samples = parseInt(msaaSelect.value); setupTextureBuffers(); }); /* Render Resolution */ const radios = document.querySelectorAll(`input[name="${radioName}"]`); radios.forEach(radio => { /* Force set to 1 to fix a reload bug in Firefox Android */ if (radio.value === "1") radio.checked = true; radio.addEventListener('change', (event) => { resDiv = event.target.value; stopRendering(); startRendering(); }); }); /* Smooth Size */ const radiosSmooth = document.querySelectorAll(`input[name="${radioSmoothSize}"]`); radiosSmooth.forEach(radio => { /* Force set to 1 to fix a reload bug in Firefox Android */ if (radio.value === "1") radio.checked = true; radio.addEventListener('change', (event) => { pixelSmoothSize = event.target.value; stopRendering(); startRendering(); }); }); /* Shaders */ /* Circle Shader */ const circleShd = compileAndLinkShader(gl, circleVtxSrc, circleFragSrc); const aspect_ratioLocation = gl.getUniformLocation(circleShd, 
"aspect_ratio"); const offsetLocationCircle = gl.getUniformLocation(circleShd, "offset"); const pixelSizeCircle = gl.getUniformLocation(circleShd, "pixelSize"); const sizeLocationCircle = gl.getUniformLocation(circleShd, "size"); const circleShd_step = compileAndLinkShader(gl, circleVtxSrc, circleSimpleFragSrc); const aspect_ratioLocation_step = gl.getUniformLocation(circleShd_step, "aspect_ratio"); const offsetLocationCircle_step = gl.getUniformLocation(circleShd_step, "offset"); const sizeLocationCircle_step = gl.getUniformLocation(circleShd_step, "size"); /* Blit Shader */ const blitShd = compileAndLinkShader(gl, blitVtxSrc, blitFragSrc); const transformLocation = gl.getUniformLocation(blitShd, "transform"); const offsetLocationPost = gl.getUniformLocation(blitShd, "offset"); /* Post Shader */ const postShd = compileAndLinkShader(gl, postVtxSrc, postFragSrc); /* Simple Red Box */ const redShd = compileAndLinkShader(gl, redVtxSrc, redFragSrc); const transformLocationRed = gl.getUniformLocation(redShd, "transform"); const offsetLocationRed = gl.getUniformLocation(redShd, "offset"); const aspect_ratioLocationRed = gl.getUniformLocation(redShd, "aspect_ratio"); const thicknessLocation = gl.getUniformLocation(redShd, "thickness"); const pixelsizeLocation = gl.getUniformLocation(redShd, "pixelsize"); const vertex_buffer = gl.createBuffer(); gl.bindBuffer(gl.ARRAY_BUFFER, vertex_buffer); gl.bufferData(gl.ARRAY_BUFFER, unitQuad, gl.STATIC_DRAW); gl.vertexAttribPointer(0, 2, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 0); gl.vertexAttribPointer(1, 3, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 2 * Float32Array.BYTES_PER_ELEMENT); gl.enableVertexAttribArray(0); gl.enableVertexAttribArray(1); setupTextureBuffers(); const circleOffsetAnim = new Float32Array([ 0.0, 0.0 ]); let aspect_ratio = 0; let last_time = 0; let redrawActive = false; let animationFrameId; function setupTextureBuffers() { gl.deleteFramebuffer(circleDrawFramebuffer) circleDrawFramebuffer 
= gl.createFramebuffer(); gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); gl.deleteRenderbuffer(renderbuffer); renderbuffer = gl.createRenderbuffer(); gl.bindRenderbuffer(gl.RENDERBUFFER, renderbuffer); const errorMessageElement = document.getElementById('sampleErrorMessage'); /* Here we need two branches because of implementation specific shenanigans. Mobile chips will always force any call to renderbufferStorageMultisample() to be 4x MSAA, so to have a noAA comparison, we split the Framebuffer setup */ if (samples != 1) { gl.renderbufferStorageMultisample(gl.RENDERBUFFER, samples, gl.RGBA8, canvas.width / resDiv, canvas.height / resDiv); gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.RENDERBUFFER, renderbuffer); const actualSamples = gl.getRenderbufferParameter( gl.RENDERBUFFER, gl.RENDERBUFFER_SAMPLES ); if (samples !== actualSamples) { errorMessageElement.style.display = 'block'; errorMessageElement.textContent = `⚠️ You chose MSAAx${samples}, but the graphics driver forced it to MSAAx${actualSamples}. 
You are probably on a mobile GPU, where this behavior is expected.`; } else { errorMessageElement.style.display = 'none'; } } else { errorMessageElement.style.display = 'none'; } gl.deleteFramebuffer(resolveFramebuffer); resolveFramebuffer = gl.createFramebuffer(); gl.bindFramebuffer(gl.DRAW_FRAMEBUFFER, resolveFramebuffer); frameTexture = setupTexture(gl, canvas.width / resDiv, canvas.height / resDiv, frameTexture, gl.NEAREST); gl.framebufferTexture2D(gl.DRAW_FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, frameTexture, 0); buffersInitialized = true; } function redraw(time) { redrawActive = true; if (!buffersInitialized) { setupTextureBuffers(); } last_time = time; gl.disable(gl.BLEND); gl.enable(gl.SAMPLE_ALPHA_TO_COVERAGE); /* Setup PostProcess Framebuffer */ if (samples == 1) gl.bindFramebuffer(gl.FRAMEBUFFER, resolveFramebuffer); else gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); gl.clear(gl.COLOR_BUFFER_BIT); if (samples == 1) gl.useProgram(circleShd_step); else gl.useProgram(circleShd); gl.viewport(0, 0, canvas.width / resDiv, canvas.height / resDiv); /* Draw Circle Animation */ var radius = 0.1; var speed = (time / 10000) % Math.PI * 2; circleOffsetAnim[0] = radius * Math.cos(speed) + 0.1; circleOffsetAnim[1] = radius * Math.sin(speed); if (samples == 1) { /* Here we need two branches because of implementation specific shenanigans. 
Mobile chips will always force any call to renderbufferStorageMultisample() to be 4x MSAA, so to have a noAA comparison, we split the demo across two shaders */ gl.uniform2fv(offsetLocationCircle_step, circleOffsetAnim); gl.uniform1f(aspect_ratioLocation_step, aspect_ratio); gl.uniform1f(sizeLocationCircle_step, circleSize); } else { gl.uniform2fv(offsetLocationCircle, circleOffsetAnim); gl.uniform1f(aspect_ratioLocation, aspect_ratio); gl.uniform1f(sizeLocationCircle, circleSize); gl.uniform1f(pixelSizeCircle, (2.0 / (canvas.height / resDiv)) * pixelSmoothSize); } gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.disable(gl.SAMPLE_ALPHA_TO_COVERAGE); gl.enable(gl.BLEND); gl.viewport(0, 0, canvas.width, canvas.height); if (samples !== 1) { gl.useProgram(postShd); gl.blendFunc(gl.ONE, gl.ONE_MINUS_SRC_ALPHA); /* Resolve the MSAA framebuffer to a regular texture */ gl.bindFramebuffer(gl.READ_FRAMEBUFFER, circleDrawFramebuffer); gl.bindFramebuffer(gl.DRAW_FRAMEBUFFER, resolveFramebuffer); gl.blitFramebuffer( 0, 0, canvas.width, canvas.height, 0, 0, canvas.width, canvas.height, gl.COLOR_BUFFER_BIT, gl.LINEAR ); } gl.useProgram(blitShd); gl.bindFramebuffer(gl.FRAMEBUFFER, null); gl.bindTexture(gl.TEXTURE_2D, frameTexture); /* Simple Passthrough */ gl.uniform4f(transformLocation, 1.0, 1.0, 0.0, 0.0); gl.uniform2f(offsetLocationPost, 0.0, 0.0); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Scaled image in the bottom left */ gl.uniform4f(transformLocation, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationPost, circleOffsetAnim); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Draw Red box for viewport illustration */ gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA); gl.useProgram(redShd); gl.uniform1f(aspect_ratioLocationRed, (1.0 / aspect_ratio) - 1.0); gl.uniform1f(thicknessLocation, 0.2); gl.uniform1f(pixelsizeLocation, (1.0 / canvas.width) * 50); gl.uniform4f(transformLocationRed, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationRed, circleOffsetAnim); 
gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.uniform1f(thicknessLocation, 0.1); gl.uniform1f(pixelsizeLocation, 0.0); gl.uniform4f(transformLocationRed, 0.5, 0.5, 0.0, 0.0); gl.uniform2f(offsetLocationRed, -0.75, -0.75); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); redrawActive = false; } function onResize() { const dipRect = canvas.getBoundingClientRect(); const width = Math.round(devicePixelRatio * dipRect.right) - Math.round(devicePixelRatio * dipRect.left); const height = Math.round(devicePixelRatio * dipRect.bottom) - Math.round(devicePixelRatio * dipRect.top); if (canvas.width !== width || canvas.height !== height) { canvas.width = width; canvas.height = height; setupTextureBuffers(); aspect_ratio = 1.0 / (width / height); } } window.addEventListener('resize', onResize, true); onResize(); let isRendering = false; function renderLoop(time) { if (isRendering) { redraw(time); animationFrameId = requestAnimationFrame(renderLoop); } } function startRendering() { /* Start rendering, when canvas visible */ isRendering = true; renderLoop(last_time); } function stopRendering() { /* Stop another redraw being called */ isRendering = false; cancelAnimationFrame(animationFrameId); while (redrawActive) { /* Spin on draw calls being processed. To simplify sync. In reality this code is block is never reached, but just in case, we have this here. */ } /* Force the rendering pipeline to sync with CPU before we mess with it */ gl.finish(); /* Delete the important buffer to free up memory */ gl.deleteTexture(frameTexture); gl.deleteFramebuffer(circleDrawFramebuffer); gl.deleteRenderbuffer(renderbuffer); gl.deleteFramebuffer(resolveFramebuffer); buffersInitialized = false; } function handleIntersection(entries) { entries.forEach(entry => { if (entry.isIntersecting) { if (!isRendering) startRendering(); } else { stopRendering(); } }); } /* Only render when the canvas is actually on screen */ let observer = new IntersectionObserver(handleIntersection); observer.observe(canvas); }

What is edge smoothing and how does MSAA even know what to sample against? For now we skip the shader code and implementation. First let's take a look at MSAA's pros and cons in general.

Implementation specific headaches #

We rely on hardware to do the Anti-Aliasing, which obviously leads to the problem that user hardware may not support what we need. The sampling patterns MSAA uses may also do things we don’t expect. Depending on what your hardware does, you may see the circle’s edge transparency steps appearing “in the wrong order”.
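To get a feel for where those transparency steps come from, here is a small CPU-side sketch. It assumes an idealized alpha-to-coverage model, where alpha simply decides how many of a pixel's samples get written. The demo does enable `gl.SAMPLE_ALPHA_TO_COVERAGE`, but the function names below are made up for illustration, and real GPUs choose their own sample masks and may dither, so the ordering you see on your device can differ.

```javascript
/* Idealized model, NOT what any specific GPU does:
   alpha decides how many of the pixel's samples receive the fragment. */
function coveredSamples(alpha, sampleCount) {
  const clamped = Math.min(Math.max(alpha, 0), 1);
  return Math.round(clamped * sampleCount);
}

/* After the MSAA resolve, the visible opacity is the covered fraction */
function resolvedAlpha(alpha, sampleCount) {
  return coveredSamples(alpha, sampleCount) / sampleCount;
}

/* Every distinct opacity level an edge can show at a given sample count */
function opacitySteps(sampleCount) {
  const steps = [];
  for (let i = 0; i <= sampleCount; i++) steps.push(i / sampleCount);
  return steps;
}
```

In this model, 4 samples allow five opacity levels in 25% increments, while 2 samples only allow 0%, 50% and 100%. A smooth edge gradient gets snapped to whichever level is nearest.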

When MSAA became required with the OpenGL 3 & DirectX 10 era of hardware, support was especially hit & miss. Even the latest Intel GMA iGPUs expose the OpenGL extension EXT_framebuffer_multisample, but don’t in fact support MSAA, which led to confusion. Even on more recent smartphones, support just wasn’t that clear-cut.
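Since what you request and what you get can differ, it may be worth asking the context what it actually allocated. The following is a minimal sketch for a WebGL 2 context; `createMsaaRenderbuffer` is a hypothetical helper and not part of the demo code. Whether a driver that silently upgrades the sample count also reports it honestly is not guaranteed, so treat the returned number as a hint.

```javascript
/* Hypothetical helper, assumes `gl` is a WebGL 2 rendering context */
function createMsaaRenderbuffer(gl, requestedSamples, width, height) {
  /* Never ask for more samples than the implementation advertises */
  const maxSamples = gl.getParameter(gl.MAX_SAMPLES);
  const samples = Math.min(requestedSamples, maxSamples);

  const renderbuffer = gl.createRenderbuffer();
  gl.bindRenderbuffer(gl.RENDERBUFFER, renderbuffer);
  gl.renderbufferStorageMultisample(gl.RENDERBUFFER, samples, gl.RGBA8, width, height);

  /* Read back the sample count the implementation reports for this buffer */
  const actualSamples = gl.getRenderbufferParameter(gl.RENDERBUFFER, gl.RENDERBUFFER_SAMPLES);

  return { renderbuffer, requestedSamples, actualSamples };
}
```

If `actualSamples` differs from `requestedSamples`, a demo can at least warn the user instead of silently showing a different MSAA level than the one selected.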

Mobile chips support exactly 4x MSAA and things are weird. Android will let you pick 2x, but the driver will force 4x anyway. iPhones & iPads do something rather stupid: choosing 2x will make it 4x, but transparency will be rounded to the nearest 50% multiple, leading to double edges in our example. There is a hardware-specific reason:

Performance cost: (maybe) Zero #

Looking at modern video games, one might believe that MSAA is a thing of the past. It usually brings a hefty performance penalty after all. Surprisingly, it’s still the king under certain circumstances and, in very specific situations, even performance-free.

As a gamer, this goes against instinct...

Excerpt from "Developing High Performance Games for Different Mobile VR Platforms"

GDC 2017 talk by Rahul Prasad

Rahul Prasad: Use MSAA […] It’s actually not as expensive on mobile as it is on desktop, it’s one of the nice things you get on mobile. […] On some (mobile) GPUs 4x (MSAA) is free, so use it when you have it.

As explained by Rahul Prasad in the above talk, in VR 4xMSAA is a must and may come free on certain mobile GPUs. The specific reason would derail the blog post, but in case you want to go down that particular rabbit hole, here is Epic Games’ Niklas Smedberg giving a run-down.

Excerpt from "Next-Generation AAA Mobile Rendering"

GDC 2014 talk by Niklas Smedberg and Timothy Lottes

In short, this is possible under the condition of forward rendering with geometry that is not too dense and the GPU having a tile-based rendering architecture, which allows the GPU to perform MSAA calculations without heavy memory access and thus hide the cost of the calculation through latency hiding. Here’s a deep-dive, if you are interested.

A complex toolbox #

MSAA gives you access to the samples, making custom MSAA filtering curves a possibility. It also allows you to merge both standard mesh-based and signed-distance-field rendering via alpha to coverage. This complex feature set made possible the most out-of-the-box thinking I ever witnessed in graphics programming:

Assassin’s Creed Unity used MSAA to render at half resolution and reconstruct only some buffers to full-res from MSAA samples, as described on page 48 of the talk “GPU-Driven Rendering Pipelines” by Ulrich Haar and Sebastian Aaltonen. Kinda like variable rate shading, but implemented with duct-tape and without vendor support.

The brain-melting lengths to which graphics programmers go to utilize hardware acceleration to the last drop sometimes leave me in awe.

Post-Process Anti-Aliasing #

In 2009 a paper by Alexander Reshetov struck the graphics programming world like a ton of bricks: take the blocky, aliased result of the rendered image, find edges and classify the pixels into tetris-like shapes with per-shape filtering rules and remove the blocky edge. Anti-Aliasing based on the morphology of pixels - MLAA was born.
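To make the "find edges" part a bit more tangible, here is a rough sketch of just that first step, run on the CPU. It assumes a grayscale image stored as a 2D array and a hand-picked `threshold`; both are assumptions for illustration. The shape classification and per-shape blending that make MLAA what it is are not shown here.

```javascript
/* luma: 2D array [row][column] of brightness values in the range 0..1.
   Returns, per pixel, whether there is a discontinuity towards the
   left and towards the top neighbor. Only the edge-finding step,
   not a full MLAA implementation. */
function findEdges(luma, threshold) {
  return luma.map((row, y) =>
    row.map((value, x) => ({
      left: x > 0 && Math.abs(value - row[x - 1]) > threshold,
      top: y > 0 && Math.abs(value - luma[y - 1][x]) > threshold
    }))
  );
}
```

The resulting edge flags form the staircase patterns that a morphological approach then sorts into shapes.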

Computationally cheap, easy to implement. Later it was refined with more emphasis on removing sub-pixel artifacts to become SMAA. It became a fan favorite, with an injector being developed early on to put SMAA into games that didn’t support it. Some considered these too blurry, and the saying “vaseline on the screen” was coined.

It was the future, a sign of things to come. No more shaky hardware support. Just like Fixed-Function pipelines died in favor of programmable shaders, Anti-Aliasing too became "shader based".

FXAA #

We’ll take a close look at an algorithm that was inspired by MLAA, developed by Timothy Lottes. “Fast approximate anti-aliasing”, FXAA. In fact, when it came into wide circulation, it received some incredible press. Among others, Jeff Atwood pulled neither bold fonts nor punches in his 2011 blog post, later republished by Kotaku.

Jeff Atwood: The FXAA method is so good, in fact, it makes all other forms of full-screen anti-aliasing pretty much obsolete overnight. If you have an FXAA option in your game, you should enable it immediately and ignore any other AA options.

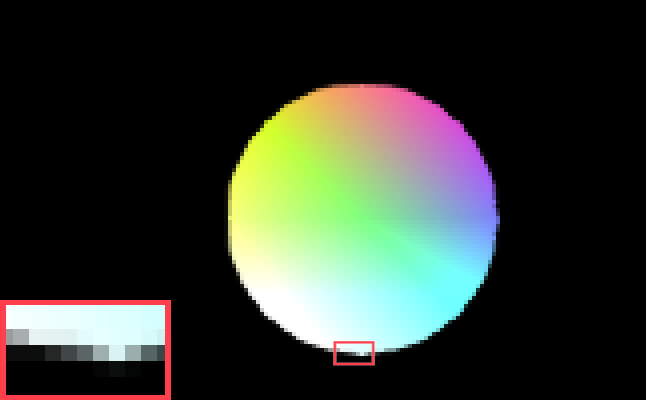

Let’s see what the hype was about. The final version publicly released was FXAA 3.11 on August 12th 2011 and the following demos are based on this. First, let’s take a look at our circle with FXAA doing the Anti-Aliasing at default settings.

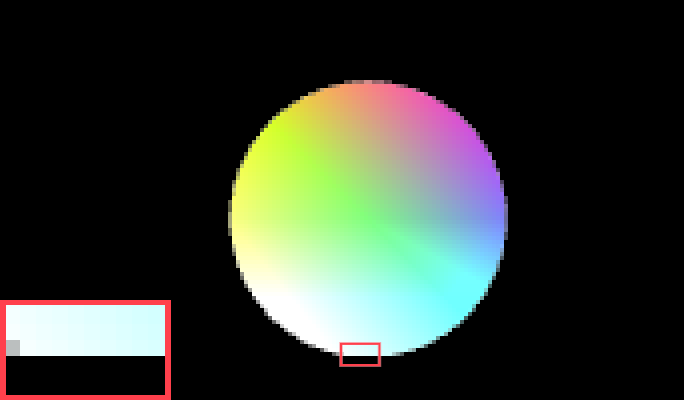

Screenshot, in case WebGL doesn't work

WebGL FXAA Shader post-FXAA.fs

precision mediump float; uniform sampler2D u_texture; varying vec2 texCoord; uniform vec2 RcpFrame; /* FXAA 3.11 code, after passing through the preprocessor with settings: - FXAA PC QUALITY - FXAA_PC 1 - Default QUALITY - FXAA_QUALITY_PRESET 12 - Optimizations disabled for WebGL 1 - FXAA_GLSL_120 1 - FXAA_FAST_PIXEL_OFFSET 0 - Further optimizations possible with WebGL 2 or by enabling extension GL_EXT_shader_texture_lod - GREEN_AS_LUMA is disabled - FXAA_GREEN_AS_LUMA 0 - Input must be RGBL */ float FxaaLuma(vec4 rgba) { return rgba.w; } vec4 FxaaPixelShader( vec2 pos, sampler2D tex, vec2 fxaaQualityRcpFrame, float fxaaQualitySubpix, float fxaaQualityEdgeThreshold, float fxaaQualityEdgeThresholdMin) { vec2 posM; posM.x = pos.x; posM.y = pos.y; vec4 rgbyM = texture2D(tex, posM); float lumaS = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(0, 1)) * fxaaQualityRcpFrame.xy))); float lumaE = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(1, 0)) * fxaaQualityRcpFrame.xy))); float lumaN = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(0, -1)) * fxaaQualityRcpFrame.xy))); float lumaW = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(-1, 0)) * fxaaQualityRcpFrame.xy))); float maxSM = max(lumaS, rgbyM.w); float minSM = min(lumaS, rgbyM.w); float maxESM = max(lumaE, maxSM); float minESM = min(lumaE, minSM); float maxWN = max(lumaN, lumaW); float minWN = min(lumaN, lumaW); float rangeMax = max(maxWN, maxESM); float rangeMin = min(minWN, minESM); float rangeMaxScaled = rangeMax * fxaaQualityEdgeThreshold; float range = rangeMax - rangeMin; float rangeMaxClamped = max(fxaaQualityEdgeThresholdMin, rangeMaxScaled); bool earlyExit = range < rangeMaxClamped; if (earlyExit) return rgbyM; float lumaNW = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(-1, -1)) * fxaaQualityRcpFrame.xy))); float lumaSE = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(1, 1)) * fxaaQualityRcpFrame.xy))); float lumaNE = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(1, -1)) * fxaaQualityRcpFrame.xy))); float lumaSW = 
FxaaLuma(texture2D(tex, posM + (vec2(ivec2(-1, 1)) * fxaaQualityRcpFrame.xy))); float lumaNS = lumaN + lumaS; float lumaWE = lumaW + lumaE; float subpixRcpRange = 1.0 / range; float subpixNSWE = lumaNS + lumaWE; float edgeHorz1 = (-2.0 * rgbyM.w) + lumaNS; float edgeVert1 = (-2.0 * rgbyM.w) + lumaWE; float lumaNESE = lumaNE + lumaSE; float lumaNWNE = lumaNW + lumaNE; float edgeHorz2 = (-2.0 * lumaE) + lumaNESE; float edgeVert2 = (-2.0 * lumaN) + lumaNWNE; float lumaNWSW = lumaNW + lumaSW; float lumaSWSE = lumaSW + lumaSE; float edgeHorz4 = (abs(edgeHorz1) * 2.0) + abs(edgeHorz2); float edgeVert4 = (abs(edgeVert1) * 2.0) + abs(edgeVert2); float edgeHorz3 = (-2.0 * lumaW) + lumaNWSW; float edgeVert3 = (-2.0 * lumaS) + lumaSWSE; float edgeHorz = abs(edgeHorz3) + edgeHorz4; float edgeVert = abs(edgeVert3) + edgeVert4; float subpixNWSWNESE = lumaNWSW + lumaNESE; float lengthSign = fxaaQualityRcpFrame.x; bool horzSpan = edgeHorz >= edgeVert; float subpixA = subpixNSWE * 2.0 + subpixNWSWNESE; if (!horzSpan) lumaN = lumaW; if (!horzSpan) lumaS = lumaE; if (horzSpan) lengthSign = fxaaQualityRcpFrame.y; float subpixB = (subpixA * (1.0 / 12.0)) - rgbyM.w; float gradientN = lumaN - rgbyM.w; float gradientS = lumaS - rgbyM.w; float lumaNN = lumaN + rgbyM.w; float lumaSS = lumaS + rgbyM.w; bool pairN = abs(gradientN) >= abs(gradientS); float gradient = max(abs(gradientN), abs(gradientS)); if (pairN) lengthSign = -lengthSign; float subpixC = clamp(abs(subpixB) * subpixRcpRange, 0.0, 1.0); vec2 posB; posB.x = posM.x; posB.y = posM.y; vec2 offNP; offNP.x = (!horzSpan) ? 0.0 : fxaaQualityRcpFrame.x; offNP.y = (horzSpan) ? 
0.0 : fxaaQualityRcpFrame.y; if (!horzSpan) posB.x += lengthSign * 0.5; if (horzSpan) posB.y += lengthSign * 0.5; vec2 posN; posN.x = posB.x - offNP.x * 1.0; posN.y = posB.y - offNP.y * 1.0; vec2 posP; posP.x = posB.x + offNP.x * 1.0; posP.y = posB.y + offNP.y * 1.0; float subpixD = ((-2.0) * subpixC) + 3.0; float lumaEndN = FxaaLuma(texture2D(tex, posN)); float subpixE = subpixC * subpixC; float lumaEndP = FxaaLuma(texture2D(tex, posP)); if (!pairN) lumaNN = lumaSS; float gradientScaled = gradient * 1.0 / 4.0; float lumaMM = rgbyM.w - lumaNN * 0.5; float subpixF = subpixD * subpixE; bool lumaMLTZero = lumaMM < 0.0; lumaEndN -= lumaNN * 0.5; lumaEndP -= lumaNN * 0.5; bool doneN = abs(lumaEndN) >= gradientScaled; bool doneP = abs(lumaEndP) >= gradientScaled; if (!doneN) posN.x -= offNP.x * 1.5; if (!doneN) posN.y -= offNP.y * 1.5; bool doneNP = (!doneN) || (!doneP); if (!doneP) posP.x += offNP.x * 1.5; if (!doneP) posP.y += offNP.y * 1.5; if (doneNP) { if (!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy)); if (!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy)); if (!doneN) lumaEndN = lumaEndN - lumaNN * 0.5; if (!doneP) lumaEndP = lumaEndP - lumaNN * 0.5; doneN = abs(lumaEndN) >= gradientScaled; doneP = abs(lumaEndP) >= gradientScaled; if (!doneN) posN.x -= offNP.x * 2.0; if (!doneN) posN.y -= offNP.y * 2.0; doneNP = (!doneN) || (!doneP); if (!doneP) posP.x += offNP.x * 2.0; if (!doneP) posP.y += offNP.y * 2.0; if (doneNP) { if (!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy)); if (!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy)); if (!doneN) lumaEndN = lumaEndN - lumaNN * 0.5; if (!doneP) lumaEndP = lumaEndP - lumaNN * 0.5; doneN = abs(lumaEndN) >= gradientScaled; doneP = abs(lumaEndP) >= gradientScaled; if (!doneN) posN.x -= offNP.x * 4.0; if (!doneN) posN.y -= offNP.y * 4.0; doneNP = (!doneN) || (!doneP); if (!doneP) posP.x += offNP.x * 4.0; if (!doneP) posP.y += offNP.y * 4.0; if (doneNP) { if (!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy)); 
if (!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy)); if (!doneN) lumaEndN = lumaEndN - lumaNN * 0.5; if (!doneP) lumaEndP = lumaEndP - lumaNN * 0.5; doneN = abs(lumaEndN) >= gradientScaled; doneP = abs(lumaEndP) >= gradientScaled; if (!doneN) posN.x -= offNP.x * 12.0; if (!doneN) posN.y -= offNP.y * 12.0; doneNP = (!doneN) || (!doneP); if (!doneP) posP.x += offNP.x * 12.0; if (!doneP) posP.y += offNP.y * 12.0; } } } float dstN = posM.x - posN.x; float dstP = posP.x - posM.x; if (!horzSpan) dstN = posM.y - posN.y; if (!horzSpan) dstP = posP.y - posM.y; bool goodSpanN = (lumaEndN < 0.0) != lumaMLTZero; float spanLength = (dstP + dstN); bool goodSpanP = (lumaEndP < 0.0) != lumaMLTZero; float spanLengthRcp = 1.0 / spanLength; bool directionN = dstN < dstP; float dst = min(dstN, dstP); bool goodSpan = directionN ? goodSpanN : goodSpanP; float subpixG = subpixF * subpixF; float pixelOffset = (dst * (-spanLengthRcp)) + 0.5; float subpixH = subpixG * fxaaQualitySubpix; float pixelOffsetGood = goodSpan ? pixelOffset : 0.0; float pixelOffsetSubpix = max(pixelOffsetGood, subpixH); if (!horzSpan) posM.x += pixelOffsetSubpix * lengthSign; if (horzSpan) posM.y += pixelOffsetSubpix * lengthSign; return vec4(texture2D(tex, posM).xyz, rgbyM.w); } void main() { gl_FragColor = FxaaPixelShader( texCoord, u_texture, RcpFrame, 0.75, 0.166, 0.0833); }WebGL Javascript circleFXAA.js

function setupFXAA(canvasId, circleVtxSrc, circleFragSrc, postVtxSrc, postFragSrc, blitVtxSrc, blitFragSrc, redVtxSrc, redFragSrc, radioName) { /* Init */ const canvas = document.getElementById(canvasId); let frameTexture, circleDrawFramebuffer, frameTextureLinear; let buffersInitialized = false; let resDiv = 1; const gl = canvas.getContext('webgl', { preserveDrawingBuffer: false, antialias: false, alpha: true, premultipliedAlpha: true } ); /* Setup Possibilities */ let samples = 1; let renderbuffer = null; let resolveFramebuffer = null; /* Render Resolution */ const radios = document.querySelectorAll(`input[name="${radioName}"]`); radios.forEach(radio => { /* Force set to 1 to fix a reload bug in Firefox Android */ if (radio.value === "1") radio.checked = true; radio.addEventListener('change', (event) => { resDiv = event.target.value; stopRendering(); startRendering(); }); }); /* Shaders */ /* Circle Shader */ const circleShd = compileAndLinkShader(gl, circleVtxSrc, circleFragSrc); const aspect_ratioLocation = gl.getUniformLocation(circleShd, "aspect_ratio"); const offsetLocationCircle = gl.getUniformLocation(circleShd, "offset"); const sizeLocationCircle = gl.getUniformLocation(circleShd, "size"); /* Blit Shader */ const blitShd = compileAndLinkShader(gl, blitVtxSrc, blitFragSrc); const transformLocation = gl.getUniformLocation(blitShd, "transform"); const offsetLocationPost = gl.getUniformLocation(blitShd, "offset"); /* Post Shader */ const postShd = compileAndLinkShader(gl, postVtxSrc, postFragSrc); const rcpFrameLocation = gl.getUniformLocation(postShd, "RcpFrame"); /* Simple Red Box */ const redShd = compileAndLinkShader(gl, redVtxSrc, redFragSrc); const transformLocationRed = gl.getUniformLocation(redShd, "transform"); const offsetLocationRed = gl.getUniformLocation(redShd, "offset"); const aspect_ratioLocationRed = gl.getUniformLocation(redShd, "aspect_ratio"); const thicknessLocation = gl.getUniformLocation(redShd, "thickness"); const pixelsizeLocation = 
gl.getUniformLocation(redShd, "pixelsize"); const vertex_buffer = gl.createBuffer(); gl.bindBuffer(gl.ARRAY_BUFFER, vertex_buffer); gl.bufferData(gl.ARRAY_BUFFER, unitQuad, gl.STATIC_DRAW); gl.vertexAttribPointer(0, 2, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 0); gl.vertexAttribPointer(1, 3, gl.FLOAT, false, 5 * Float32Array.BYTES_PER_ELEMENT, 2 * Float32Array.BYTES_PER_ELEMENT); gl.enableVertexAttribArray(0); gl.enableVertexAttribArray(1); setupTextureBuffers(); const circleOffsetAnim = new Float32Array([ 0.0, 0.0 ]); let aspect_ratio = 0; let last_time = 0; let redrawActive = false; let animationFrameId; gl.enable(gl.BLEND); function setupTextureBuffers() { gl.deleteFramebuffer(resolveFramebuffer); resolveFramebuffer = gl.createFramebuffer(); gl.bindFramebuffer(gl.FRAMEBUFFER, resolveFramebuffer); frameTexture = setupTexture(gl, canvas.width / resDiv, canvas.height / resDiv, frameTexture, gl.NEAREST); gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, frameTexture, 0); gl.deleteFramebuffer(circleDrawFramebuffer); circleDrawFramebuffer = gl.createFramebuffer(); gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); frameTextureLinear = setupTexture(gl, canvas.width / resDiv, canvas.height / resDiv, frameTextureLinear, gl.LINEAR); gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, frameTextureLinear, 0); buffersInitialized = true; } function redraw(time) { redrawActive = true; if (!buffersInitialized) { setupTextureBuffers(); } last_time = time; gl.viewport(0, 0, canvas.width / resDiv, canvas.height / resDiv); /* Setup PostProcess Framebuffer */ gl.bindFramebuffer(gl.FRAMEBUFFER, circleDrawFramebuffer); gl.clear(gl.COLOR_BUFFER_BIT); gl.useProgram(circleShd); /* Draw Circle Animation */ gl.uniform1f(aspect_ratioLocation, aspect_ratio); var radius = 0.1; var speed = (time / 10000) % Math.PI * 2; circleOffsetAnim[0] = radius * Math.cos(speed) + 0.1; circleOffsetAnim[1] = radius * Math.sin(speed); 
gl.uniform2fv(offsetLocationCircle, circleOffsetAnim); gl.uniform1f(sizeLocationCircle, circleSize); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.useProgram(postShd); gl.uniform2f(rcpFrameLocation, 1.0 / (canvas.width / resDiv), 1.0 / (canvas.height / resDiv)); gl.disable(gl.SAMPLE_ALPHA_TO_COVERAGE); gl.blendFunc(gl.ONE, gl.ONE_MINUS_SRC_ALPHA); gl.bindTexture(gl.TEXTURE_2D, frameTextureLinear); gl.bindFramebuffer(gl.FRAMEBUFFER, resolveFramebuffer); gl.clear(gl.COLOR_BUFFER_BIT); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.viewport(0, 0, canvas.width, canvas.height); gl.useProgram(blitShd); gl.bindFramebuffer(gl.FRAMEBUFFER, null); gl.bindTexture(gl.TEXTURE_2D, frameTexture); /* Simple Passthrough */ gl.uniform4f(transformLocation, 1.0, 1.0, 0.0, 0.0); gl.uniform2f(offsetLocationPost, 0.0, 0.0); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Scaled image in the bottom left */ gl.uniform4f(transformLocation, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationPost, circleOffsetAnim); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); /* Draw Red box for viewport illustration */ gl.blendFunc(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA); gl.useProgram(redShd); gl.uniform1f(aspect_ratioLocationRed, (1.0 / aspect_ratio) - 1.0); gl.uniform1f(thicknessLocation, 0.2); gl.uniform1f(pixelsizeLocation, (1.0 / canvas.width) * 50); gl.uniform4f(transformLocationRed, 0.25, 0.25, -0.75, -0.75); gl.uniform2fv(offsetLocationRed, circleOffsetAnim); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); gl.uniform1f(thicknessLocation, 0.1); gl.uniform1f(pixelsizeLocation, 0.0); gl.uniform4f(transformLocationRed, 0.5, 0.5, 0.0, 0.0); gl.uniform2f(offsetLocationRed, -0.75, -0.75); gl.drawArrays(gl.TRIANGLE_FAN, 0, 4); redrawActive = false; } function onResize() { const dipRect = canvas.getBoundingClientRect(); const width = Math.round(devicePixelRatio * dipRect.right) - Math.round(devicePixelRatio * dipRect.left); const height = Math.round(devicePixelRatio * dipRect.bottom) - Math.round(devicePixelRatio * dipRect.top); if 
(canvas.width !== width || canvas.height !== height) { canvas.width = width; canvas.height = height; setupTextureBuffers(); aspect_ratio = 1.0 / (width / height); } } window.addEventListener('resize', onResize, true); onResize(); let isRendering = false; function renderLoop(time) { if (isRendering) { redraw(time); animationFrameId = requestAnimationFrame(renderLoop); } } function startRendering() { /* Start rendering, when canvas visible */ isRendering = true; renderLoop(last_time); } function stopRendering() { /* Stop another redraw being called */ isRendering = false; cancelAnimationFrame(animationFrameId); while (redrawActive) { /* Spin on draw calls being processed. To simplify sync. In reality this code is block is never reached, but just in case, we have this here. */ } /* Force the rendering pipeline to sync with CPU before we mess with it */ gl.finish(); /* Delete the important buffer to free up memory */ gl.deleteTexture(frameTexture); gl.deleteFramebuffer(circleDrawFramebuffer); gl.deleteRenderbuffer(renderbuffer); gl.deleteFramebuffer(resolveFramebuffer); buffersInitialized = false; } function handleIntersection(entries) { entries.forEach(entry => { if (entry.isIntersecting) { if (!isRendering) startRendering(); } else { stopRendering(); } }); } /* Only render when the canvas is actually on screen */ let observer = new IntersectionObserver(handleIntersection); observer.observe(canvas); }

A bit of a weird result. It would look good if the circle didn’t move: perfectly smooth edges. But the circle distorts as it moves. The axis-aligned top and bottom especially have a little nub that appears and disappears. And at lower resolutions, the circle even loses its round shape, wobbling like PlayStation 1 graphics.

Per-pixel, FXAA considers only the 3x3 neighborhood, so it can’t possibly know that this area is part of a big shape. But it also doesn’t just “blur edges”, as often said. As explained in the official whitepaper, it finds the edge’s direction and shifts the pixel’s coordinates to let the performance-free bilinear filtering do the blending.
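The first half of that process can be replayed on the CPU. The following sketch ports the contrast check and the edge direction test from the shader listing above to plain JavaScript, leaving out the edge-end search entirely. The function names and the 3x3 array layout are assumptions made for this illustration; the default thresholds are the ones passed in the shader's `main()`.

```javascript
/* n is a 3x3 luma neighborhood, rows from top to bottom:
   [[NW, N, NE], [W, M, E], [SW, S, SE]] */
function fxaaAnalyze(n, edgeThreshold = 0.166, edgeThresholdMin = 0.0833) {
  const [[NW, N, NE], [W, M, E], [SW, S, SE]] = n;

  /* Local contrast of the center pixel and its 4 direct neighbors */
  const rangeMax = Math.max(N, S, W, E, M);
  const rangeMin = Math.min(N, S, W, E, M);
  const range = rangeMax - rangeMin;

  /* Early exit: not enough contrast, pixel is left untouched */
  if (range < Math.max(edgeThresholdMin, rangeMax * edgeThreshold)) {
    return { isEdge: false };
  }

  /* Second derivatives across rows and columns, center weighted double */
  const edgeHorz = Math.abs(-2 * W + NW + SW)
                 + Math.abs(-2 * M + N + S) * 2
                 + Math.abs(-2 * E + NE + SE);
  const edgeVert = Math.abs(-2 * S + SW + SE)
                 + Math.abs(-2 * M + W + E) * 2
                 + Math.abs(-2 * N + NW + NE);

  return { isEdge: true, horizontal: edgeHorz >= edgeVert };
}

/* What a bilinear texture fetch returns when the sample position is
   moved `offset` pixels (0..1) from the center towards a neighbor */
function shiftedSample(center, neighbor, offset) {
  return center * (1 - offset) + neighbor * offset;
}
```

`shiftedSample()` is the whole blending trick: FXAA never mixes colors itself, it only decides how far to nudge the texture coordinate, and the texture unit's linear filtering produces the blend.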

For our demo here, wrong tool for the job. Really, we didn’t do FXAA justice with our example. FXAA was created for another use case and has many settings and presets. It was created to anti-alias more complex scenes. Let’s give it a fair shot!

FXAA full demo #

A scene from my favorite piece of software in existence: NeoTokyo°. I created a bright area light in an NT° map and moved a bench to create an area of strong aliasing. The following demo uses the aliased output from NeoTokyo°, calculates the required luminance channel and applies FXAA. All FXAA presets and settings at your fingertips.
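The shader expects its input as RGBL, meaning the luminance sits in the alpha channel. How the demo computes that channel is not shown here, so the following is only a sketch of one common way to do it, assuming 8-bit gamma-encoded pixels laid out like `ImageData.data` and the widely used Rec. 601 luma weights. `writeLumaToAlpha` is a made-up name.

```javascript
/* rgba: flat array of 8-bit values in R, G, B, A order, like ImageData.data.
   Returns a copy with alpha replaced by luma. The Rec. 601 weights are an
   assumption, the demo's actual conversion may differ. */
function writeLumaToAlpha(rgba) {
  const out = new Uint8ClampedArray(rgba.length);
  for (let i = 0; i < rgba.length; i += 4) {
    const r = rgba[i];
    const g = rgba[i + 1];
    const b = rgba[i + 2];
    out[i] = r;
    out[i + 1] = g;
    out[i + 2] = b;
    /* Uint8ClampedArray rounds and clamps the result to 0..255 */
    out[i + 3] = 0.299 * r + 0.587 * g + 0.114 * b;
  }
  return out;
}
```

Green contributes most to the result, which is also why FXAA offers the `FXAA_GREEN_AS_LUMA` shortcut seen in the shader source.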

This has a fixed resolution and will only be at your device's native resolution if your device has no DPI scaling and the browser is at 100% zoom.

Demo controls: FXAA_QUALITY_PRESET, fxaaQualitySubpix, fxaaQualityEdgeThreshold, fxaaQualityEdgeThresholdMin

Screenshot, in case WebGL doesn't work

WebGL Vertex Shader FXAA-interactive.vs

/* Our Vertex data for the Quad */ attribute vec2 vtx; varying vec2 uv; void main() { /* FXAA expects flipped, DirectX style UV coordinates */ uv = vtx * vec2(0.5, -0.5) + 0.5; gl_Position = vec4(vtx, 0.0, 1.0); }

WebGL Fragment Shader FXAA-interactive.fs

precision highp float; varying vec2 uv; uniform sampler2D texture; uniform vec2 RcpFrame; uniform float u_fxaaQualitySubpix; uniform float u_fxaaQualityEdgeThreshold; uniform float u_fxaaQualityEdgeThresholdMin; /*============================================================================ FXAA QUALITY - PRESETS ============================================================================*/ /*============================================================================ FXAA QUALITY - MEDIUM DITHER PRESETS ============================================================================*/ #if (FXAA_QUALITY_PRESET == 10) #define FXAA_QUALITY_PS 3 #define FXAA_QUALITY_P0 1.5 #define FXAA_QUALITY_P1 3.0 #define FXAA_QUALITY_P2 12.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 11) #define FXAA_QUALITY_PS 4 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 3.0 #define FXAA_QUALITY_P3 12.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 12) #define FXAA_QUALITY_PS 5 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 4.0 #define FXAA_QUALITY_P4 12.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 13) #define FXAA_QUALITY_PS 6 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 4.0 #define FXAA_QUALITY_P5 12.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 14) #define FXAA_QUALITY_PS 7 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 4.0 #define FXAA_QUALITY_P6 12.0 #endif 
/*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 15) #define FXAA_QUALITY_PS 8 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 2.0 #define FXAA_QUALITY_P6 4.0 #define FXAA_QUALITY_P7 12.0 #endif /*============================================================================ FXAA QUALITY - LOW DITHER PRESETS ============================================================================*/ #if (FXAA_QUALITY_PRESET == 20) #define FXAA_QUALITY_PS 3 #define FXAA_QUALITY_P0 1.5 #define FXAA_QUALITY_P1 2.0 #define FXAA_QUALITY_P2 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 21) #define FXAA_QUALITY_PS 4 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 22) #define FXAA_QUALITY_PS 5 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 23) #define FXAA_QUALITY_PS 6 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 24) #define FXAA_QUALITY_PS 7 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 3.0 #define FXAA_QUALITY_P6 8.0 #endif /*--------------------------------------------------------------------------*/ #if 
(FXAA_QUALITY_PRESET == 25) #define FXAA_QUALITY_PS 8 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 2.0 #define FXAA_QUALITY_P6 4.0 #define FXAA_QUALITY_P7 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 26) #define FXAA_QUALITY_PS 9 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 2.0 #define FXAA_QUALITY_P6 2.0 #define FXAA_QUALITY_P7 4.0 #define FXAA_QUALITY_P8 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 27) #define FXAA_QUALITY_PS 10 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 2.0 #define FXAA_QUALITY_P6 2.0 #define FXAA_QUALITY_P7 2.0 #define FXAA_QUALITY_P8 4.0 #define FXAA_QUALITY_P9 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 28) #define FXAA_QUALITY_PS 11 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 2.0 #define FXAA_QUALITY_P6 2.0 #define FXAA_QUALITY_P7 2.0 #define FXAA_QUALITY_P8 2.0 #define FXAA_QUALITY_P9 4.0 #define FXAA_QUALITY_P10 8.0 #endif /*--------------------------------------------------------------------------*/ #if (FXAA_QUALITY_PRESET == 29) #define FXAA_QUALITY_PS 12 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.5 #define FXAA_QUALITY_P2 2.0 #define FXAA_QUALITY_P3 2.0 #define FXAA_QUALITY_P4 2.0 #define FXAA_QUALITY_P5 2.0 #define FXAA_QUALITY_P6 2.0 #define FXAA_QUALITY_P7 2.0 #define FXAA_QUALITY_P8 2.0 #define FXAA_QUALITY_P9 2.0 #define 
FXAA_QUALITY_P10 4.0 #define FXAA_QUALITY_P11 8.0 #endif /*============================================================================ FXAA QUALITY - EXTREME QUALITY ============================================================================*/ #if (FXAA_QUALITY_PRESET == 39) #define FXAA_QUALITY_PS 12 #define FXAA_QUALITY_P0 1.0 #define FXAA_QUALITY_P1 1.0 #define FXAA_QUALITY_P2 1.0 #define FXAA_QUALITY_P3 1.0 #define FXAA_QUALITY_P4 1.0 #define FXAA_QUALITY_P5 1.5 #define FXAA_QUALITY_P6 2.0 #define FXAA_QUALITY_P7 2.0 #define FXAA_QUALITY_P8 2.0 #define FXAA_QUALITY_P9 2.0 #define FXAA_QUALITY_P10 4.0 #define FXAA_QUALITY_P11 8.0 #endif /*============================================================================ GREEN AS LUMA OPTION SUPPORT FUNCTION ============================================================================*/ #if (FXAA_GREEN_AS_LUMA == 0) float FxaaLuma(vec4 rgba) { return rgba.w; } #else float FxaaLuma(vec4 rgba) { return rgba.y; } #endif /*============================================================================ FXAA3 QUALITY - PC ============================================================================*/ vec4 FxaaPixelShader( // // Use noperspective interpolation here (turn off perspective interpolation). // {xy} = center of pixel vec2 pos, // // Input color texture. // {rgb_} = color in linear or perceptual color space // if (FXAA_GREEN_AS_LUMA == 0) // {__a} = luma in perceptual color space (not linear) sampler2D tex, // // Only used on FXAA Quality. // This must be from a constant/uniform. // {x_} = 1.0/screenWidthInPixels // {_y} = 1.0/screenHeightInPixels vec2 fxaaQualityRcpFrame, // // Only used on FXAA Quality. // This used to be the FXAA_QUALITY_SUBPIX define. // It is here now to allow easier tuning. // Choose the amount of sub-pixel aliasing removal. // This can effect sharpness. 
// 1.00 - upper limit (softer) // 0.75 - default amount of filtering // 0.50 - lower limit (sharper, less sub-pixel aliasing removal) // 0.25 - almost off // 0.00 - completely off float fxaaQualitySubpix, // // Only used on FXAA Quality. // This used to be the FXAA_QUALITY_EDGE_THRESHOLD define. // It is here now to allow easier tuning. // The minimum amount of local contrast required to apply algorithm. // 0.333 - too little (faster) // 0.250 - low quality // 0.166 - default // 0.125 - high quality // 0.063 - overkill (slower) float fxaaQualityEdgeThreshold, // // Only used on FXAA Quality. // This used to be the FXAA_QUALITY_EDGE_THRESHOLD_MIN define. // It is here now to allow easier tuning. // Trims the algorithm from processing darks. // 0.0833 - upper limit (default, the start of visible unfiltered edges) // 0.0625 - high quality (faster) // 0.0312 - visible limit (slower) // Special notes when using FXAA_GREEN_AS_LUMA, // Likely want to set this to zero. // As colors that are mostly not-green // will appear very dark in the green channel! // Tune by looking at mostly non-green content, // then start at zero and increase until aliasing is a problem. 
    float fxaaQualityEdgeThresholdMin
) {
/*--------------------------------------------------------------------------*/
    vec2 posM;
    posM.x = pos.x;
    posM.y = pos.y;
    vec4 rgbyM = texture2D(tex, posM);
    #if (FXAA_GREEN_AS_LUMA == 0)
        #define lumaM rgbyM.w
    #else
        #define lumaM rgbyM.y
    #endif
    float lumaS = FxaaLuma(texture2D(tex, posM + (vec2(ivec2( 0, 1)) * fxaaQualityRcpFrame.xy)));
    float lumaE = FxaaLuma(texture2D(tex, posM + (vec2(ivec2( 1, 0)) * fxaaQualityRcpFrame.xy)));
    float lumaN = FxaaLuma(texture2D(tex, posM + (vec2(ivec2( 0,-1)) * fxaaQualityRcpFrame.xy)));
    float lumaW = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(-1, 0)) * fxaaQualityRcpFrame.xy)));
/*--------------------------------------------------------------------------*/
    float maxSM = max(lumaS, lumaM);
    float minSM = min(lumaS, lumaM);
    float maxESM = max(lumaE, maxSM);
    float minESM = min(lumaE, minSM);
    float maxWN = max(lumaN, lumaW);
    float minWN = min(lumaN, lumaW);
    float rangeMax = max(maxWN, maxESM);
    float rangeMin = min(minWN, minESM);
    float rangeMaxScaled = rangeMax * fxaaQualityEdgeThreshold;
    float range = rangeMax - rangeMin;
    float rangeMaxClamped = max(fxaaQualityEdgeThresholdMin, rangeMaxScaled);
    bool earlyExit = range < rangeMaxClamped;
/*--------------------------------------------------------------------------*/
    if(earlyExit)
        return rgbyM;
/*--------------------------------------------------------------------------*/
    float lumaNW = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(-1,-1)) * fxaaQualityRcpFrame.xy)));
    float lumaSE = FxaaLuma(texture2D(tex, posM + (vec2(ivec2( 1, 1)) * fxaaQualityRcpFrame.xy)));
    float lumaNE = FxaaLuma(texture2D(tex, posM + (vec2(ivec2( 1,-1)) * fxaaQualityRcpFrame.xy)));
    float lumaSW = FxaaLuma(texture2D(tex, posM + (vec2(ivec2(-1, 1)) * fxaaQualityRcpFrame.xy)));
/*--------------------------------------------------------------------------*/
    float lumaNS = lumaN + lumaS;
    float lumaWE = lumaW + lumaE;
    float subpixRcpRange = 1.0/range;
    float subpixNSWE = lumaNS + lumaWE;
    float edgeHorz1 = (-2.0 * lumaM) + lumaNS;
    float edgeVert1 = (-2.0 * lumaM) + lumaWE;
/*--------------------------------------------------------------------------*/
    float lumaNESE = lumaNE + lumaSE;
    float lumaNWNE = lumaNW + lumaNE;
    float edgeHorz2 = (-2.0 * lumaE) + lumaNESE;
    float edgeVert2 = (-2.0 * lumaN) + lumaNWNE;
/*--------------------------------------------------------------------------*/
    float lumaNWSW = lumaNW + lumaSW;
    float lumaSWSE = lumaSW + lumaSE;
    float edgeHorz4 = (abs(edgeHorz1) * 2.0) + abs(edgeHorz2);
    float edgeVert4 = (abs(edgeVert1) * 2.0) + abs(edgeVert2);
    float edgeHorz3 = (-2.0 * lumaW) + lumaNWSW;
    float edgeVert3 = (-2.0 * lumaS) + lumaSWSE;
    float edgeHorz = abs(edgeHorz3) + edgeHorz4;
    float edgeVert = abs(edgeVert3) + edgeVert4;
/*--------------------------------------------------------------------------*/
    float subpixNWSWNESE = lumaNWSW + lumaNESE;
    float lengthSign = fxaaQualityRcpFrame.x;
    bool horzSpan = edgeHorz >= edgeVert;
    float subpixA = subpixNSWE * 2.0 + subpixNWSWNESE;
/*--------------------------------------------------------------------------*/
    if(!horzSpan) lumaN = lumaW;
    if(!horzSpan) lumaS = lumaE;
    if(horzSpan) lengthSign = fxaaQualityRcpFrame.y;
    float subpixB = (subpixA * (1.0/12.0)) - lumaM;
/*--------------------------------------------------------------------------*/
    float gradientN = lumaN - lumaM;
    float gradientS = lumaS - lumaM;
    float lumaNN = lumaN + lumaM;
    float lumaSS = lumaS + lumaM;
    bool pairN = abs(gradientN) >= abs(gradientS);
    float gradient = max(abs(gradientN), abs(gradientS));
    if(pairN) lengthSign = -lengthSign;
    float subpixC = clamp(abs(subpixB) * subpixRcpRange, 0.0, 1.0);
/*--------------------------------------------------------------------------*/
    vec2 posB;
    posB.x = posM.x;
    posB.y = posM.y;
    vec2 offNP;
    offNP.x = (!horzSpan) ? 0.0 : fxaaQualityRcpFrame.x;
    offNP.y = ( horzSpan) ? 0.0 : fxaaQualityRcpFrame.y;
    if(!horzSpan) posB.x += lengthSign * 0.5;
    if( horzSpan) posB.y += lengthSign * 0.5;
/*--------------------------------------------------------------------------*/
    vec2 posN;
    posN.x = posB.x - offNP.x * FXAA_QUALITY_P0;
    posN.y = posB.y - offNP.y * FXAA_QUALITY_P0;
    vec2 posP;
    posP.x = posB.x + offNP.x * FXAA_QUALITY_P0;
    posP.y = posB.y + offNP.y * FXAA_QUALITY_P0;
    float subpixD = ((-2.0)*subpixC) + 3.0;
    float lumaEndN = FxaaLuma(texture2D(tex, posN));
    float subpixE = subpixC * subpixC;
    float lumaEndP = FxaaLuma(texture2D(tex, posP));
/*--------------------------------------------------------------------------*/
    if(!pairN) lumaNN = lumaSS;
    float gradientScaled = gradient * 1.0/4.0;
    float lumaMM = lumaM - lumaNN * 0.5;
    float subpixF = subpixD * subpixE;
    bool lumaMLTZero = lumaMM < 0.0;
/*--------------------------------------------------------------------------*/
    lumaEndN -= lumaNN * 0.5;
    lumaEndP -= lumaNN * 0.5;
    bool doneN = abs(lumaEndN) >= gradientScaled;
    bool doneP = abs(lumaEndP) >= gradientScaled;
    if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P1;
    if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P1;
    bool doneNP = (!doneN) || (!doneP);
    if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P1;
    if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P1;
/*--------------------------------------------------------------------------*/
    if(doneNP) {
        if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
        if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
        if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
        if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
        doneN = abs(lumaEndN) >= gradientScaled;
        doneP = abs(lumaEndP) >= gradientScaled;
        if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P2;
        if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P2;
        doneNP = (!doneN) || (!doneP);
        if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P2;
        if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P2;
/*--------------------------------------------------------------------------*/
        #if (FXAA_QUALITY_PS > 3)
        if(doneNP) {
            if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
            if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
            if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
            if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
            doneN = abs(lumaEndN) >= gradientScaled;
            doneP = abs(lumaEndP) >= gradientScaled;
            if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P3;
            if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P3;
            doneNP = (!doneN) || (!doneP);
            if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P3;
            if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P3;
/*--------------------------------------------------------------------------*/
            #if (FXAA_QUALITY_PS > 4)
            if(doneNP) {
                if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                doneN = abs(lumaEndN) >= gradientScaled;
                doneP = abs(lumaEndP) >= gradientScaled;
                if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P4;
                if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P4;
                doneNP = (!doneN) || (!doneP);
                if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P4;
                if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P4;
/*--------------------------------------------------------------------------*/
                #if (FXAA_QUALITY_PS > 5)
                if(doneNP) {
                    if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                    if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                    if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                    if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                    doneN = abs(lumaEndN) >= gradientScaled;
                    doneP = abs(lumaEndP) >= gradientScaled;
                    if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P5;
                    if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P5;
                    doneNP = (!doneN) || (!doneP);
                    if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P5;
                    if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P5;
/*--------------------------------------------------------------------------*/
                    #if (FXAA_QUALITY_PS > 6)
                    if(doneNP) {
                        if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                        if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                        if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                        if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                        doneN = abs(lumaEndN) >= gradientScaled;
                        doneP = abs(lumaEndP) >= gradientScaled;
                        if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P6;
                        if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P6;
                        doneNP = (!doneN) || (!doneP);
                        if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P6;
                        if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P6;
/*--------------------------------------------------------------------------*/
                        #if (FXAA_QUALITY_PS > 7)
                        if(doneNP) {
                            if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                            if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                            if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                            if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                            doneN = abs(lumaEndN) >= gradientScaled;
                            doneP = abs(lumaEndP) >= gradientScaled;
                            if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P7;
                            if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P7;
                            doneNP = (!doneN) || (!doneP);
                            if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P7;
                            if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P7;
/*--------------------------------------------------------------------------*/
                            #if (FXAA_QUALITY_PS > 8)
                            if(doneNP) {
                                if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                                if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                                if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                                if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                                doneN = abs(lumaEndN) >= gradientScaled;
                                doneP = abs(lumaEndP) >= gradientScaled;
                                if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P8;
                                if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P8;
                                doneNP = (!doneN) || (!doneP);
                                if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P8;
                                if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P8;
/*--------------------------------------------------------------------------*/
                                #if (FXAA_QUALITY_PS > 9)
                                if(doneNP) {
                                    if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                                    if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                                    if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                                    if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                                    doneN = abs(lumaEndN) >= gradientScaled;
                                    doneP = abs(lumaEndP) >= gradientScaled;
                                    if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P9;
                                    if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P9;
                                    doneNP = (!doneN) || (!doneP);
                                    if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P9;
                                    if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P9;
/*--------------------------------------------------------------------------*/
                                    #if (FXAA_QUALITY_PS > 10)
                                    if(doneNP) {
                                        if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                                        if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                                        if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                                        if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                                        doneN = abs(lumaEndN) >= gradientScaled;
                                        doneP = abs(lumaEndP) >= gradientScaled;
                                        if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P10;
                                        if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P10;
                                        doneNP = (!doneN) || (!doneP);
                                        if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P10;
                                        if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P10;
/*--------------------------------------------------------------------------*/
                                        #if (FXAA_QUALITY_PS > 11)
                                        if(doneNP) {
                                            if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                                            if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                                            if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                                            if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                                            doneN = abs(lumaEndN) >= gradientScaled;
                                            doneP = abs(lumaEndP) >= gradientScaled;
                                            if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P11;
                                            if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P11;
                                            doneNP = (!doneN) || (!doneP);
                                            if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P11;
                                            if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P11;
/*--------------------------------------------------------------------------*/
                                            #if (FXAA_QUALITY_PS > 12)
                                            if(doneNP) {
                                                if(!doneN) lumaEndN = FxaaLuma(texture2D(tex, posN.xy));
                                                if(!doneP) lumaEndP = FxaaLuma(texture2D(tex, posP.xy));
                                                if(!doneN) lumaEndN = lumaEndN - lumaNN * 0.5;
                                                if(!doneP) lumaEndP = lumaEndP - lumaNN * 0.5;
                                                doneN = abs(lumaEndN) >= gradientScaled;
                                                doneP = abs(lumaEndP) >= gradientScaled;
                                                if(!doneN) posN.x -= offNP.x * FXAA_QUALITY_P12;
                                                if(!doneN) posN.y -= offNP.y * FXAA_QUALITY_P12;
                                                doneNP = (!doneN) || (!doneP);
                                                if(!doneP) posP.x += offNP.x * FXAA_QUALITY_P12;
                                                if(!doneP) posP.y += offNP.y * FXAA_QUALITY_P12;
/*--------------------------------------------------------------------------*/
                                            }
                                            #endif
/*--------------------------------------------------------------------------*/
                                        }
                                        #endif
/*--------------------------------------------------------------------------*/
                                    }
                                    #endif
/*--------------------------------------------------------------------------*/
                                }
                                #endif
/*--------------------------------------------------------------------------*/
                            }
                            #endif
/*--------------------------------------------------------------------------*/
                        }
                        #endif
/*--------------------------------------------------------------------------*/
                    }
                    #endif
/*--------------------------------------------------------------------------*/
                }
                #endif
/*--------------------------------------------------------------------------*/
            }
            #endif
/*--------------------------------------------------------------------------*/
        }
        #endif
/*--------------------------------------------------------------------------*/
    }
/*--------------------------------------------------------------------------*/
    float dstN = posM.x - posN.x;
    float dstP = posP.x - posM.x;
    if(!horzSpan) dstN = posM.y - posN.y;
    if(!horzSpan) dstP = posP.y - posM.y;
/*--------------------------------------------------------------------------*/
    bool goodSpanN = (lumaEndN < 0.0) != lumaMLTZero;
    float spanLength = (dstP + dstN);
    bool goodSpanP = (lumaEndP < 0.0) != lumaMLTZero;
    float spanLengthRcp = 1.0/spanLength;
/*--------------------------------------------------------------------------*/
    bool directionN = dstN < dstP;
    float dst = min(dstN, dstP);
    bool goodSpan = directionN ? goodSpanN : goodSpanP;
    float subpixG = subpixF * subpixF;
    float pixelOffset = (dst * (-spanLengthRcp)) + 0.5;
    float subpixH = subpixG * fxaaQualitySubpix;
/*--------------------------------------------------------------------------*/
    float pixelOffsetGood = goodSpan ? pixelOffset : 0.0;
    float pixelOffsetSubpix = max(pixelOffsetGood, subpixH);
    if(!horzSpan) posM.x += pixelOffsetSubpix * lengthSign;
    if( horzSpan) posM.y += pixelOffsetSubpix * lengthSign;
    return vec4(texture2D(tex, posM).xyz, lumaM);
}

void main(void) {
    #if (FXAA_LUMA)
        #if (FXAA_GREEN_AS_LUMA)
            gl_FragColor = vec4(texture2D(texture, uv).ggg, 1.0);
        #else
            gl_FragColor = vec4(texture2D(texture, uv).aaa, 1.0);
        #endif
    #elif (FXAA_ENABLE)
        gl_FragColor = FxaaPixelShader(
            uv,
            texture,
            RcpFrame,
            u_fxaaQualitySubpix,
            u_fxaaQualityEdgeThreshold,
            u_fxaaQualityEdgeThresholdMin);
    #else
        gl_FragColor = vec4(texture2D(texture, uv).rgb, 1.0);
    #endif
}

WebGL Javascript FXAA-interactive.js