Look-up tables, more commonly referred to as LUTs, are as old as mathematics itself. Precalculating values into a row or table is nothing new. But in the realm of graphics programming, this simple act unlocks some incredibly creative techniques, which both artists and programmers discovered when faced with tough technical hurdles.

We’ll embark on a small journey, which will take us from simple things like turning grayscale footage into color, to creating limitless variations of blood-lusting zombies, with many interactive WebGL examples along the way, that you can try out with your own videos or webcam. Though this article uses WebGL, the techniques shown apply to any other graphics programming context, be it DirectX, OpenGL, Vulkan, game engines like Unity, or plain scientific data visualization.

We’ll be creating and modifying the video above, though you may substitute the footage with your own at any point in the article. The video is a capture of two cameras, a Panasonic GH6 and a TESTO 890 thermal camera. I’m eating cold ice cream and drinking hot tea to stretch the temperatures on display.

The Setup #

We’ll start with the thermal camera footage. The output of the thermal camera is a grayscale video. Instead of this video, you may upload your own or activate the webcam, which even allows you to live stream from a thermal camera using OBS’s virtual webcam output and various input methods.

No data leaves your device, all processing happens on your GPU. Feel free to use videos exposing your most intimate secrets.

Don't pause the video, it's the live input for the WebGL examples below

Next we upload this footage to the graphics card using WebGL and redisplay it using a shader, which leaves the footage untouched. Each frame is transferred as a 2D texture to the GPU. Though we haven’t actually done anything visually yet, we have established a graphics pipeline, which allows us to manipulate the video data in realtime. From here on out, we are mainly interested in the “Fragment Shader”. This is the piece of code that runs per pixel of the video to determine its final color.

I'm hardcore simplifying here. Technically there are many shader stages, the fragment shader runs per fragment of the output resolution not per pixel of the input, etc.
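To make the "runs per pixel" idea concrete, here is a rough CPU-side sketch of what the GPU does for us. The names `runPerPixel` and `passThrough` are made up for this illustration and are not part of the article's code; the loop just stands in for the GPU invoking the fragment shader once for every output pixel.

```javascript
/* Hypothetical CPU stand-in for a fragment shader pass.
   `pixels` is a flat RGBA byte array, `shade` plays the role of main(). */
function runPerPixel(pixels, shade) {
    const out = new Uint8ClampedArray(pixels.length);
    for (let i = 0; i < pixels.length; i += 4) {
        /* Normalize 8-bit channels to 0.0 - 1.0, the range a texture read returns */
        const color = [pixels[i] / 255, pixels[i + 1] / 255, pixels[i + 2] / 255];
        const [r, g, b] = shade(color);
        out[i] = Math.round(r * 255);
        out[i + 1] = Math.round(g * 255);
        out[i + 2] = Math.round(b * 255);
        out[i + 3] = 255; /* Alpha is fixed to 1.0, as in the shader */
    }
    return out;
}

/* Pass-through "shader": leaves the footage untouched */
const passThrough = (color) => color;

/* Three pixels: black, mid gray, white */
const frame = new Uint8ClampedArray([0, 0, 0, 255, 128, 128, 128, 255, 255, 255, 255, 255]);
console.log(runPerPixel(frame, passThrough));
```

Every manipulation in the rest of the article amounts to swapping out the `shade` function, only that the GPU runs it massively in parallel.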


WebGL Vertex Shader fullscreen-tri.vs

attribute vec2 vtx;
attribute vec2 UVs;
varying vec2 tex;

void main()
{
    tex = UVs;
    gl_Position = vec4(vtx, 0.0, 1.0);
}

WebGL Fragment Shader video-simple.fs

/* In WebGL 1.0, having no #version implies #version 100 */
/* In WebGL we have to set the float precision. On some devices it doesn't
   change anything. For color manipulation, having mediump is ample. For
   precision trigonometry, bumping to highp is often needed. */
precision mediump float;
/* Our texture coordinates */
varying vec2 tex;
/* Our video texture */
uniform sampler2D video;

void main(void)
{
    /* The texture read, also called "Texture Tap" with the coordinate for
       the current pixel. */
    vec3 videoColor = texture2D(video, tex).rgb;

    /* Our final color. In WebGL 1.0 this output is always RGBA and always
       named "gl_FragColor" */
    gl_FragColor = vec4(videoColor, 1.0);
}

WebGL Javascript fullscreen-tri.js

"use strict";

/* Helpers */
function createAndCompileShader(gl, type, source, canvas) {
    const shader = gl.createShader(type);
    const element = document.getElementById(source);
    let shaderSource;

    /* Shader source lives either in a <script> tag or in an ace editor */
    if (element.tagName === 'SCRIPT')
        shaderSource = element.text;
    else
        shaderSource = ace.edit(source).getValue();

    gl.shaderSource(shader, shaderSource);
    gl.compileShader(shader);
    if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS))
        displayErrorMessage(canvas, gl.getShaderInfoLog(shader));
    else
        displayErrorMessage(canvas, "");
    return shader;
}

function displayErrorMessage(canvas, message) {
    let errorElement = canvas.nextSibling;
    const hasErrorElement = errorElement && errorElement.tagName === 'PRE';

    if (message) {
        if (!hasErrorElement) {
            errorElement = document.createElement('pre');
            errorElement.style.color = 'red';
            canvas.parentNode.insertBefore(errorElement, canvas.nextSibling);
        }
        errorElement.textContent = `Shader Compilation Error: ${message}`;
        canvas.style.display = 'none';
        errorElement.style.display = 'block';
    } else {
        if (hasErrorElement)
            errorElement.style.display = 'none';
        canvas.style.display = 'block';
    }
}

function setupTexture(gl, target, source) {
    gl.deleteTexture(target);
    target = gl.createTexture();
    gl.bindTexture(gl.TEXTURE_2D, target);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
    /* Technically we can prepare the Black and White video as mono H.264 and
       upload just one channel, but keep it simple for the blog post */
    gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGB, gl.RGB, gl.UNSIGNED_BYTE, source);
    return target;
}

function setupTri(canvasId, vertexId, fragmentId, videoId, lut, lutselect, buttonId) {
    /* Init */
    const canvas = document.getElementById(canvasId);
    const gl = canvas.getContext('webgl', { preserveDrawingBuffer: false });
    const lutImg = document.getElementById(lut);
    let lutTexture, videoTexture, shaderProgram, lutTextureLocation;

    /* Shaders */
    function initializeShaders() {
        const vertexShader = createAndCompileShader(gl, gl.VERTEX_SHADER, vertexId, canvas);
        const fragmentShader = createAndCompileShader(gl, gl.FRAGMENT_SHADER, fragmentId, canvas);

        shaderProgram = gl.createProgram();
        gl.attachShader(shaderProgram, vertexShader);
        gl.attachShader(shaderProgram, fragmentShader);
        gl.linkProgram(shaderProgram);

        /* Clean-up */
        gl.detachShader(shaderProgram, vertexShader);
        gl.detachShader(shaderProgram, fragmentShader);
        gl.deleteShader(vertexShader);
        gl.deleteShader(fragmentShader);

        gl.useProgram(shaderProgram);
        /* Uniform locations belong to one linked program, so look the LUT
           sampler up again every time the shaders are (re)built */
        lutTextureLocation = gl.getUniformLocation(shaderProgram, "lut");
    }
    initializeShaders();

    if (buttonId) {
        const button = document.getElementById(buttonId);
        button.addEventListener('click', function () {
            if (shaderProgram)
                gl.deleteProgram(shaderProgram);
            initializeShaders();
        });
    }

    /* Video Setup */
    let video = document.getElementById(videoId);
    let videoTextureInitialized = false;
    let lutTextureInitialized = false;

    function updateTextures() {
        if (!video) {
            /* Fight reload issues */
            video = document.getElementById(videoId);
        }
        if (video && video.paused && video.readyState >= 4) {
            /* Fighting battery optimizations */
            video.loop = true;
            video.muted = true;
            video.playsInline = true;
            video.play();
        }
        if (lut && lutImg.naturalWidth && !lutTextureInitialized) {
            lutTexture = setupTexture(gl, lutTexture, lutImg);
            lutTextureInitialized = true;
        }

        gl.activeTexture(gl.TEXTURE0);
        if (video.readyState >= video.HAVE_CURRENT_DATA) {
            if (!videoTextureInitialized || video.videoWidth !== canvas.width || video.videoHeight !== canvas.height) {
                videoTexture = setupTexture(gl, videoTexture, video);
                canvas.width = video.videoWidth;
                canvas.height = video.videoHeight;
                videoTextureInitialized = true;
            }
            /* Update without recreation */
            gl.bindTexture(gl.TEXTURE_2D, videoTexture);
            gl.texSubImage2D(gl.TEXTURE_2D, 0, 0, 0, gl.RGB, gl.UNSIGNED_BYTE, video);

            if (lut) {
                gl.activeTexture(gl.TEXTURE1);
                gl.bindTexture(gl.TEXTURE_2D, lutTexture);
                gl.uniform1i(lutTextureLocation, 1);
            }
        }
    }

    if (lutselect) {
        const lutSelectElement = document.getElementById(lutselect);
        if (lutSelectElement) {
            lutSelectElement.addEventListener('change', function () {
                /* Select Box */
                if (lutSelectElement.tagName === 'SELECT') {
                    const newPath = lutSelectElement.value;
                    lutImg.onload = function () {
                        lutTextureInitialized = false;
                    };
                    lutImg.src = newPath;
                }
                /* Input box */
                else if (lutSelectElement.tagName === 'INPUT' && lutSelectElement.type === 'file') {
                    const file = lutSelectElement.files[0];
                    if (file) {
                        const reader = new FileReader();
                        reader.onload = function (e) {
                            lutImg.onload = function () {
                                lutTextureInitialized = false;
                            };
                            lutImg.src = e.target.result;
                        };
                        reader.readAsDataURL(file);
                    }
                }
            });
        }
    }

    /* Vertex Buffer with a Fullscreen Triangle */
    /* Position and UV coordinates */
    const unitTri = new Float32Array([
        -1.0,  3.0, 0.0, -1.0,
        -1.0, -1.0, 0.0,  1.0,
         3.0, -1.0, 2.0,  1.0
    ]);

    const vertex_buffer = gl.createBuffer();
    gl.bindBuffer(gl.ARRAY_BUFFER, vertex_buffer);
    gl.bufferData(gl.ARRAY_BUFFER, unitTri, gl.STATIC_DRAW);

    /* Each vertex is 4 floats: 2 for the position, followed by 2 for the UVs */
    const vtx = gl.getAttribLocation(shaderProgram, "vtx");
    gl.enableVertexAttribArray(vtx);
    gl.vertexAttribPointer(vtx, 2, gl.FLOAT, false, 4 * Float32Array.BYTES_PER_ELEMENT, 0);

    const texCoord = gl.getAttribLocation(shaderProgram, "UVs");
    gl.enableVertexAttribArray(texCoord);
    gl.vertexAttribPointer(texCoord, 2, gl.FLOAT, false, 4 * Float32Array.BYTES_PER_ELEMENT, 2 * Float32Array.BYTES_PER_ELEMENT);

    function redraw() {
        updateTextures();
        gl.viewport(0, 0, canvas.width, canvas.height);
        gl.drawArrays(gl.TRIANGLES, 0, 3);
    }

    let isRendering = false;

    function renderLoop() {
        redraw();
        if (isRendering) {
            requestAnimationFrame(renderLoop);
        }
    }

    /* Only render while the canvas is visible on screen */
    function handleIntersection(entries) {
        entries.forEach(entry => {
            if (entry.isIntersecting) {
                if (!isRendering) {
                    isRendering = true;
                    renderLoop();
                }
            } else {
                isRendering = false;
                videoTextureInitialized = false;
                gl.deleteTexture(videoTexture);
            }
        });
    }

    let observer = new IntersectionObserver(handleIntersection);
    observer.observe(canvas);
};

Both the video and its WebGL rendition should be identical and playing in sync.

Unless you are on Firefox Android, where video is broken for WebGL

Tinting #

Before we jump into how LUTs can help us, let’s take a look at how we can manipulate this footage. The Fragment Shader below colors the image orange by multiplying the image with the color orange. Coloring a texture that way is referred to as “tinting”.

vec3 finalColor = videoColor * vec3(1.0, 0.5, 0.0); is the line that performs this transformation. vec3(1.0, 0.5, 0.0) is the color orange in RGB. Try changing this line and clicking “Reload Shader” to get a feel for how this works. Also try out different operations, like addition +, division / etc.

/* In WebGL 1.0, having no #version implies #version 100 */
/* In WebGL we have to set the float precision. On some devices it doesn't
   change anything. For color manipulation, having mediump is ample. For
   precision trigonometry, bumping to highp is often needed. */
precision mediump float;
/* Our texture coordinates */
varying vec2 tex;
/* Our video texture */
uniform sampler2D video;

void main(void)
{
    /* The texture read, also called "Texture Tap" with the coordinate for
       the current pixel. */
    vec3 videoColor = texture2D(video, tex).rgb;

    /* Here is where the tinting happens. We multiply with (1.0, 0.5, 0.0),
       which is orange (100% Red, 50% Green, 0% Blue). White becomes Orange,
       since multiplying 1 with X gives you X. Black stays black, since 0
       times X is 0. Try out different things! */
    vec3 finalColor = videoColor * vec3(1.0, 0.5, 0.0);

    /* Our final color. In WebGL 1.0 this output is always RGBA and always
       named "gl_FragColor" */
    gl_FragColor = vec4(finalColor, 1.0);
}
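If you'd rather poke at the math outside of a shader, the same component-wise multiplication can be sketched on the CPU. The helper name `tint` is made up for this illustration; colors are plain arrays in the 0.0 to 1.0 range, just like a `vec3`.

```javascript
/* Component-wise multiply, the equivalent of videoColor * vec3(...) in GLSL */
function tint(color, tintColor) {
    return color.map((channel, i) => channel * tintColor[i]);
}

const orange = [1.0, 0.5, 0.0];

console.log(tint([1.0, 1.0, 1.0], orange)); /* White becomes orange */
console.log(tint([0.5, 0.5, 0.5], orange)); /* Mid gray becomes a darker orange */
console.log(tint([0.0, 0.0, 0.0], orange)); /* Black stays black */
```

Since every channel can only be scaled down by a tint in the 0.0 to 1.0 range, tinting can darken and shift a color, but never make it brighter than the source.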

Performance cost: Zero #

Depending on the context, the multiplication introduced by the tinting has zero performance impact. On a theoretical level, the multiplication has a cost associated with it, since the chip has to perform this multiplication at some point. But you will probably not be able to measure it in this context, as the multiplication is affected by “latency hiding”. The act, cost and latency of pushing the video through the graphics pipeline unlocks a lot of manipulations we get for free this way. We can rationalize this on multiple levels, but the main points are:

- Fetching the texture from memory takes way more time than a multiplication

- Even though the result depends on the texture tap, with multiple threads the multiplication is performed while waiting on the texture tap of another pixel

This is about the difference tinting makes, not overall performance. Lots is left on the optimization table, like asynchronously loading the frames to a single-channel texture or processing only on every new video frame, not on every display refresh.

In a similar vein, this also came up in a recent blog post by Alyssa Rosenzweig about her GPU reverse engineering project, which achieved proper standard-conformant OpenGL drivers on the Apple M1. Regarding the performance implications of a specific additional operation, she noted:

Alyssa Rosenzweig: The difference should be small percentage-wise, as arithmetic is faster than memory. With thousands of threads running in parallel, the arithmetic cost may even be hidden by the load’s latency.

Valve Software’s use of tinting #

Let’s take a look at how this is used in the wild. As an example, we have Valve Software’s Left 4 Dead. The in-game developer commentary feature unlocks much shared wisdom from artists and programmers alike. Here is the audio log of developer Tristan Reidford explaining how they utilized tinting to create car variations. In particular, they use one extra texture channel to mark additional tinting regions, so that two colors can be used to tint different regions of the 3D model.

Tristan Reidford: Usually each model in the game has its own unique texture maps painted specifically for that model, which give the object its surface colors and detail. To have a convincing variety of cars using this method would have required as many textures as varieties of car, plus multiple duplicates of the textures in different colors, which would have been far out of our allotted texture memory budget. So we had to find a more efficient way to bring about that same result. For example, the texture on this car is shared with 3 different car models distributed throughout the environment. In addition to this one color texture, there is also a ‘mask’ texture that allows each instance of the car’s painted surfaces to be tinted a different color, without having to author a separate texture. So for the cost of two textures you can get four different car models in an unlimited variety of colors.

Note that it’s not just cars. Essentially everything in the Source Engine can be tinted.
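To get a feel for how a mask can restrict tinting to certain regions, here is a minimal sketch. This is not Valve's actual shader code: the function names and the blend formula are assumptions made for illustration. The idea is that the mask value (0.0 to 1.0) decides per pixel how much of the tinted color replaces the original texture color.

```javascript
/* Linear blend between a and b, the same idea as GLSL's mix() */
function mix(a, b, t) {
    return a + (b - a) * t;
}

/* Hypothetical masked tint: where mask is 1.0 the pixel is fully tinted,
   where mask is 0.0 the painted texture color is left untouched */
function tintWithMask(baseColor, tintColor, mask) {
    return baseColor.map((channel, i) => mix(channel, channel * tintColor[i], mask));
}

const carPaint = [0.8, 0.8, 0.8]; /* Light gray base texture */
const red = [1.0, 0.0, 0.0];

console.log(tintWithMask(carPaint, red, 1.0)); /* Painted body panel: tinted red */
console.log(tintWithMask(carPaint, red, 0.0)); /* Tire, window, etc.: unchanged */
console.log(tintWithMask(carPaint, red, 0.5)); /* Soft mask edge: halfway blend */
```

With one such mask per region, a single color texture can serve many instances, each passing in its own tint color.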

The LUT - Simple, yet powerful #

Now that we have gotten an idea of how we can interact and manipulate color in a graphics programming context, let’s dive into how a LUT can elevate that. The core of the idea is this: Instead of defining how the colors are changed across their entire range, let’s define what color range changes in what way. If you have replaced the above thermal image with an RGB video of your own, then just the red channel will be used going forward.

The following examples make more sense in context of thermal camera footage, so you can click the following button to revert to it, if you wish.

The humble 1D LUT #

A 1D LUT is a simple array of numbers. If it is stored as an RGB image, then it is a 1D array of colors. According to that array, we will color our gray video. In the context of graphics programming, this gets uploaded as a 1D texture to the graphics card, where it is used to transform the single-channel pixels into RGB.
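The article's examples load their LUTs from image files, but to underline that a LUT really is just an array, here is a sketch that builds one from a handful of color stops. The function `buildLut` and the black-red-yellow stops are invented for this illustration, and it assumes at least two stops.

```javascript
/* Build a 1D LUT as raw RGBA bytes by blending between color stops.
   `stops` is an array of [r, g, b] colors (0 - 255), `width` is the
   number of entries the LUT should have. */
function buildLut(stops, width) {
    const data = new Uint8Array(width * 4);
    for (let x = 0; x < width; x++) {
        /* Position along the gradient: 0.0 for the first entry, 1.0 for the last */
        const t = width > 1 ? x / (width - 1) : 0;
        const scaled = t * (stops.length - 1);
        /* Index of the stop to the left, and how far we are towards the next one */
        const i = Math.min(Math.floor(scaled), stops.length - 2);
        const f = scaled - i;
        for (let c = 0; c < 3; c++) {
            data[x * 4 + c] = Math.round(stops[i][c] + (stops[i + 1][c] - stops[i][c]) * f);
        }
        data[x * 4 + 3] = 255; /* Opaque alpha */
    }
    return data;
}

/* Hypothetical "hot" style gradient: black, red, yellow */
const hotLut = buildLut([[0, 0, 0], [255, 0, 0], [255, 255, 0]], 5);
console.log(hotLut);

/* In a WebGL context, such an array could be uploaded as a texture of 1px height:
   gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, 5, 1, 0, gl.RGBA, gl.UNSIGNED_BYTE, hotLut); */
```

RGBA is used here instead of RGB purely to keep every entry at 4 bytes, which sidesteps any row alignment questions during upload.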

WebGL Fragment Shader video-lut.fs

precision mediump float;
varying vec2 tex;
uniform sampler2D video;
uniform sampler2D lut;

void main(void)
{
    /* We'll just take the red channel .r */
    float videoColor = texture2D(video, tex).r;
    vec4 finalColor = texture2D(lut, vec2(videoColor, 0.5));
    gl_FragColor = finalColor;
}

And here comes the neat part. Looking at the fragment shader, we use the brightness of the video, which ranges from 0.0 to 1.0, to index into the X-axis of our 1D LUT, whose texture coordinates also range from 0.0 to 1.0. Conceptually, this is the expression vec4 finalColor = texture(lut, videoColor);. In WebGL 1.0 we don’t have 1D textures, so we use a 2D texture of 1px height instead, and the actual code needs a Y coordinate as well: vec4 finalColor = texture2D(lut, vec2(videoColor, 0.5));. Since the texture is only one pixel tall, the exact Y value doesn’t particularly matter.

The 0.0 black in the video is mapped to the color on the left and 1.0 white in the video is mapped to the color on the right, with all colors in between being assigned to their corresponding values. 1D vector in, 3D vector out.
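Stripped of all GPU specifics, the mapping described here is a plain array lookup. The following sketch uses a made-up four-entry LUT and a hypothetical helper `lookupNearest`; it ignores filtering and simply picks the entry whose span the gray value falls into.

```javascript
/* Map a gray value in 0.0 - 1.0 to a LUT entry, without any interpolation.
   There is no Y coordinate here, since the LUT has only a single row. */
function lookupNearest(lut, gray) {
    const u = Math.min(Math.max(gray, 0.0), 1.0);
    /* Each entry covers 1 / lut.length of the range. 1.0 would land one
       past the end, so clamp to the last entry. */
    const index = Math.min(Math.floor(u * lut.length), lut.length - 1);
    return lut[index];
}

/* Made-up LUT: dark blue, green, yellow, red */
const lut = [[0, 0, 128], [0, 255, 0], [255, 255, 0], [255, 0, 0]];

console.log(lookupNearest(lut, 0.0)); /* Leftmost color for black */
console.log(lookupNearest(lut, 0.3)); /* Second entry */
console.log(lookupNearest(lut, 1.0)); /* Rightmost color for white */
```

One number goes in, a three-component color comes out.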

What makes this map so well to the GPU is that we get bilinear filtering for free when performing texture reads. So if our 8-bit-per-channel video has 256 distinct shades of grey, but our 1D LUT is only 32 pixels wide, then a texture access that falls in between two pixels gets linearly interpolated automatically. In the above selection box you can try setting the 1D LUT to different sizes and compare.
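What the texture unit does during such a filtered read can be approximated on the CPU. The sketch below is a simplified model of linear filtering with clamping at the edges, not an exact replica of any particular GPU; `sampleLinear` is a name made up for this illustration. The key detail is that each entry's color sits at the center of its pixel, at (i + 0.5) / width.

```javascript
/* Approximate a linearly filtered read from a 1D LUT with edge clamping.
   `lut` is an array of [r, g, b] colors, `u` is the coordinate in 0.0 - 1.0. */
function sampleLinear(lut, u) {
    const n = lut.length;
    /* Shift by half a pixel, because colors are defined at pixel centers */
    const pos = Math.min(Math.max(u, 0.0), 1.0) * n - 0.5;
    const i0 = Math.floor(pos);
    const f = pos - i0; /* Blend factor towards the right neighbor */
    /* Clamp neighbor indices, so reads past the border repeat the edge color */
    const left = lut[Math.min(Math.max(i0, 0), n - 1)];
    const right = lut[Math.min(Math.max(i0 + 1, 0), n - 1)];
    return left.map((c, k) => c + (right[k] - c) * f);
}

/* A LUT of just two entries: black and white */
const tinyLut = [[0, 0, 0], [1, 1, 1]];

console.log(sampleLinear(tinyLut, 0.25)); /* Center of the first pixel: black */
console.log(sampleLinear(tinyLut, 0.5));  /* Halfway between both centers: mid gray */
console.log(sampleLinear(tinyLut, 0.75)); /* Center of the second pixel: white */
```

In this model, the outermost half pixel on each side has no second neighbor to blend with, so the color stays flat there. With wider LUTs that region shrinks accordingly.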

Incredible how closely the 256 pixel wide and very colorful gradient is reproduced, with only 8 pixels worth of information!

So many colors #

Here is every single colormap that matplotlib supports, exported as a 1D LUT. Scroll through all of them and choose your favorite!

WebGL Javascript fullscreen-tri.js

"use strict"; /* Helpers */ function createAndCompileShader(gl, type, source, canvas) { const shader = gl.createShader(type); const element = document.getElementById(source); let shaderSource; if (element.tagName === 'SCRIPT') shaderSource = element.text; else shaderSource = ace.edit(source).getValue(); gl.shaderSource(shader, shaderSource); gl.compileShader(shader); if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) displayErrorMessage(canvas, gl.getShaderInfoLog(shader)); else displayErrorMessage(canvas, ""); return shader; } function displayErrorMessage(canvas, message) { let errorElement = canvas.nextSibling; const hasErrorElement = errorElement && errorElement.tagName === 'PRE'; if (message) { if (!hasErrorElement) { errorElement = document.createElement('pre'); errorElement.style.color = 'red'; canvas.parentNode.insertBefore(errorElement, canvas.nextSibling); } errorElement.textContent = `Shader Compilation Error: ${message}`; canvas.style.display = 'none'; errorElement.style.display = 'block'; } else { if (hasErrorElement) errorElement.style.display = 'none'; canvas.style.display = 'block'; } } function setupTexture(gl, target, source) { gl.deleteTexture(target); target = gl.createTexture(); gl.bindTexture(gl.TEXTURE_2D, target); gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE); gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE); gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR); gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR); /* Technically we can prepare the Black and White video as mono H.264 and upload just one channel, but keep it simple for the blog post */ gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGB, gl.RGB, gl.UNSIGNED_BYTE, source); return target; } function setupTri(canvasId, vertexId, fragmentId, videoId, lut, lutselect, buttonId) { /* Init */ const canvas = document.getElementById(canvasId); const gl = canvas.getContext('webgl', { preserveDrawingBuffer: false }); const lutImg 
= document.getElementById(lut); let lutTexture, videoTexture, shaderProgram; /* Shaders */ function initializeShaders() { const vertexShader = createAndCompileShader(gl, gl.VERTEX_SHADER, vertexId, canvas); const fragmentShader = createAndCompileShader(gl, gl.FRAGMENT_SHADER, fragmentId, canvas); shaderProgram = gl.createProgram(); gl.attachShader(shaderProgram, vertexShader); gl.attachShader(shaderProgram, fragmentShader); gl.linkProgram(shaderProgram); /* Clean-up */ gl.detachShader(shaderProgram, vertexShader); gl.detachShader(shaderProgram, fragmentShader); gl.deleteShader(vertexShader); gl.deleteShader(fragmentShader); gl.useProgram(shaderProgram); } initializeShaders(); const lutTextureLocation = gl.getUniformLocation(shaderProgram, "lut"); if (buttonId) { const button = document.getElementById(buttonId); button.addEventListener('click', function () { if (shaderProgram) gl.deleteProgram(shaderProgram); initializeShaders(); }); } /* Video Setup */ let video = document.getElementById(videoId); let videoTextureInitialized = false; let lutTextureInitialized = false; function updateTextures() { if (!video) { /* Fight reload issues */ video = document.getElementById(videoId); } if (video && video.paused && video.readyState >= 4) { /* Fighting battery optimizations */ video.loop = true; video.muted = true; video.playsinline = true; video.play(); } if (lut && lutImg.naturalWidth && !lutTextureInitialized) { lutTexture = setupTexture(gl, lutTexture, lutImg); lutTextureInitialized = true; } gl.activeTexture(gl.TEXTURE0); if (video.readyState >= video.HAVE_CURRENT_DATA) { if (!videoTextureInitialized || video.videoWidth !== canvas.width || video.videoHeight !== canvas.height) { videoTexture = setupTexture(gl, videoTexture, video); canvas.width = video.videoWidth; canvas.height = video.videoHeight; videoTextureInitialized = true; } /* Update without recreation */ gl.bindTexture(gl.TEXTURE_2D, videoTexture); gl.texSubImage2D(gl.TEXTURE_2D, 0, 0, 0, gl.RGB, 
gl.UNSIGNED_BYTE, video); if (lut) { gl.activeTexture(gl.TEXTURE1); gl.bindTexture(gl.TEXTURE_2D, lutTexture); gl.uniform1i(lutTextureLocation, 1); } } } if (lutselect) { const lutSelectElement = document.getElementById(lutselect); if (lutSelectElement) { lutSelectElement.addEventListener('change', function () { /* Select Box */ if (lutSelectElement.tagName === 'SELECT') { const newPath = lutSelectElement.value; lutImg.onload = function () { lutTextureInitialized = false; }; lutImg.src = newPath; } /* Input box */ else if (lutSelectElement.tagName === 'INPUT' && lutSelectElement.type === 'file') { const file = lutSelectElement.files[0]; if (file) { const reader = new FileReader(); reader.onload = function (e) { lutImg.onload = function () { lutTextureInitialized = false; }; lutImg.src = e.target.result; }; reader.readAsDataURL(file); } } }); } } /* Vertex Buffer with a Fullscreen Triangle */ /* Position and UV coordinates */ const unitTri = new Float32Array([ -1.0, 3.0, 0.0, -1.0, -1.0, -1.0, 0.0, 1.0, 3.0, -1.0, 2.0, 1.0 ]); const vertex_buffer = gl.createBuffer(); gl.bindBuffer(gl.ARRAY_BUFFER, vertex_buffer); gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(unitTri), gl.STATIC_DRAW); const vtx = gl.getAttribLocation(shaderProgram, "vtx"); gl.enableVertexAttribArray(vtx); gl.vertexAttribPointer(vtx, 2, gl.FLOAT, false, 4 * Float32Array.BYTES_PER_ELEMENT, 0); const texCoord = gl.getAttribLocation(shaderProgram, "UVs"); gl.enableVertexAttribArray(texCoord); gl.vertexAttribPointer(texCoord, 2, gl.FLOAT, false, 4 * Float32Array.BYTES_PER_ELEMENT, 2 * Float32Array.BYTES_PER_ELEMENT); function redraw() { updateTextures(); gl.viewport(0, 0, canvas.width, canvas.height); gl.drawArrays(gl.TRIANGLES, 0, 3); } let isRendering = false; function renderLoop() { redraw(); if (isRendering) { requestAnimationFrame(renderLoop); } } function handleIntersection(entries) { entries.forEach(entry => { if (entry.isIntersecting) { if (!isRendering) { isRendering = true; renderLoop(); } } 
else { isRendering = false; videoTextureInitialized = false; gl.deleteTexture(videoTexture); } }); } let observer = new IntersectionObserver(handleIntersection); observer.observe(canvas); };

Sike! It’s a trick question. You don’t get to choose. You may think that you should choose whatever looks best, but in matters of taste, the customer isn’t always right.

Unless your data has specific structure, there is actually one colormap type that you should be using or basing your color settings on - “Perceptually Uniform”, like the viridis family of colormaps. We won’t dive into such a deep topic here, but the main points are these:

- If you choose from the Perceptually Uniform ones, then printing your data in black and white will still have the “cold” parts dark and “hot” parts bright

- This is not a given with colorful options like jet, which modify mainly just the hue whilst ignoring perceived lightness

- People with color blindness will still be able to interpret your data correctly

Reasons for this and why other colormaps are dangerous for judging critical information are presented by Stefan van der Walt and Nathaniel J. Smith in this talk.
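The first two points can be checked numerically. The following is a minimal sketch and is not taken from the talk: it assumes colormap samples given as RGB triples in the range 0 to 1, and it uses the Rec. 709 luma weights as a rough stand-in for perceived lightness (a proper analysis would work in a perceptual color space). The names `luma` and `hasMonotonicLightness`, as well as the two tiny sample ramps, are made up for illustration.

```javascript
// Rec. 709 luma weights: a rough proxy for how bright a color ends up in grayscale.
function luma([r, g, b]) {
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// True if the samples never get darker from one entry to the next.
function hasMonotonicLightness(samples) {
  for (let i = 1; i < samples.length; i++) {
    if (luma(samples[i]) < luma(samples[i - 1])) return false;
  }
  return true;
}

// A plain gray ramp versus a hue sweep (blue -> green -> red),
// the latter being a crude stand-in for rainbow-style maps like jet.
const grayRamp = [[0, 0, 0], [0.5, 0.5, 0.5], [1, 1, 1]];
const hueSweep = [[0, 0, 1], [0, 1, 0], [1, 0, 0]];

console.log(hasMonotonicLightness(grayRamp)); // true
console.log(hasMonotonicLightness(hueSweep)); // false
```

In the hue sweep, green is far brighter than the red that follows it, so "hotter" data would print darker in black and white.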

Still performance free? #

We talked about tinting being essentially performance free. With (small 1D) LUTs it gets complicated, though the answer is still probably yes. The main concern is that we create something called a “dependent texture read”: we trigger one texture read based on the result of another. In graphics programming this is a performance sin, as it eliminates a whole class of optimized paths that graphics drivers could otherwise consider.

GPUs have texture caches, which our LUT will have no problem fitting into, and which will probably make LUT texture reads very cheap. To measure performance this finely, how caches are hit and the like, we require advanced debugging tools, which are platform specific. There is Nvidia NSight, which allows you to break down the performance of each step in the shader, though OpenGL is unsupported for this. Either way, this is not the topic of this article. There is one more thing though…

You can perform polynomial approximations of a colormap and thus side-step the LUT texture read. The next WebGL fragment shader features a polynomial approximation of viridis. It was created by Matt Zucker, available on ShaderToy including polynomials for other colormaps. Compare both the original colormap exported as a LUT and the approximation exported as a LUT in the following two stripes. Remarkably close.


WebGL Vertex Shader fullscreen-tri.vs

attribute vec2 vtx; attribute vec2 UVs; varying vec2 tex; void main() { tex = UVs; gl_Position = vec4(vtx, 0.0, 1.0); }

WebGL Fragment Shader video-lut_viridis.fs

precision mediump float; varying vec2 tex; uniform sampler2D video; vec3 viridis(float t) { const vec3 c0 = vec3(0.2777273272234177, 0.005407344544966578, 0.3340998053353061); const vec3 c1 = vec3(0.1050930431085774, 1.404613529898575, 1.384590162594685); const vec3 c2 = vec3(-0.3308618287255563, 0.214847559468213, 0.09509516302823659); const vec3 c3 = vec3(-4.634230498983486, -5.799100973351585, -19.33244095627987); const vec3 c4 = vec3(6.228269936347081, 14.17993336680509, 56.69055260068105); const vec3 c5 = vec3(4.776384997670288, -13.74514537774601, -65.35303263337234); const vec3 c6 = vec3(-5.435455855934631, 4.645852612178535, 26.3124352495832); return c0+t*(c1+t*(c2+t*(c3+t*(c4+t*(c5+t*c6))))); } void main(void) { float videoColor = texture2D(video, tex).r; gl_FragColor = vec4(viridis(videoColor), 1.0); }

The resulting shader evaluates the polynomial using Horner’s method, performing a chain of multiply-adds, c0+t*(c1+t*(c2+t*(c3+t*(c4+t*(c5+t*c6))))), to get the color instead of the texture lookup. This is a prime candidate for being optimized into a few Fused Multiply-Add (FMA) instructions. Even considering theoretical details, this is as good as it gets. Whether or not this is actually faster than a LUT, though, is difficult to judge without deep platform-specific analysis.
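To make the nesting easier to follow, here is a small CPU-side sketch of Horner’s method in JavaScript. It is only an illustration: the helper name `horner` is made up, and the coefficient list is just the red channel of `c0` to `c6` copied from the viridis shader above.

```javascript
// Evaluate c[0] + c[1]*t + c[2]*t^2 + ... with Horner's method:
// start from the highest coefficient and fold in one multiply-add per step.
function horner(coeffs, t) {
  let result = 0;
  for (let i = coeffs.length - 1; i >= 0; i--) {
    result = result * t + coeffs[i];
  }
  return result;
}

// Red-channel coefficients c0..c6 copied from the viridis shader.
const viridisRed = [
  0.2777273272234177, 0.1050930431085774, -0.3308618287255563,
  -4.634230498983486, 6.228269936347081, 4.776384997670288,
  -5.435455855934631,
];

console.log(horner([1, 2, 3], 2));  // 1 + 2*2 + 3*4 = 17
console.log(horner(viridisRed, 0)); // at t = 0 only c0 remains
```

A degree-6 polynomial needs six multiply-adds this way, with no explicit powers of `t` ever computed.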

Saves you from handling the LUT texture, quite the time saver!

Diversity for Zombies #

Let’s take a look at how far this technique can be stretched. This time we are looking at the sequel, Left 4 Dead 2. Here is Bronwen Grimes explaining how Valve Software achieved different color variations of different zombie parts, something simple tinting couldn’t deliver well enough, as the resulting colors lacked luminance variety.

Source: Excerpt from "Shading a Bigger, Better Sequel: Techniques in Left 4 Dead 2"

GDC 2010 talk by Bronwen Grimes

The resulting variations can be seen in the following screenshot. The most important feature being the ability to create both a bright & dark shade of suit from one texture.

Source: Excerpt from "Shading a Bigger, Better Sequel: Techniques in Left 4 Dead 2"

GDC 2010 talk by Bronwen Grimes

With just a couple of LUTs chosen at random for skin and clothes, the following color variations are achieved. Fitting color ramps were chosen by artists and included in the final game. This is the part I find so remarkable - how such a simple technique was leveled up to bring so much value to the visual experience. All at the cost of a simple texture read.
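The core of the idea, one grayscale texture pushed through different color ramps, can be sketched on the CPU in a few lines. This is an illustration under assumptions rather than Valve’s implementation: the ramps `darkSuit` and `brightSuit` are invented values, `sampleRamp` is a made-up helper, and the lookup ignores the half-texel offsets a real GPU texture read would involve.

```javascript
// Look up a grayscale value t in [0, 1] in a color ramp (array of [r, g, b]),
// blending linearly between the two nearest entries, similar to LINEAR filtering.
function sampleRamp(ramp, t) {
  const clamped = Math.min(Math.max(t, 0), 1);
  const pos = clamped * (ramp.length - 1);
  const i0 = Math.floor(pos);
  const i1 = Math.min(i0 + 1, ramp.length - 1);
  const f = pos - i0;
  return ramp[i0].map((c, ch) => c + (ramp[i1][ch] - c) * f);
}

// Two hypothetical ramps applied to the same grayscale suit texture.
const darkSuit = [[0, 0, 0], [40, 40, 60], [90, 90, 120]];
const brightSuit = [[60, 50, 40], [200, 190, 170], [255, 250, 240]];

const texel = 0.5; // one mid-gray pixel of the shared texture
console.log(sampleRamp(darkSuit, texel));   // [40, 40, 60]
console.log(sampleRamp(brightSuit, texel)); // [200, 190, 170]
```

Because the ramp controls brightness as well as hue, the same texel can end up dark or bright, which a single multiplicative tint cannot do.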

Source: Excerpt from "Shading a Bigger, Better Sequel: Techniques in Left 4 Dead 2"

GDC 2010 talk by Bronwen Grimes

Check out the full talk on the GDC page, if you are interested in such techniques.

The creativity of them using "Exclusive Masking" blew me away. It was the first time I learned about it: two textures in one channel, set to specific ranges (Texture 1: 0-128, Texture 2: 128-256), at the cost of color precision.
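One possible reading of that packing scheme, sketched in JavaScript. This is an assumption about how such a channel could be decoded, not Valve’s shader code: the function name `decodeExclusiveMask`, the region labels and the exact split at 128 are chosen here for illustration.

```javascript
// Decode one 8-bit channel that stores two grayscale textures in disjoint ranges.
// Assumed layout: 0..127 belongs to region "A", 128..255 to region "B".
// Each region only has 128 levels left, which is the loss of precision mentioned above.
function decodeExclusiveMask(byte) {
  if (byte < 128) {
    return { region: "A", value: byte / 127 };
  }
  return { region: "B", value: (byte - 128) / 127 };
}

console.log(decodeExclusiveMask(127)); // { region: 'A', value: 1 }
console.log(decodeExclusiveMask(128)); // { region: 'B', value: 0 }
console.log(decodeExclusiveMask(255)); // { region: 'B', value: 1 }
```

The decoded `region` would then pick which color ramp to use, and `value` would be the position within that ramp.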

Precalculating calculations #

One more use for 1D LUTs in graphics programming is to cache expensive calculations. One such example is gamma correction, especially if the standard-conforming sRGB piece-wise curve is required instead of the Gamma 2.2 approximation.

Unless we talk about various approximations, gamma correction requires the use of the function pow(), which especially on older GPUs is a very expensive instruction. Add to that a branching path, if the piece-wise curve is needed. Or even worse, if you had to contend with the bananas level awful 4-segment piece-wise approximation the Xbox 360 uses. Precalculating that into a 1D LUT skips such per-pixel calculations.
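As a minimal sketch of that precalculation, the snippet below bakes the sRGB piece-wise decoding curve into a 256-entry table. The constants are the standard sRGB ones; the function names and the choice of a `Float32Array` with one entry per 8-bit input are assumptions made for this example.

```javascript
// Standard sRGB decoding: a linear segment below the threshold, a power curve above it.
function srgbToLinear(c) {
  return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Precalculate the curve once for all 256 possible 8-bit inputs.
function buildSrgbToLinearLut() {
  const lut = new Float32Array(256);
  for (let i = 0; i < 256; i++) {
    lut[i] = srgbToLinear(i / 255);
  }
  return lut;
}

const srgbLut = buildSrgbToLinearLut();
// Per pixel, the pow() and the branch are now a single array (or texture) read.
console.log(srgbLut[0], srgbLut[128], srgbLut[255]);
```

Uploaded as a 256x1 texture, the same table would replace both the branch and the `pow()` call in a shader with one texture read.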

At the bottom of the LUT collection select box in the chapter “So many colors”, I included two gamma ramps for reference: Gamma 2.2 and the inverse of Gamma 2.2. For this example it is 1D vector in, 1D vector out, but you can also output up to 4D vectors with a 1D LUT, as we have 4 color channels. Whether or not there is a benefit from accelerating gamma transformations via 1D LUTs is a question only answerable via benchmarking, but you could imagine other calculations that would definitely benefit.

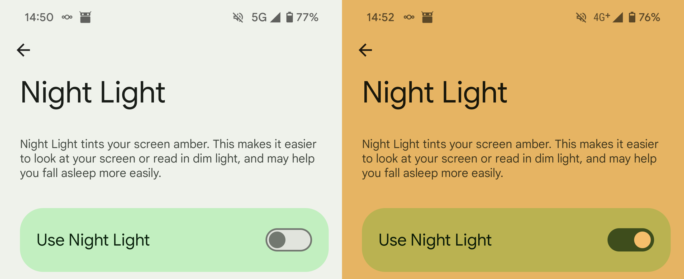

An example of this in the wild is tinting the monitor orange at night to prevent eye strain, performed by software like Redshift. This works by changing the Gamma Ramp, a 1D LUT each for the Red, Green and Blue channel of the monitor. To do so, it precalculates the Kelvin Warmth -> RGB and additional gamma calculations by generating three 1D LUTs, as seen in Redshift’s source code.
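The shape of such a precalculation can be sketched as follows. This does not reproduce Redshift’s code: `buildGammaRamps` is a made-up helper, the white point multipliers are invented values standing in for the result of a Kelvin to RGB conversion, and the 256-entry, 16-bit ramp format is an assumption.

```javascript
// Build one 16-bit ramp per channel from a white point (per-channel multipliers)
// and a gamma value. Both inputs are hypothetical examples.
function buildGammaRamps(whitePoint, gamma, size = 256) {
  const ramps = whitePoint.map(() => new Uint16Array(size));
  for (let i = 0; i < size; i++) {
    const level = i / (size - 1);
    whitePoint.forEach((scale, ch) => {
      const v = Math.pow(level * scale, 1 / gamma);
      ramps[ch][i] = Math.round(Math.min(v, 1) * 65535);
    });
  }
  return ramps; // [red, green, blue]
}

// A made-up warm white point: full red, reduced green, strongly reduced blue.
const [red, green, blue] = buildGammaRamps([1.0, 0.8, 0.6], 1.0);
console.log(red[255], green[255], blue[255]);
```

All the per-pixel work is baked into three small tables, which is why applying them costs nothing at runtime.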

The approach of Redshift and similar pieces of software is pretty awesome with its truly zero performance impact, as the remapping is done by the monitor, not the graphics card. Though support for this hardware interface is pretty horrible across the board these days and more often than not broken or unimplemented, with graphics stacks like the one of the Raspberry Pi working backwards and losing support with newer updates. Microsoft even warns developers not to use that Gamma Hardware API with a warning box longer than the API documentation itself.

Quite the sad state for a solution this elegant. A sign of the times, with hardware support deemed too shaky and more features becoming software filters.

The powerful 3D LUT #

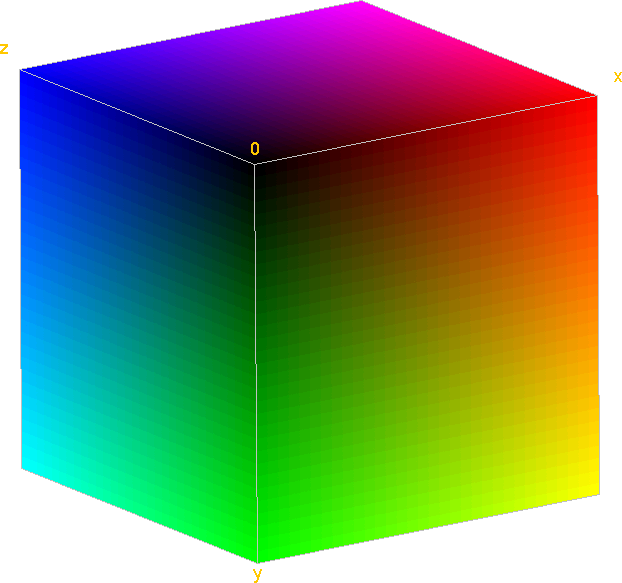

Let’s go 3D! The basic idea is that we represent the entire RGB space in one cube remapping all possible colors, loaded and sampled as a 3D texture. As before, by modifying the LUT, we modify the mapping of the colors. 3D vector in, 3D vector out.

You can change color balance with a 1D LUT each for Red, Green and Blue. So what a 3D LUT can do that three 1D LUTs cannot isn't so obvious. 3D LUT cubes are needed for changes requiring a combination of RGB as input, like changes to saturation, hue, specific colors or to perform color isolation.
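A saturation change makes the difference concrete. The sketch below is one common way to adjust saturation, assumed here for illustration (Rec. 709 luma weights, made-up function name `adjustSaturation`), not a formula from any particular tool.

```javascript
// Scale saturation by blending each channel with the pixel's luma.
// Every output channel depends on all three input channels,
// which is exactly what three independent 1D LUTs cannot express.
function adjustSaturation([r, g, b], amount) {
  const y = 0.2126 * r + 0.7152 * g + 0.0722 * b; // Rec. 709 luma weights
  return [r, g, b].map((c) => y + (c - y) * amount);
}

console.log(adjustSaturation([1, 0, 0], 0)); // pure red collapses to its gray value
console.log(adjustSaturation([1, 0, 0], 1)); // amount 1 leaves the color (almost exactly) unchanged
```

The new green value of a pixel depends on how much red and blue it had, so a table indexed by green alone can never hold the answer.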

Again, the LUT can be any size, but typically a cube is used. It is usually saved as a strip or square containing the cube, separated as planes, for use in video games, or as an “Iridas/Adobe” .cube file, which video editors use. Here is the 32³px cube as a strip.
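To show how such a strip is laid out, here is a sketch that generates the pixels of a neutral LUT strip. It assumes the layout the fragment shader in this article reads: red runs along x within a slice, green runs along y, blue selects the slice, and row 0 is the top row. The function name `makeIdentityLutStrip` and the RGBA byte format are choices made for this example.

```javascript
// Build the pixels of a neutral (identity) 3D LUT laid out as a horizontal strip:
// `size` slices side by side, each slice `size` x `size` pixels.
function makeIdentityLutStrip(size = 32) {
  const width = size * size;
  const height = size;
  const data = new Uint8ClampedArray(width * height * 4); // RGBA bytes
  const toByte = (i) => Math.round((i / (size - 1)) * 255);
  for (let b = 0; b < size; b++) {
    for (let g = 0; g < size; g++) {
      for (let r = 0; r < size; r++) {
        const x = b * size + r; // blue picks the slice, red the column inside it
        const y = g;            // green picks the row, row 0 being the top
        const offset = (y * width + x) * 4;
        data[offset] = toByte(r);
        data[offset + 1] = toByte(g);
        data[offset + 2] = toByte(b);
        data[offset + 3] = 255;
      }
    }
  }
  return { width, height, data };
}

const strip = makeIdentityLutStrip(32);
console.log(strip.width, strip.height); // 1024 32
```

In a browser, `data` could be wrapped in `new ImageData(strip.data, strip.width, strip.height)` and drawn to a canvas to export the strip as an image.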

We can load these planes as a 32³px cube and display it in 3D as voxels.

Since it’s in 3D, we only see the outermost voxels. We map the Red to X, Green to Y and Blue to Z, since this is identical to the mapping on graphics cards. You may have noticed the origin being in the top left. This is due to DirectX having the texture coordinate origin in the top left, as opposed to OpenGL, which has its origin in the bottom left. Generally the DirectX layout is the unofficial standard, though nothing prevents you from flipping it.

This is the reason why screenshots from OpenGL are sometimes vertically flipped, when handled by tools expecting DirectX layout and vice versa. Many libraries have a switch to handle that.
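Converting between the two conventions is just a matter of reversing the row order. A minimal sketch, assuming tightly packed pixel data in a typed array and a made-up helper name `flipRows`:

```javascript
// Flip an image vertically by copying rows in reverse order, converting between a
// top-left origin (DirectX style) and a bottom-left origin (OpenGL style).
function flipRows(pixels, width, height, channels = 4) {
  const rowLength = width * channels;
  const flipped = new Uint8Array(pixels.length);
  for (let y = 0; y < height; y++) {
    const src = y * rowLength;
    const dst = (height - 1 - y) * rowLength;
    flipped.set(pixels.subarray(src, src + rowLength), dst);
  }
  return flipped;
}

// A 1x2 RGBA image: red pixel on top, blue pixel below.
const image = new Uint8Array([255, 0, 0, 255, 0, 0, 255, 255]);
console.log(Array.from(flipRows(image, 1, 2))); // [0, 0, 255, 255, 255, 0, 0, 255]
```

In WebGL specifically, the same effect is available at upload time via `gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true)`.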

Setup #

We’ll be using this footage shot on the Panasonic GH6. It is shot in its Panasonic V-Log color profile (what a horrible name, not to be confused with a vlog), a logarithmic profile retaining more dynamic range and most importantly, having a rigid definition of both Gamut and Gamma, compatible with conversions to other color profiles. Unprocessed, it looks very washed out and very boring.

You may substitute your own footage, though the examples don’t make much sense outside of V-Log color profile footage.

And now we load the footage again into WebGL and process it with a 3D LUT in its initial state, meaning visually there should be no changes.


WebGL Vertex Shader fullscreen-tri.vs

attribute vec2 vtx; attribute vec2 UVs; varying vec2 tex; void main() { tex = UVs; gl_Position = vec4(vtx, 0.0, 1.0); }

WebGL Fragment Shader video-3Dlut.fs

precision mediump float; varying vec2 tex; uniform sampler2D video; uniform sampler2D lut; /* 3D Texture Lookup from https://github.com/WebGLSamples/WebGLSamples.github.io/blob/master/color-adjust/color-adjust.html */ vec4 sampleAs3DTexture(sampler2D tex, vec3 texCoord, float size) { float sliceSize = 1.0 / size; // space of 1 slice float slicePixelSize = sliceSize / size; // space of 1 pixel float width = size - 1.0; float sliceInnerSize = slicePixelSize * width; // space of size pixels float zSlice0 = floor(texCoord.z * width); float zSlice1 = min(zSlice0 + 1.0, width); float xOffset = slicePixelSize * 0.5 + texCoord.x * sliceInnerSize; float yRange = (texCoord.y * width + 0.5) / size; float s0 = xOffset + (zSlice0 * sliceSize); float s1 = xOffset + (zSlice1 * sliceSize); vec4 slice0Color = texture2D(tex, vec2(s0, yRange)); vec4 slice1Color = texture2D(tex, vec2(s1, yRange)); float zOffset = mod(texCoord.z * width, 1.0); return mix(slice0Color, slice1Color, zOffset); } void main(void) { vec3 videoColor = texture2D(video, tex).rgb; vec4 correctedColor = sampleAs3DTexture(lut, videoColor, 32.0); gl_FragColor = correctedColor; }

One technical detail is that for compatibility I’m using WebGL 1.0, so 3D Textures are not supported. We have to implement a 3D texture read by performing two 2D texture reads and blending between them. This is a fairly well-known problem with one typical solution, and a line-by-line explanation is provided in this Google I/O 2011 Talk by Gregg Tavares, which this article by webglfundamentals.org is based on.

Unfortunately, that code contains a mistake around the Z-axis calculation of the cube, shifting the colors blue, a mistake corrected in 2019. So if you want to perform the same backwards compatibility to WebGL 1.0, OpenGL ES 2 or OpenGL 2.1 without the OES_texture_3D extension, make sure you copy the most recent version, as used here.
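To see what the two 2D reads actually address, the coordinate math of `sampleAs3DTexture` from the fragment shader above can be ported to the CPU. This is a sketch for inspection only: the function name `lutStripCoords` and the returned field names are made up, and the texture reads and the final `mix()` are left out.

```javascript
// CPU-side port of the coordinate math in sampleAs3DTexture.
// Returns where the two 2D reads land on the strip and how to blend them.
function lutStripCoords([r, g, b], size = 32) {
  const sliceSize = 1 / size;                   // width of one slice in UV space
  const slicePixelSize = sliceSize / size;      // width of one pixel in UV space
  const width = size - 1;
  const sliceInnerSize = slicePixelSize * width;
  const zSlice0 = Math.floor(b * width);        // slice at or below the blue value
  const zSlice1 = Math.min(zSlice0 + 1, width); // next slice, clamped to the last one
  const xOffset = slicePixelSize * 0.5 + r * sliceInnerSize;
  const v = (g * width + 0.5) / size;
  return {
    u0: xOffset + zSlice0 * sliceSize,
    u1: xOffset + zSlice1 * sliceSize,
    v,
    blend: (b * width) % 1,                     // fraction between the two slices
  };
}

console.log(lutStripCoords([0, 0, 0.5]));
```

With a blue value of 0.5 the two reads land in slices 15 and 16 and are blended half and half. The half-pixel offsets keep both reads on texel centers, so neighbouring slices don’t bleed into each other.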

Simple corrections #

As with the 1D LUT, any correction we apply to the LUT will be applied to the footage or graphics scene we use. In the following example I imported my footage and LUT into DaVinci Resolve. I applied Panasonic’s “V-Log to V-709 3D-LUT”, which transforms the footage into what Panasonic considers a pleasing standard look. Then a bit of contrast and white point correction to make white full-bright were applied. Afterwards the LUT was exported again. This LUT and its result are shown below.


WebGL Vertex Shader fullscreen-tri.vs

attribute vec2 vtx; attribute vec2 UVs; varying vec2 tex; void main() { tex = UVs; gl_Position = vec4(vtx, 0.0, 1.0); }

WebGL Fragment Shader video-3Dlut.fs

precision mediump float; varying vec2 tex; uniform sampler2D video; uniform sampler2D lut; /* 3D Texture Lookup from https://github.com/WebGLSamples/WebGLSamples.github.io/blob/master/color-adjust/color-adjust.html */ vec4 sampleAs3DTexture(sampler2D tex, vec3 texCoord, float size) { float sliceSize = 1.0 / size; // space of 1 slice float slicePixelSize = sliceSize / size; // space of 1 pixel float width = size - 1.0; float sliceInnerSize = slicePixelSize * width; // space of size pixels float zSlice0 = floor(texCoord.z * width); float zSlice1 = min(zSlice0 + 1.0, width); float xOffset = slicePixelSize * 0.5 + texCoord.x * sliceInnerSize; float yRange = (texCoord.y * width + 0.5) / size; float s0 = xOffset + (zSlice0 * sliceSize); float s1 = xOffset + (zSlice1 * sliceSize); vec4 slice0Color = texture2D(tex, vec2(s0, yRange)); vec4 slice1Color = texture2D(tex, vec2(s1, yRange)); float zOffset = mod(texCoord.z * width, 1.0); return mix(slice0Color, slice1Color, zOffset); } void main(void) { vec3 videoColor = texture2D(video, tex).rgb; vec4 correctedColor = sampleAs3DTexture(lut, videoColor, 32.0); gl_FragColor = correctedColor; }WebGL Javascript fullscreen-tri.js

"use strict";

/* Helpers */
function createAndCompileShader(gl, type, source, canvas) {
    const shader = gl.createShader(type);
    const element = document.getElementById(source);
    let shaderSource;

    /* Shader source lives either in a <script> tag or in an ace editor */
    if (element.tagName === 'SCRIPT')
        shaderSource = element.text;
    else
        shaderSource = ace.edit(source).getValue();

    gl.shaderSource(shader, shaderSource);
    gl.compileShader(shader);
    if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS))
        displayErrorMessage(canvas, gl.getShaderInfoLog(shader));
    else
        displayErrorMessage(canvas, "");
    return shader;
}

function displayErrorMessage(canvas, message) {
    let errorElement = canvas.nextSibling;
    const hasErrorElement = errorElement && errorElement.tagName === 'PRE';

    if (message) {
        if (!hasErrorElement) {
            errorElement = document.createElement('pre');
            errorElement.style.color = 'red';
            canvas.parentNode.insertBefore(errorElement, canvas.nextSibling);
        }
        errorElement.textContent = `Shader Compilation Error: ${message}`;
        canvas.style.display = 'none';
        errorElement.style.display = 'block';
    } else {
        if (hasErrorElement)
            errorElement.style.display = 'none';
        canvas.style.display = 'block';
    }
}

function setupTexture(gl, target, source) {
    gl.deleteTexture(target);
    target = gl.createTexture();
    gl.bindTexture(gl.TEXTURE_2D, target);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
    gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
    /* Technically we can prepare the Black and White video as mono H.264 and
       upload just one channel, but keep it simple for the blog post */
    gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGB, gl.RGB, gl.UNSIGNED_BYTE, source);
    return target;
}

function setupTri(canvasId, vertexId, fragmentId, videoId, lut, lutselect, buttonId) {
    /* Init */
    const canvas = document.getElementById(canvasId);
    const gl = canvas.getContext('webgl', { preserveDrawingBuffer: false });
    const lutImg = document.getElementById(lut);
    let lutTexture, videoTexture, shaderProgram;

    /* Shaders */
    function initializeShaders() {
        const vertexShader = createAndCompileShader(gl, gl.VERTEX_SHADER, vertexId, canvas);
        const fragmentShader = createAndCompileShader(gl, gl.FRAGMENT_SHADER, fragmentId, canvas);

        shaderProgram = gl.createProgram();
        gl.attachShader(shaderProgram, vertexShader);
        gl.attachShader(shaderProgram, fragmentShader);
        gl.linkProgram(shaderProgram);

        /* Clean-up */
        gl.detachShader(shaderProgram, vertexShader);
        gl.detachShader(shaderProgram, fragmentShader);
        gl.deleteShader(vertexShader);
        gl.deleteShader(fragmentShader);

        gl.useProgram(shaderProgram);
    }
    initializeShaders();

    const lutTextureLocation = gl.getUniformLocation(shaderProgram, "lut");

    if (buttonId) {
        const button = document.getElementById(buttonId);
        button.addEventListener('click', function () {
            if (shaderProgram)
                gl.deleteProgram(shaderProgram);
            initializeShaders();
        });
    }

    /* Video Setup */
    let video = document.getElementById(videoId);
    let videoTextureInitialized = false;
    let lutTextureInitialized = false;

    function updateTextures() {
        if (!video) {
            /* Fight reload issues */
            video = document.getElementById(videoId);
        }
        if (video && video.paused && video.readyState >= 4) {
            /* Fighting battery optimizations */
            video.loop = true;
            video.muted = true;
            /* The DOM property is camel-cased; `playsinline` is only the attribute name */
            video.playsInline = true;
            video.play();
        }
        if (lut && lutImg.naturalWidth && !lutTextureInitialized) {
            lutTexture = setupTexture(gl, lutTexture, lutImg);
            lutTextureInitialized = true;
        }

        gl.activeTexture(gl.TEXTURE0);
        if (video.readyState >= video.HAVE_CURRENT_DATA) {
            if (!videoTextureInitialized || video.videoWidth !== canvas.width || video.videoHeight !== canvas.height) {
                videoTexture = setupTexture(gl, videoTexture, video);
                canvas.width = video.videoWidth;
                canvas.height = video.videoHeight;
                videoTextureInitialized = true;
            }
            /* Update without recreation */
            gl.bindTexture(gl.TEXTURE_2D, videoTexture);
            gl.texSubImage2D(gl.TEXTURE_2D, 0, 0, 0, gl.RGB, gl.UNSIGNED_BYTE, video);

            if (lut) {
                gl.activeTexture(gl.TEXTURE1);
                gl.bindTexture(gl.TEXTURE_2D, lutTexture);
                gl.uniform1i(lutTextureLocation, 1);
            }
        }
    }

    if (lutselect) {
        const lutSelectElement = document.getElementById(lutselect);
        if (lutSelectElement) {
            lutSelectElement.addEventListener('change', function () {
                /* Select Box */
                if (lutSelectElement.tagName === 'SELECT') {
                    const newPath = lutSelectElement.value;
                    lutImg.onload = function () {
                        lutTextureInitialized = false;
                    };
                    lutImg.src = newPath;
                }
                /* Input box */
                else if (lutSelectElement.tagName === 'INPUT' && lutSelectElement.type === 'file') {
                    const file = lutSelectElement.files[0];
                    if (file) {
                        const reader = new FileReader();
                        reader.onload = function (e) {
                            lutImg.onload = function () {
                                lutTextureInitialized = false;
                            };
                            lutImg.src = e.target.result;
                        };
                        reader.readAsDataURL(file);
                    }
                }
            });
        }
    }

    /* Vertex Buffer with a Fullscreen Triangle */
    /* Position and UV coordinates */
    const unitTri = new Float32Array([
        -1.0,  3.0, 0.0, -1.0,
        -1.0, -1.0, 0.0,  1.0,
         3.0, -1.0, 2.0,  1.0
    ]);

    const vertex_buffer = gl.createBuffer();
    gl.bindBuffer(gl.ARRAY_BUFFER, vertex_buffer);
    gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(unitTri), gl.STATIC_DRAW);

    const vtx = gl.getAttribLocation(shaderProgram, "vtx");
    gl.enableVertexAttribArray(vtx);
    gl.vertexAttribPointer(vtx, 2, gl.FLOAT, false, 4 * Float32Array.BYTES_PER_ELEMENT, 0);

    const texCoord = gl.getAttribLocation(shaderProgram, "UVs");
    gl.enableVertexAttribArray(texCoord);
    gl.vertexAttribPointer(texCoord, 2, gl.FLOAT, false, 4 * Float32Array.BYTES_PER_ELEMENT, 2 * Float32Array.BYTES_PER_ELEMENT);

    function redraw() {
        updateTextures();
        gl.viewport(0, 0, canvas.width, canvas.height);
        gl.drawArrays(gl.TRIANGLES, 0, 3);
    }

    let isRendering = false;

    function renderLoop() {
        redraw();
        if (isRendering) {
            requestAnimationFrame(renderLoop);
        }
    }

    /* Only render while the canvas is on screen */
    function handleIntersection(entries) {
        entries.forEach(entry => {
            if (entry.isIntersecting) {
                if (!isRendering) {
                    isRendering = true;
                    renderLoop();
                }
            } else {
                isRendering = false;
                videoTextureInitialized = false;
                gl.deleteTexture(videoTexture);
            }
        });
    }

    let observer = new IntersectionObserver(handleIntersection);
    observer.observe(canvas);
};

With the two buttons above you can also download the clean LUT, screenshot the uncorrected footage in the “Setup” chapter and apply your own corrections. The upload LUT button lets you replace the LUT and see the result. Be aware that the LUT has to keep the exact same 1024 px × 32 px size, that is, 32 slices of 32 × 32 px each, forming a 32³ cube.
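To make the size requirement concrete, here is a minimal sketch of how a color finds its pixel in such a strip. It assumes the layout implied by the lookup shader used in this article (slices side by side, red along x inside a slice, green along y, blue selecting the slice) and no vertical flip on upload. The names `isValidLutSize` and `lutPixelCoord` are hypothetical helpers, not part of the article's code.

```javascript
// Hypothetical helpers, assuming a horizontal strip of `size` slices,
// each `size` x `size` pixels (1024 x 32 for size = 32).

// Checks that an image has the dimensions of a size^3 LUT strip.
function isValidLutSize(width, height, size = 32) {
  return width === size * size && height === size;
}

// Maps lattice indices (0 .. size-1 per channel) to the pixel that stores
// the output color for that input color.
function lutPixelCoord(r, g, b, size = 32) {
  return { x: b * size + r, y: g };
}

console.log(isValidLutSize(1024, 32));  // true
console.log(lutPixelCoord(31, 0, 1));   // { x: 63, y: 0 }
```

Resizing or cropping the image shifts every color to a different lattice position, which is why the dimensions cannot change.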

Just to clarify, the video used is still the original! DaVinci Resolve exported a LUT, not a video. The full color correction is happening right now.

Left 4 Dead’s use of 3D LUTs #

Using 3D LUTs to style your game’s colors with outside tools is a well-known, standard workflow across the video game industry, unlike the game-specific 1D LUT use we saw earlier for introducing variation in skin and clothes. The way it works is:

- take a screenshot of the scene you want to color correct

- open it and an initialized 3D LUT in Photoshop or similar photo editing software

- apply your color corrections to both the screenshot and the LUT at the same time

- crop out and export the 3D LUT
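The "initialized" LUT in this workflow is a neutral one, in which every color maps to itself. As a rough sketch, such a strip could be generated like this. The function name `createIdentityLut` is hypothetical, and the layout (slices side by side, red along x, green along y, blue per slice) is an assumption matching the 1024 px × 32 px strips used in this article.

```javascript
// Builds a neutral ("identity") LUT strip as RGBA bytes: every lattice color
// maps to itself, so applying it leaves an image unchanged.
// Assumed layout: `size` slices side by side, red along x within a slice,
// green along y, blue selecting the slice.
function createIdentityLut(size = 32) {
  const width = size * size;
  const height = size;
  const data = new Uint8ClampedArray(width * height * 4);
  const scale = 255 / (size - 1);

  for (let b = 0; b < size; b++) {
    for (let g = 0; g < size; g++) {
      for (let r = 0; r < size; r++) {
        const i = (g * width + b * size + r) * 4;
        data[i + 0] = Math.round(r * scale);
        data[i + 1] = Math.round(g * scale);
        data[i + 2] = Math.round(b * scale);
        data[i + 3] = 255; // fully opaque
      }
    }
  }
  return { width, height, data };
}

const lut = createIdentityLut(32);
console.log(lut.width, lut.height); // 1024 32
```

In a browser, the result can be wrapped in `new ImageData(lut.data, lut.width, lut.height)` and drawn to a canvas to save it as an image.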

Left 4 Dead, our running example, does exactly this. Here is a tutorial walking you through the process for Left 4 Dead 2 specifically.

You can freely use any of Photoshop’s color correction tools. The only limitation is that you may not use filters that depend on the relation between multiple pixels, because a LUT maps each color on its own, with no knowledge of its neighbors. So convolutions like blur, sharpen, emboss, etc., cannot be used. Or rather, they will lead to unexpected results by blurring the remapped colors.
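The reason becomes clearer in code. The sketch below bakes a per-color function into a tiny lattice and looks colors up again. Since the lookup only ever receives a single color, there is no place for neighboring pixels to enter. `bakeLut`, `applyLut` and the nearest-neighbor lookup are simplifications for illustration, not the article's shader.

```javascript
// Bakes a per-color correction into a size^3 lattice (channels in 0..1).
// Entries are stored with red changing fastest, then green, then blue.
function bakeLut(size, correct) {
  const lut = [];
  const max = size - 1;
  for (let b = 0; b < size; b++)
    for (let g = 0; g < size; g++)
      for (let r = 0; r < size; r++)
        lut.push(correct([r / max, g / max, b / max]));
  return lut;
}

// Nearest-neighbor lookup: the only input is one color.
function applyLut(lut, size, [r, g, b]) {
  const q = (v) => Math.round(v * (size - 1));
  return lut[(q(b) * size + q(g)) * size + q(r)];
}

// Example correction: invert every channel.
const invert = ([r, g, b]) => [1 - r, 1 - g, 1 - b];
const invertLut = bakeLut(4, invert);

console.log(applyLut(invertLut, 4, [1, 0, 0])); // [ 0, 1, 1 ]
```

Curves, levels, hue shifts and similar tools fit this shape, while a blur would need the colors of surrounding pixels as additional inputs.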

Advanced Adventures #

But we aren’t limited to simple corrections. In-depth video editing and color grading suites like DaVinci Resolve allow you to implement complicated color transforms and color grades and export those as 3D LUTs. This field is so incredibly complicated that implementing these yourself is far beyond practical.

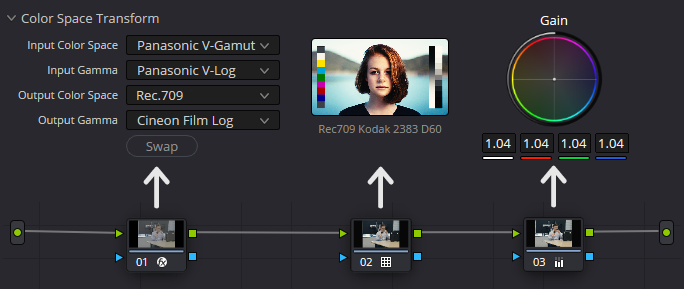

Here is a color grading node network applying the filmic look “Kodak 2383”, a LUT aimed at achieving a certain film print look. To do so, we need to transform our V-Log gamma footage into Cineon Film Log and the colors into Rec.709, as stated in the header of the Kodak 2383 LUT itself (see below). Afterwards we can apply the film emulation on top, which also transforms our gamma back into Rec.709. Finally we adjust the white point, so white is actually full bright.

Rec709 Kodak 2383 D60.cube

# Resolve Film Look LUT

# Input: Cineon Log

# : floating point data (range 0.0 - 1.0)

# Output: Kodak 2383 film stock 'look' with D60 White Point

# : floating point data (range 0.0 - 1.0)

# Display: ITU-Rec.709, Gamma 2.4

LUT_3D_SIZE 33

LUT_3D_INPUT_RANGE 0.0 1.0

0.026979 0.027936 0.031946

0.028308 0.028680 0.033007

0.029028 0.029349 0.034061

0.032303 0.030119 0.034824

0.038008 0.031120 0.035123

< Cut-off for brevity >
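A text file like the one above is simple to read programmatically. The following is a minimal sketch of a reader that only understands comments, `LUT_3D_SIZE` and the data rows, and skips every other keyword. `parseCube` is a hypothetical name, and a real loader would also want to validate that the file contains size³ rows.

```javascript
// Minimal .cube reader: handles comments, LUT_3D_SIZE and data rows only.
// Other keyword lines (for example LUT_3D_INPUT_RANGE) are skipped.
function parseCube(text) {
  let size = 0;
  const rows = [];

  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === '' || line.startsWith('#')) continue; // blank or comment

    const parts = line.split(/\s+/);
    if (parts[0] === 'LUT_3D_SIZE') {
      size = parseInt(parts[1], 10);
      continue;
    }

    // A data row is exactly three numbers: R G B
    const values = parts.map(Number);
    if (parts.length === 3 && values.every(Number.isFinite)) rows.push(values);
  }
  return { size, rows };
}

const sample = [
  '# Resolve Film Look LUT',
  'LUT_3D_SIZE 33',
  'LUT_3D_INPUT_RANGE 0.0 1.0',
  '0.026979 0.027936 0.031946',
  '0.028308 0.028680 0.033007',
].join('\n');

const cube = parseCube(sample);
console.log(cube.size, cube.rows.length); // 33 2
```

As far as I know, the rows in .cube files are ordered with red changing fastest, then green, then blue, so a complete file with `LUT_3D_SIZE 33` holds 33³ = 35937 rows.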

This is a very complex set of details to get right, and we get it all baked into one simple LUT. Below is the resulting LUT of this transformation process. Even if you don’t like the result stylistically, this is about unlocking the potential of a heavyweight color grading suite for use in your graphical applications.

Screenshot, in case WebGL doesn't work

WebGL Vertex Shader fullscreen-tri.vs

attribute vec2 vtx;
attribute vec2 UVs;
varying vec2 tex;

void main()
{
    tex = UVs;
    gl_Position = vec4(vtx, 0.0, 1.0);
}

WebGL Fragment Shader video-3Dlut.fs

precision mediump float;
varying vec2 tex;
uniform sampler2D video;
uniform sampler2D lut;

/* 3D Texture Lookup from
   https://github.com/WebGLSamples/WebGLSamples.github.io/blob/master/color-adjust/color-adjust.html */
vec4 sampleAs3DTexture(sampler2D tex, vec3 texCoord, float size)
{
    float sliceSize = 1.0 / size;                  // space of 1 slice
    float slicePixelSize = sliceSize / size;       // space of 1 pixel
    float width = size - 1.0;
    float sliceInnerSize = slicePixelSize * width; // space of size pixels
    float zSlice0 = floor(texCoord.z * width);
    float zSlice1 = min(zSlice0 + 1.0, width);
    float xOffset = slicePixelSize * 0.5 + texCoord.x * sliceInnerSize;
    float yRange = (texCoord.y * width + 0.5) / size;
    float s0 = xOffset + (zSlice0 * sliceSize);
    float s1 = xOffset + (zSlice1 * sliceSize);
    vec4 slice0Color = texture2D(tex, vec2(s0, yRange));
    vec4 slice1Color = texture2D(tex, vec2(s1, yRange));
    float zOffset = mod(texCoord.z * width, 1.0);
    return mix(slice0Color, slice1Color, zOffset);
}

void main(void)
{
    vec3 videoColor = texture2D(video, tex).rgb;
    vec4 correctedColor = sampleAs3DTexture(lut, videoColor, 32.0);
    gl_FragColor = correctedColor;
}

WebGL Javascript fullscreen-tri.js