It’s quite the tradition among Japanese learners to publish parts of their Anki Mining decks, so others may get inspired by them or straight up use them. This ~1000 card deck is an excerpt of my Mining deck, which was and still is being created in part from the video game Genshin Impact.

Mining as defined by animecards: A mining deck is a custom Anki deck that you create using the Japanese you encounter daily. Transitioning from pre-made decks to custom decks is vital. Don’t get stuck on pre-made decks. At this point, you’re learning directly from material relevant to you and targeting gaps in your knowledge.

Link to the deck on AnkiWeb. All cards have in-game sound and a screenshot, and almost all additionally have a dictionary sound file and pitch accent. This post goes into the thought process behind the deck and how it was created, and has sound clips below every screenshot for reference.

Of course, using someone else's Mining deck defeats the point of a mining deck, so this article is mainly to just document my workflow and to provide a jumping-off point for others setting up their own.

Why Genshin Impact? #

A couple of things come together to make Genshin quite the enjoyable learning experience. The obvious first: except for minor side quests, all dialogs are voiced and progress sentence by sentence, click by click, as is common in JRPGs and visual novels. This gives enough time to grasp the dialog’s content.

In-game time does not stop during dialogs except in some quests, so sometimes multiple in-game days would pass while I worked out the contents of a dialog.

Another point is the writing style. A hotly debated topic in the player base is whether or not the addition of Paimon hurts the delivery of the story. The character constantly summarizes events as they happen and repeats commands or requests that were given by another character just moments ago in the same dialog.

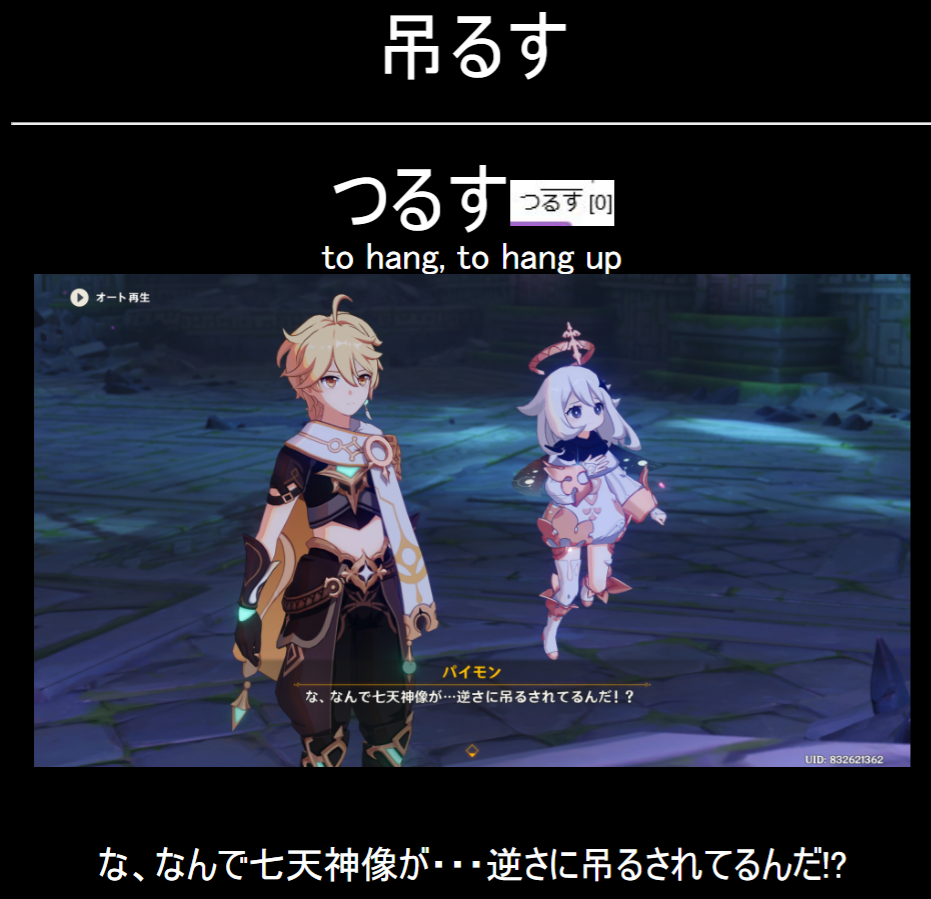

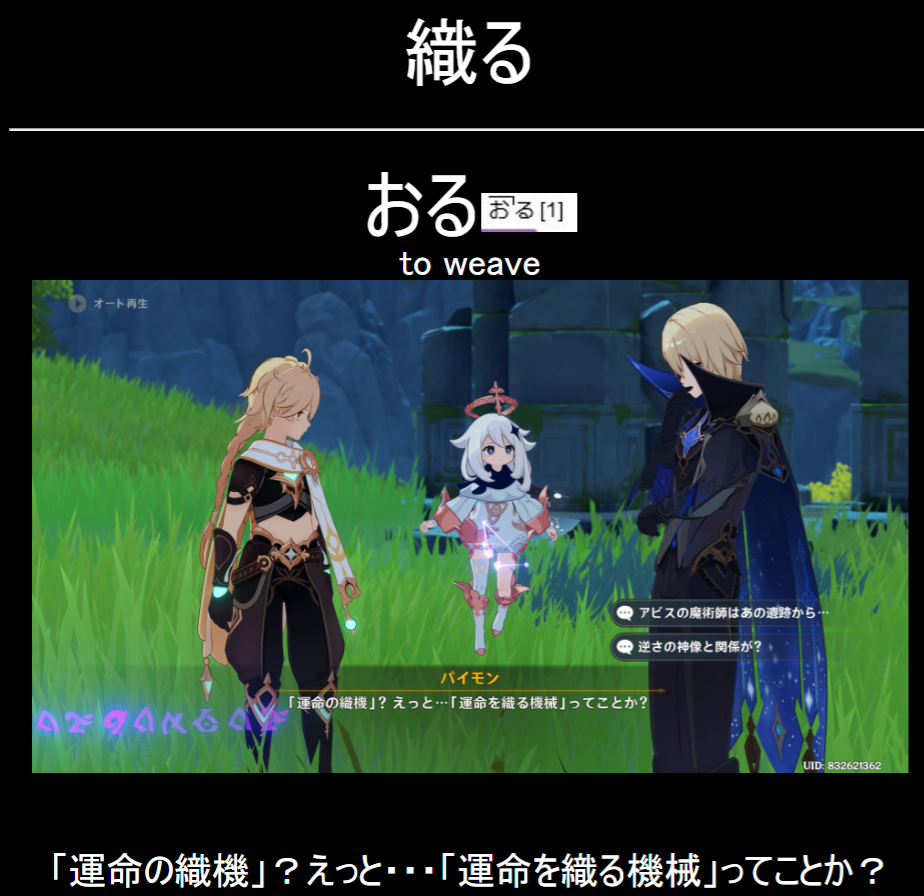

The main criticism often brought up is that this makes the story flow very child-like, which is a rather obvious design goal of the game: catering to a younger audience. What may be an eyesore for many a player, though, is a godsend for a language learner. Paimon often describes a situation that the player witnessed mere moments ago, making the actual meaning of a sentence trivial to understand.

And sometimes Paimon straight up becomes a dictionary herself and defines a word like a glossary text would.

There is a very dialog- and lore-heavy storyline in Dragonspine involving Albedo, which has some of the most information-dense dialog in the game. Paimon often commented on how she had completely lost the plot and didn’t understand anything. During that storyline, I felt as if Paimon was sympathizing with me as I battled my way to understanding it.

For me, Paimon really made this game shine as a learning tool.

The good outcome #

I’m constantly surprised by how much Genshin has propelled my speech forward. It is similar to a movie you can quote decades later, or to video game moments being etched into memory.

There is something about the media we consume that makes it stick.

I found myself using and recalling vocabulary acquired via Genshin faster than vocabulary from other sources. Or maybe it’s just that video games make you engage with their world and characters for far longer, and with much more intensity, than other forms of indirect study could.

The dangerous outcome #

It’s common knowledge that media uses artistic delivery in speech, has speech patterns rarely used in everyday life and uses a stylized way of writing. Basically all of it is 役割語. Knowing that full well I still managed to trip up. Case in point:

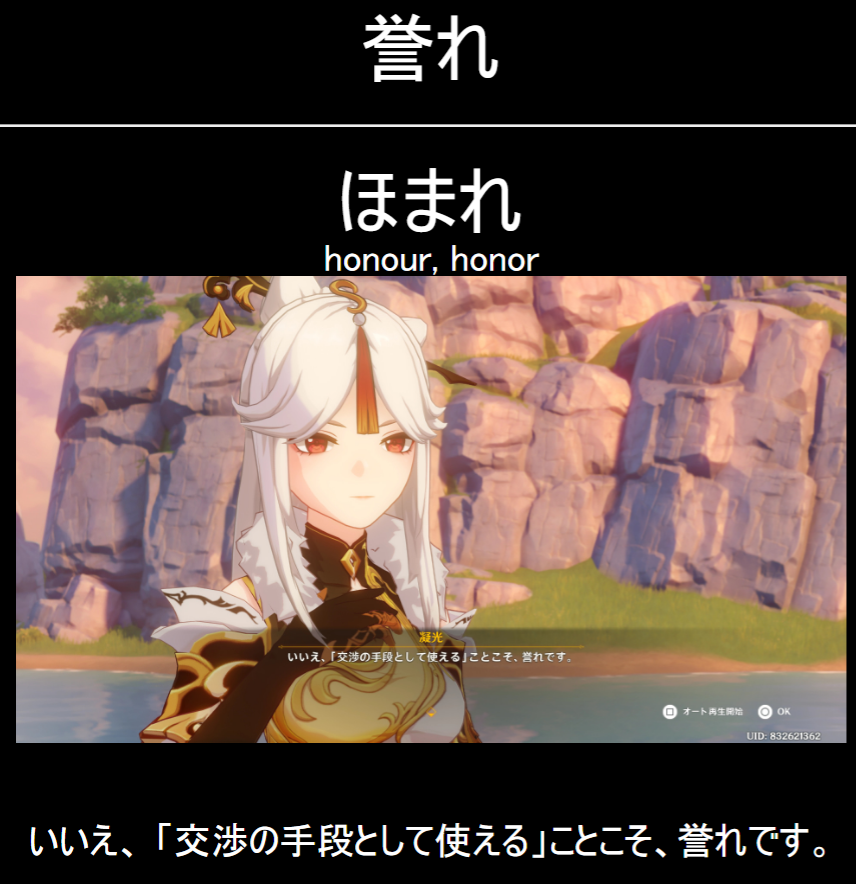

I used the 誉れです expression instinctively from time to time, until someone noted that this expression has a rather archaic, regal tone. It was quite the funny situation, but it goes to show that even knowing what kind of media I was consuming didn’t save me from tripping up.

Coming from outside the language, it’s unavoidable to misinterpret an expression’s nuance, I guess. Though in this case, the in-game dialog really should have tipped me off, as the character speaking, Ningguang, uses it to tell a joke with a sarcastic undertone.

Info and Structure #

Since this is a mining vocabulary deck, it carries words *I* did not know during in-game dialog. Let’s pin-point the range of words in the deck by referencing others.

- I already finished the Improved Core3k deck, so there are zero duplicates between this deck and Core3k. Besides that, I started the deck shortly before my JLPT N4 exam and am now N3/N2. Words in the deck are essentially N3 and up, with some easy ones sprinkled in. No in-universe words are saved, like モラ or 目狩り令.

  - The AnkiWeb account that authored Improved Core3k is gone, and thus only the archive is linked. The closest comparison is Core 2.3k Version 3, so there are no duplicates between Core 2.3k Version 3 and this deck.

By the way, the Core series of decks loosely represents the most used words by frequency. Core3k represents the top 3000 Japanese words.

- The deck captures the main storyline and a few side quests / story quests from the beginning up to Inazuma’s second chapter.

- I always learn both Japanese -> English and English -> Japanese. Whether this is useful or a massive waste of time is hotly debated. For me, switching to learning both directions has been nothing but great, but it is not the default on AnkiWeb and, it seems, not a popular opinion. To fit with the default, I have disabled the English -> Japanese card type.

Personally, if I can only name a synonym in the English -> Japanese direction, I still let it pass as HARD the first time, and grade it AGAIN if I can still only name a synonym once the card returns.

- For the in-game subtitles, OCR sometimes failed. I corrected small mistakes, but when it output complete garbage, I added a cropped version of just the text in the screenshot to the sentence section instead. I did not always double-check the OCR output, so mistakes will occasionally come up in the in-game dialog’s transcript. If something looks weird in the example sentences, check the in-game screenshot for the correct transcript.

- To fit inside the AnkiWeb limit of 256 MB, all images were resized from the 1080p originals to fit within a 1366x768 rectangle and saved at an aggressive JPEG quality of 81%, and all in-game dialog clips are mono MP3 files.

- When I write “here: …”, I am referring to a word being used in a more specialized sense in the in-game dialog, like 人目 vs 人目を忍ぶ. In those cases, two definitions are provided. This makes the learning process a bit more compact and avoids being unable to translate a sentence because the card carries only half of the definition.

- Characters speaking Kansai dialect have received their own tag “Kansaidialect”.
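The image resizing mentioned in the list above can be sketched in a few lines of Python. This is a minimal sketch, not the tool I actually used: the function names are made up for illustration, and the second function assumes the third-party Pillow library is installed. The first function only computes the target size, so the arithmetic is easy to check.

```python
def fit_within(width, height, max_w=1366, max_h=768):
    """Return the largest size that fits the box while keeping the aspect ratio.

    Images that are already small enough are left at their original size.
    """
    scale = min(max_w / width, max_h / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def resize_screenshot(src_path, dst_path, quality=81):
    """Shrink one screenshot and save it as a JPEG (hypothetical helper)."""
    # Imported here so the size calculation above works without Pillow.
    from PIL import Image  # third-party: pip install Pillow

    with Image.open(src_path) as im:
        im = im.convert("RGB")        # JPEG has no alpha channel
        im.thumbnail((1366, 768))     # in-place, keeps the aspect ratio
        im.save(dst_path, "JPEG", quality=quality)


if __name__ == "__main__":
    print(fit_within(1920, 1080))
```

A 16:9 screenshot is limited by the height of the box, so a 1080p image ends up slightly narrower than 1366 pixels.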

Grammar #

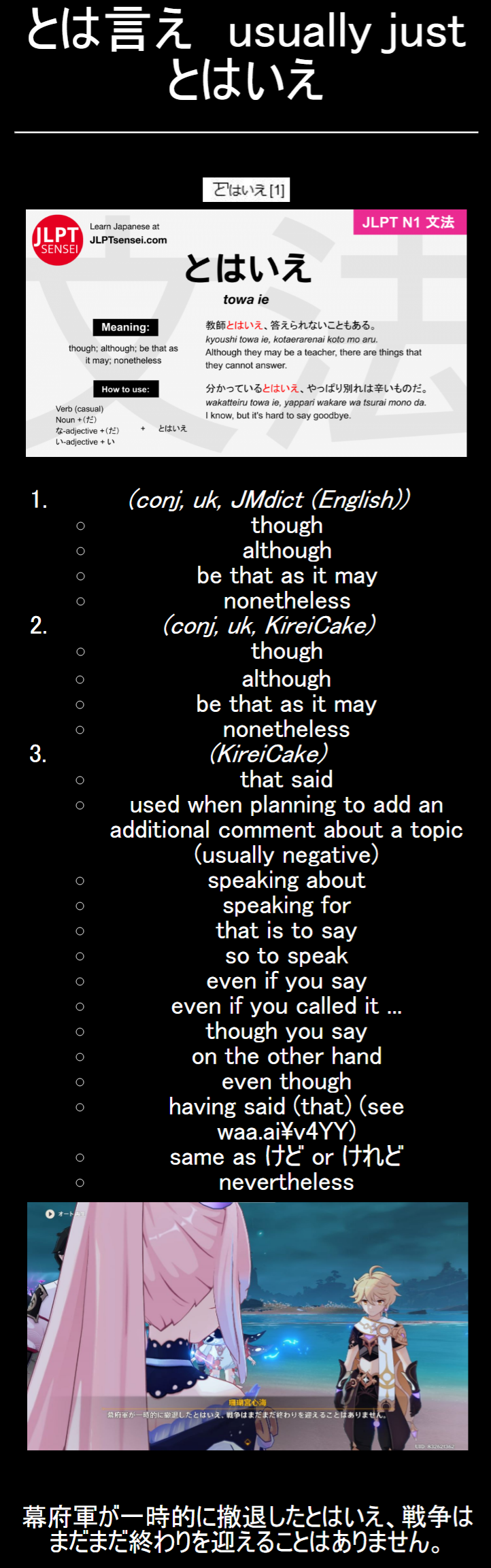

I also have a bunch of grammar cards mixed in, created whenever I encountered a new piece of grammar and recognized it as such. For those, I pasted the excellent JLPT Sensei summary images.

A big surprise to me was that the Yomitan dictionary “KireiCake” contains URL-shortened links from time to time, like waa.ai/v4YY in the card above. In this case, it leads to an in-depth discussion on Yahoo about that grammar point. (Archive Link, in case it goes down)

Tidbits like these… The love and patience of the online Japanese learning community is truly magnificent: from /djt/ threads on imageboards, to user scripts connecting kanji learning services, to a collection of example recordings from anime.

This dedication to helping each other out has me in awe.

To translate or not to translate #

In the beginning, I toggled the game to English to screenshot the English version for the card’s back side; see the example card below. However, on recommendation from members of the English-Japanese Language Exchange Discord server, I stopped doing so, mainly because of localization discrepancies between the two versions.

These differences got especially heavy when more stylized dialogue was involved. The other reason is that this is not recommended for mining in general. Quoting from the Core3k description:

Don’t use the field ‘Sentence-English’ in your mined cards. In fact, get rid of it once you have a solid understanding of Japanese. When you mine something you should already have understood the sentence using the additional information on your cards.

How it was captured #

If you are completely new to the Mining workflow, check out AnimeCards.site before jumping into my specific workflow.

The main workhorse of everything is Game2Text, though the setup in the case of Genshin is not straightforward. Game2Text is a locally run server that opens as localhost in your browser. Game2Text then allows you to combine a couple of things:

Capture a window’s content via the native window capture feature of Firefox and Chrome, run a region of the window through OCR, using either the offline and open-source Tesseract or the more powerful online service OCR.space, and finally translate the text with the help of popular plug-ins like Yomichan or its new, active community fork Yomitan.
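To give a rough idea of what the OCR step amounts to, here is a minimal sketch that runs the Tesseract command-line tool on an already cropped screenshot. It is not Game2Text’s code: the function names are hypothetical, and it assumes that `tesseract` is on the PATH with the Japanese language data (`jpn`) installed.

```python
import shutil
import subprocess


def tesseract_command(image_path, lang="jpn", psm=6):
    """Build the CLI call; 'stdout' makes Tesseract print the text instead of writing a file."""
    # --psm 6 treats the image as a single uniform block of text,
    # which is a reasonable guess for a cropped subtitle box.
    return ["tesseract", str(image_path), "stdout", "-l", lang, "--psm", str(psm)]


def ocr_region(image_path, lang="jpn"):
    """Return the recognized text of a cropped screenshot (hypothetical helper)."""
    if shutil.which("tesseract") is None:
        raise RuntimeError("tesseract was not found on the PATH")
    result = subprocess.run(
        tesseract_command(image_path, lang),
        capture_output=True,
        text=True,
        encoding="utf-8",
        check=True,
    )
    return result.stdout.strip()
```

Calling `ocr_region("subtitle.png")` on a cropped dialog box would return the transcript as a string, mistakes included.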

Game2Text has native AnkiConnect integration, which builds Anki cards from the captured screenshot, the currently selected word and a dictionary definition. However, this native Anki integration sometimes fails to recognize phrases that the more complex dictionary suites in Yomichan detect. Luckily, Game2Text can essentially give you the best of both worlds, since you can just use Yomichan directly to create a card.

Though in that case, you have to handle screenshots yourself.
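For anyone who would rather script that step than paste screenshots by hand, here is a minimal sketch of an AnkiConnect `addNote` request that attaches an image. It assumes Anki is running with the AnkiConnect add-on on its default port; the deck name, note type and field names are placeholders and not the ones used in my deck.

```python
import base64
import json
import urllib.request

ANKI_CONNECT_URL = "http://127.0.0.1:8765"  # AnkiConnect's default address


def build_add_note(word, sentence, definition, screenshot_bytes, filename):
    """Build the JSON payload for AnkiConnect's addNote action."""
    return {
        "action": "addNote",
        "version": 6,
        "params": {
            "note": {
                "deckName": "Mining::Genshin",  # placeholder deck name
                "modelName": "Mining",          # placeholder note type
                "fields": {                      # placeholder field names
                    "Word": word,
                    "Sentence": sentence,
                    "Definition": definition,
                },
                "tags": ["genshin"],
                "picture": [
                    {
                        # The image is sent inline as base64 and stored under 'filename'.
                        "data": base64.b64encode(screenshot_bytes).decode("ascii"),
                        "filename": filename,
                        "fields": ["Picture"],  # placeholder field that receives the image
                    }
                ],
            }
        },
    }


def send(payload):
    """POST the payload to AnkiConnect and return the result (the new note ID for addNote)."""
    request = urllib.request.Request(
        ANKI_CONNECT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    if reply.get("error"):
        raise RuntimeError(reply["error"])
    return reply["result"]
```

An `audio` list with the same shape can be added next to `picture` to attach the dialog clip in the same request.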

Genshin fails to get captured by the browser unless it is in windowed mode. Unfortunately, there is no proper borderless window mode in Genshin, unless you use a patcher to get one. Another option is to run OBS in administrator mode, capture the game, open up a full-screen “source monitor” window of Genshin’s source signal and let the browser capture that.

This is the option I went with on my Desktop PC.

On my laptop with a dedicated Nvidia GPU, however, this leads to massive performance loss, presumably due to some weird interaction between the iGPU and the main GPU causing a memory bandwidth bottleneck. In that case, I used the borderless gaming patcher.

If Game2Text has a hook script for the game in question, then it can hook into the game’s memory and read out the dialog strings, forgoing the sometimes imprecise OCR. No such hook script exists for Genshin Impact. I tried to create one by poking around with Cheat Engine, but there was no obvious block of strings in memory. I decided to drop that approach out of worry about having my account banned.

If OCR is imprecise, there is always the onscreen dialog box to check against.

Handling Audio #

Across multiple systems, however, Game2Text fails to create a card with sound for me. It successfully captures sound in .wav files, but transcodes them to fully silent .mp3 files, which it attaches to the cards. So instead of working with the .wav files, I simply let Audacity capture the sound output in a non-stop recording, using its Windows WASAPI mode and the speaker loopback recording method this unlocks. During dialog, I would select the needed passage and, via a macro bound to a hotkey, perform the conversion to mono, normalization and export to an MP3 file.

Originally, I normalized all audio by setting the peak sample to -3 dB via Audacity. This turned out to be not quite optimal, as the range of voice profiles is very broad. With peak sample normalization, bright and dark voices did not end up playing back at the same loudness, since it does not account for human hearing being more sensitive to some frequencies than to others.
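A small sketch can show why matching peaks says little about how loud a clip is. It uses two synthetic signals rather than audio from the deck, all names are made up for illustration, and it only compares average level (RMS). The frequency sensitivity of human hearing is a separate effect that this sketch does not model.

```python
import math


def peak_normalize(samples, target_dbfs=-3.0):
    """Scale the samples so that the largest absolute value sits at target_dbfs."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)
    gain = 10 ** (target_dbfs / 20) / peak
    return [s * gain for s in samples]


def rms_dbfs(samples):
    """Average level in dB relative to full scale (1.0)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)


def demo_signals():
    """A steady sine wave and a quiet signal with a few loud spikes."""
    sine = [math.sin(2 * math.pi * k / 1000) for k in range(1000)]
    spiky = [0.9 if k % 100 == 0 else 0.05 for k in range(1000)]
    return sine, spiky


if __name__ == "__main__":
    for name, signal in zip(("sine", "spiky"), demo_signals()):
        normalized = peak_normalize(signal)
        peak_db = 20 * math.log10(max(abs(s) for s in normalized))
        print(f"{name}: peak {peak_db:.1f} dBFS, RMS {rms_dbfs(normalized):.1f} dBFS")
```

Both signals end up with the same peak, yet their average levels are far apart, which is the kind of mismatch peak normalization cannot correct.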

I batch-reencoded every audio file to be normalized to a loudness of -15 LUFS instead, the more modern approach. (Conversion workflow explained here) Although the difference was subtle, the dialogue sounded a bit more balanced from card to card after that.

It would be optimal to have no music mixed in with the dialog, for the sake of cleaner dialog sound during card reviews. However, the music is so incredibly good that it would not have been even half as enjoyable to go through the game without it. So background music often plays with the cards.

The worst offender is Liyue Harbor's background track, which manages to drown out dialog during its crescendo.
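One common way to do this kind of batch loudness normalization is FFmpeg’s `loudnorm` filter. The sketch below is an assumption on my part about how such a batch could look, not necessarily the linked conversion workflow: it needs `ffmpeg` on the PATH, the folder names are placeholders, and the true peak and loudness range values are illustrative choices.

```python
import subprocess
from pathlib import Path


def loudnorm_command(src, dst, target_lufs=-15.0):
    """Build an ffmpeg call that normalizes integrated loudness and outputs mono."""
    return [
        "ffmpeg", "-y",
        "-i", str(src),
        # I = integrated loudness target, TP = true peak ceiling, LRA = loudness range
        "-af", f"loudnorm=I={target_lufs}:TP=-1.5:LRA=11",
        "-ac", "1",       # mono
        "-ar", "44100",   # set the output sample rate explicitly
        str(dst),
    ]


def normalize_folder(src_dir, dst_dir):
    """Re-encode every MP3 in src_dir into dst_dir (placeholder folder layout)."""
    dst_dir = Path(dst_dir)
    dst_dir.mkdir(parents=True, exist_ok=True)
    for src in sorted(Path(src_dir).glob("*.mp3")):
        subprocess.run(loudnorm_command(src, dst_dir / src.name), check=True)
```

This runs the filter in a single pass, which is the simpler option; a two-pass run that measures each file first hits the target more precisely.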

The actual workflow #

When I did not recognize a word, I would tab out of the game and select the rectangle in Game2Text to get the transcript. I would then use Yomichan to aid me in understanding the sentence. When the “logs” screen of Game2Text properly recognized the phrase in question, I would then use it to create the card.

Otherwise, I would use Yomichan and manually paste the screenshot. I also took a screenshot of Yomichan’s pitch accent display and pasted it into the reading field. Finally, I would tab into Audacity, select the needed passage, press the hotkey to export the sound as an MP3 into a folder I had open, and drag and drop the file onto the current Anki card.

Sometimes, there was no dictionary sound reading in the Game2Text log screen, but there was one in Yomichan, or vice versa. In that case, I would play the dictionary sound from the other source, let Audacity capture it and again export that sound passage. (You can get the sound file directly, but this was faster.)

Finally, if neither of the two sources had a dictionary reading, I would manually check the JapanesePod101 dictionary, which surprisingly has sound files for a ton of obscure vocab readings. Just make sure to un-tick ‘most common 20.000 words’, tick ‘Include vulgar words’ and switch the mode from ‘Is’ to ‘Starts with’ to find them.

This concludes my little write-up about the Genshin Impact part of my Anki mining deck. Hope you found it useful.